I have to write a script that can load 100k objects into a scene. I read somewhere that you can achieve this using particles, but is there any way to do it without particles?

You can manipulate the scene as you want through the Python API. But that many actual scene objects is stretching it in terms of interactive performance and things like selecting objects in the outliner.

Do you really need that many individual objects, or do you merely need the same object duplicated 100k times (which will be easier using a particle system)?

Yeah, a particle system solves the problem if the objects are just duplicates. But I need to be able to edit the objects individually.

So far I have managed to load scenes with around 35k objects using Collection Instances, but if I try to push the number even a bit further, object creation becomes dramatically slower. I also tried the Vertex Dupli method, but I ran into the same problem.

You can try to divide your scene into multiple .blend files, then link a collection from each of them, for example 30k objects per linked collection. That way I think you would be able to work with it.
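A minimal sketch of the linking step, assuming Blender 2.8 or later and chunk files that already contain collections with known names (the file path and collection names in the usage note are hypothetical). `bpy.data.libraries.load(..., link=True)` links the collections, and each one is then shown through a single collection-instance empty, so the working file only stores those empties. `chunk_ranges` is a hypothetical helper for deciding how many seats go into each file.

```python
try:
    import bpy  # only available when running inside Blender
except ImportError:
    bpy = None


def chunk_ranges(total, chunk_size):
    """Split `total` seats into (start, count) pairs, e.g. one pair per .blend file."""
    return [(start, min(chunk_size, total - start))
            for start in range(0, total, chunk_size)]


def link_and_instance(blend_path, collection_names, target=None):
    """Link collections from another .blend file and add one instancer empty each.

    Linked data stays in its own library file, so the working file only stores
    the small instancer empties, not every object inside the collections.
    """
    if target is None:
        target = bpy.context.scene.collection
    with bpy.data.libraries.load(blend_path, link=True) as (data_from, data_to):
        # data_from.collections is a list of collection names in the library file
        data_to.collections = [n for n in data_from.collections if n in collection_names]
    instancers = []
    for coll in data_to.collections:
        if coll is None:  # this name could not be loaded
            continue
        empty = bpy.data.objects.new(coll.name, None)
        empty.instance_type = 'COLLECTION'
        empty.instance_collection = coll
        target.objects.link(empty)
        instancers.append(empty)
    return instancers
```

For example, `chunk_ranges(70000, 30000)` gives `[(0, 30000), (30000, 30000), (60000, 10000)]`, i.e. three files, and `link_and_instance("//venue_part1.blend", {"Seats_A"})` would link one of them. One trade-off: linked objects are read-only in the working file, so editing an individual seat means opening its chunk file (or using a library override).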

Sounds reasonable. Just out of curiosity, does each .blend file have a memory limit?

nope, there is no memory limit AFAIK.

But it has a depsgraph that will iterate over ALL the objects at times, which makes it slow; by using linked collections you avoid that.

We do it that way to work with super big scenes

Another thing that will improve is saving/loading times, since your working scene will be lightweight: it won't actually contain all those objects.

Thx!!! imma try this out

I hope it works out for you

I just started coding and I have a question: this cannot be done using only the threading module, right?

https://docs.blender.org/api/blender_python_api_2_64_1/info_gotcha.html#strange-errors-using-threading-module

So I will have to use Python's multiprocessing module to manage the main process and the process that modifies the temporary .blend file, right? Or is there any workaround for this?

I don't fully understand what you mean; maybe @sybren can tell you something, but I think more details are needed to understand what you are doing.

Multi-threading or multi-processing can be useful when your script needs to do a lot of work. However, with 100k objects in memory, it’s Blender itself that’ll be struggling. What is your goal? Why do you need 100k objects at all?

I'm working with a really big scene that holds “Venues” with “Seats”. Sometimes the number of seats can go up to 70k. Right now I have an add-on that “can” load scenes with around 70k seats, but it takes A LONG TIME. Once everything is loaded, moving around the scene is still manageable using collection instances.

I have noticed that once memory use reaches around 400 MB, even creating an empty with `bpy.data.objects.new("empty", None)` starts to slow down. That's why I thought about using the multiprocessing module from the main process to create a temporary .blend file, in which the venue is built in steps so there are never many objects in memory at once. At the end of the process, all the created objects would be appended from the temporary file into the main .blend file.
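A minimal sketch of the worker side of that plan, assuming Blender is started in background mode with the script and the arguments after `--`. The script name `build_chunk.py`, the argument order and the `Seat_000123`-style names are hypothetical. Each worker only ever holds its own chunk of objects, so its name checks stay cheap.

```python
import sys

try:
    import bpy  # only available when the script runs inside Blender
except ImportError:
    bpy = None


def parse_worker_args(argv):
    """Read OUT_PATH START COUNT from the arguments Blender leaves after '--'."""
    if "--" not in argv:
        raise ValueError("expected: -- OUT_PATH START COUNT")
    args = argv[argv.index("--") + 1:]
    if len(args) != 3:
        raise ValueError("expected: -- OUT_PATH START COUNT")
    out_path, start, count = args
    return out_path, int(start), int(count)


def build_chunk(out_path, start, count):
    """Create `count` seat empties in a fresh file and save it to `out_path`."""
    bpy.ops.wm.read_factory_settings(use_empty=True)  # start from an empty scene
    chunk = bpy.data.collections.new(f"Seats_{start:06d}")
    bpy.context.scene.collection.children.link(chunk)
    for i in range(start, start + count):
        # Plain empties as placeholders; a real add-on would set
        # instance_type / instance_collection to the seat model here.
        seat = bpy.data.objects.new(f"Seat_{i:06d}", None)
        chunk.objects.link(seat)
    bpy.ops.wm.save_as_mainfile(filepath=out_path)


if __name__ == "__main__" and bpy is not None:
    build_chunk(*parse_worker_args(sys.argv))
```

It would be run as `blender --background --python build_chunk.py -- /tmp/chunk_000000.blend 0 10000`. Appending every chunk back into one main file is likely to bring part of the per-object cost back, because appended objects become local and need unique names in that file; linking the chunk collections instead avoids that.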

Does that sound reasonable?

PS: I just tested multi-threading in a single process and the result is even slower. I guess that makes sense, since the documentation says:

> **Note**
>
> Python threads only allow concurrency and won't speed up your scripts on multi-processor systems; the subprocess and multiprocess modules can be used with Blender and make use of multiple CPUs too.
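Following that note, a minimal sketch of the parent side, assuming a worker script (hypothetical name `build_chunk.py`) that builds one chunk of objects and saves it to the path passed after `--`. It uses `subprocess` to start several background Blender processes and a thread pool only to wait on them; the chunk size, worker count and output directory are assumptions to tune.

```python
import os
import subprocess
from concurrent.futures import ThreadPoolExecutor


def build_commands(blender_exe, script, out_dir, total, chunk_size):
    """Return (out_path, command) pairs, one background Blender run per chunk."""
    jobs = []
    for start in range(0, total, chunk_size):
        count = min(chunk_size, total - start)
        out_path = os.path.join(out_dir, f"chunk_{start:06d}.blend")
        cmd = [blender_exe, "--background", "--factory-startup",
               "--python", script, "--", out_path, str(start), str(count)]
        jobs.append((out_path, cmd))
    return jobs


def run_jobs(jobs, max_workers=4):
    """Run the Blender processes in parallel and return the files they wrote.

    Threads are fine here: each thread only waits on its own Blender process,
    and those processes do the real work on separate CPU cores.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # check=True raises CalledProcessError if any Blender run fails
        list(pool.map(lambda job: subprocess.run(job[1], check=True), jobs))
    return [out_path for out_path, _ in jobs]
```

For example, `run_jobs(build_commands("blender", "build_chunk.py", "/tmp/venue", 70000, 10000))` would start seven runs, four at a time. From inside Blender, `bpy.app.binary_path` gives the path of the running executable, which can be passed as `blender_exe`.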

I would certainly consider using instances for this. Not every seat has to be its own individual object. The seats are probably arranged in sections, and each section in rows. I would make a collection for a row, then instance that a bunch of times to create the sections. Such a section would also be a collection, which can then be instanced across the venue.
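A rough sketch of that seat → row → section hierarchy, assuming Blender 2.8+ and an existing seat collection. All names, spacings (in meters) and helper functions here are hypothetical; each level is a collection whose contents are empties instancing the level below.

```python
try:
    import bpy  # only available inside Blender
except ImportError:
    bpy = None


def row_offsets(seats, spacing):
    """Seat positions along X within one row."""
    return [(i * spacing, 0.0, 0.0) for i in range(seats)]


def tier_offsets(rows, depth, rise):
    """Row positions within a section: each row further back and higher up."""
    return [(0.0, r * depth, r * rise) for r in range(rows)]


def add_instance(source, name, location, target):
    """Create an empty that instances `source` and link it into `target`."""
    empty = bpy.data.objects.new(name, None)
    empty.instance_type = 'COLLECTION'
    empty.instance_collection = source
    empty.location = location
    target.objects.link(empty)
    return empty


def build_section(seat, name, seats_per_row, rows, spacing=0.5, depth=0.9, rise=0.4):
    """Seat -> row collection -> section collection, each level instancing the one below."""
    row = bpy.data.collections.new(f"{name}_row")
    for i, loc in enumerate(row_offsets(seats_per_row, spacing)):
        add_instance(seat, f"{name}_seat_{i}", loc, row)
    section = bpy.data.collections.new(name)
    for r, loc in enumerate(tier_offsets(rows, depth, rise)):
        add_instance(row, f"{name}_row_{r}", loc, section)
    return section
```

Usage in Blender: `add_instance(build_section(bpy.data.collections["LP_01"], "S_303L", 30, 20), "S_303L", (0, 0, 0), bpy.context.scene.collection)`. That draws 600 seats from 51 new objects (30 seat instancers, 20 row instancers and one section instancer). The trade-off is that every row instance shows the same seats, so a seat that needs individual edits has to live outside the shared row collection.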

Each bpy.data.objects.new(…) call has to check for name collisions, and this requires scanning all the already-existing objects. This is why it is slow with that many objects.
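If every `bpy.data.objects.new()` call compares the new name against all existing objects, as described above, creating the k-th object costs roughly k comparisons, so the total for n objects grows quadratically. This is a simplified model of that behaviour, not a measurement of Blender's actual code:

$$
\sum_{k=0}^{n-1} k = \frac{n(n-1)}{2} \approx \frac{n^2}{2}
$$

For n = 35,000 that is about 6.1 × 10^8 comparisons, and for n = 70,000 about 2.45 × 10^9: doubling the object count roughly quadruples the name-checking work, which fits creation getting disproportionately slower past a certain size rather than simply twice as slow.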

It’s not Python itself that’s being slow here, it’s the complexity of your blend file that’s slowing things down.


I think I’m already using instances for this:

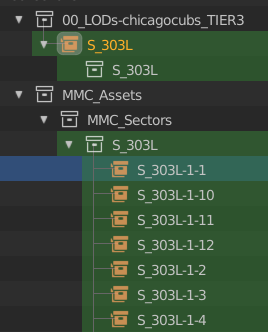

For example, S_303L is an instance of a collection that contains instances of a collection with its seats.

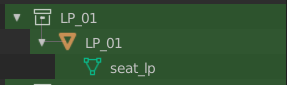

And each seat, for example S_303L-1-1, uses an instance of a collection, in this case LP_01, where the real object is located. Am I doing this wrong?
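One way to check whether a hierarchy like this actually saves objects is to compare how many seats get drawn with how many objects have to exist in the file. A minimal sketch, assuming the `objects`, `children`, `instance_type` and `instance_collection` attributes of Blender 2.8+ collections and objects; `walk_instances` is a hypothetical helper.

```python
def walk_instances(collection, stored):
    """Return how many leaf objects are drawn when `collection` is shown.

    Follows collection instances recursively and adds the name of every
    object that must exist in the file to the `stored` set (shared source
    objects are only counted once, since the set ignores repeats).
    """
    drawn = 0
    for obj in collection.objects:
        stored.add(obj.name)
        source = obj.instance_collection if obj.instance_type == 'COLLECTION' else None
        if source is not None:
            drawn += walk_instances(source, stored)  # count what the instance shows
        else:
            drawn += 1  # a real object drawn as itself
    for child in collection.children:
        drawn += walk_instances(child, stored)
    return drawn
```

In Blender: `stored = set(); drawn = walk_instances(bpy.data.objects["S_303L"].instance_collection, stored)`. If `len(stored)` is close to `drawn`, as it would be when every seat such as S_303L-1-1 is its own instancer, instancing is not reducing the object count; instancing whole rows keeps `len(stored)` much smaller than `drawn`.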

PS: Actually, by using threads and creating objects in batches, creation time went down from 200 s to 160 s. My main question is: if I go with multiprocessing, can I push this even further?

OK, so a quick “dumb” question from an old man:

If you are building a stadium or auditorium, why don't you use an overall shape for blocks of seats, then use an image texture to show the seats, with a bump map? If you have a zoomed-in view showing, say, the first row of seats next to the camera, just make those real meshes. The look is the same in the finished render and the overhead is minimal.

Cheers, Clock.

@clockmender I was thinking something similar. I must be a dumb old man too

I couldn’t possibly comment…

Hahaha, the question is not dumb by any means. I'm developing this for a company that needs the level of detail of being able to edit every single seat as an entity rather than just a block of seats. They already use what you described above.

Just use Clarisse then, it’s designed to handle billions of instances.

Silly question, do you have the instances in bounding box mode?

Also, have you tried to create them as hidden objects? (I’m not sure about this last one)
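If bounding-box display is what is meant here, one option is to set it on the source objects of the seat collection, so every instance of that collection is drawn as a box too. A minimal sketch, assuming Blender 2.8+ where the property is `Object.display_type`; the helper name is hypothetical.

```python
def set_bounds_display(objects):
    """Draw mesh objects as bounding boxes in the viewport; return how many changed."""
    changed = 0
    for obj in objects:
        # Only touch meshes; empties and other types keep their display settings
        if obj.type == 'MESH' and obj.display_type != 'BOUNDS':
            obj.display_type = 'BOUNDS'
            changed += 1
    return changed
```

In Blender: `set_bounds_display(bpy.data.collections["LP_01"].all_objects)`, using the LP_01 seat collection mentioned earlier in the thread. This only changes viewport drawing, not how long it takes to create the objects.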

Anyway, 100k objects should not be so problematic; it's not such a big scene. @sybren, what do you think is the performance bottleneck in Blender here? (Just your opinion, not a solution; I'm wondering where the slowdown in Blender could be.)
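To see where the time goes, one simple check is to time object creation in fixed-size batches. If each batch takes longer than the previous one, the cost per object grows with the number of objects that already exist, which would be consistent with the name-collision explanation earlier in the thread rather than with Python overhead. A minimal sketch; `time_batches` is a hypothetical helper.

```python
import time


def time_batches(create, total, batch_size):
    """Call create(i) for i in range(total); return the seconds spent per batch."""
    durations = []
    for start in range(0, total, batch_size):
        t0 = time.perf_counter()
        for i in range(start, min(start + batch_size, total)):
            create(i)
        durations.append(time.perf_counter() - t0)
    return durations
```

In a throwaway Blender file: `durations = time_batches(lambda i: bpy.data.objects.new(f"Probe_{i:06d}", None), 100000, 10000)`. Roughly constant durations would point away from per-object bookkeeping; steadily rising ones point towards it.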