I’m actually working on a proposal for this. I’m no indentured coder, much less with C++, but on my last project I had… 3D simulated raindrops that had to be scattered both in time and along a surface.

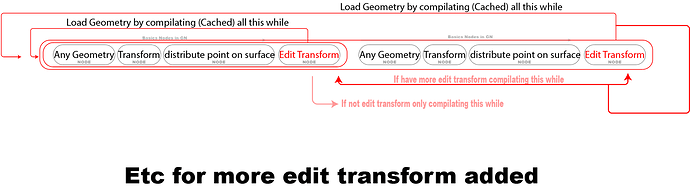

I got around it by using the alembic cache modifier, with modulo in an expression to loop a set of frames and retime them (yes, time compression and stretching). Once I had a looping animation, I could put a geometry node setup over it and copy the loop over the surface. But then they were all instances of one thing, all the frame data at once. It was only 8 frames long, compressed to 4 frames, so I ended up having 12 variations of raindrop (half were the even 4 frames, half were the odd 4 frames) and 4 timings for each one, from first to fourth frame, for a total of 48 simulations. And I needed a separate collection and geometry node scatter setup for every frame, because each frame needed to persist till frame*3.999999+collectionframe.
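For anyone wondering what the modulo trick looks like, here is a rough sketch in plain Python. It is not my exact expression: `looped_frame`, `loop_length`, `speed` and `offset` are names made up for illustration, and I’m assuming a speed of 2 is what squeezes the 8 cached frames into 4.

```python
def looped_frame(frame, loop_length=8, speed=2.0, offset=0):
    """Map a scene frame onto a repeating, retimed cache frame.

    All parameter names are hypothetical. speed > 1 compresses the loop,
    speed < 1 stretches it, and offset delays the start of the loop.
    """
    return ((frame - offset) * speed) % loop_length


# An 8-frame cache played at double speed repeats every 4 scene frames.
cache_frames = [looped_frame(f) for f in range(8)]

# The bookkeeping from the raindrop setup: 12 variations, 4 timings each.
variations = 12
timings = 4
total_simulations = variations * timings
```

Each of the 4 timings would just be a different `offset`, which is exactly the kind of thing that gets tedious to set by hand on every copy.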

Yeah, it’s really cumbersome and slow, and some modifier attributes couldn’t be accessed in bulk. It needs automation.

Ah, I see, I didn’t consider that, but it still mostly falls within my understanding of the simulation being a separate datablock, separately addressable and reusable as a thing.

In some recent testing I also noticed that some kinds of input boxes in modifiers don’t respond well to mass alt-inputs. Is there a legitimate reason this happens? Geometry nodes is all about making things procedural and more automated, yet in alembic cache inputs you cannot use alt to link multiple objects to one cache file at once. This would be an issue if, say, you had dozens of explosions in a shot that were all the same one, with geometry nodes used to rotate and vary them.

But the bake button or filepath to bake to are separate from that.

Now this opens up a bit of a can of worms: if you have multiple simulations in order, do they all appear, or are they simulated as evaluated? While I do like this, there’s the issue that simulations have super complex sets of inputs, and I can scarcely imagine what it would be like to have to input all the mantaflow options through the modifier viewer.

I believe modifier/sim stuff is getting quite complicated and answers will be hard to come by, given that simulations are currently a separate menu for what is basically a really option-heavy modifier, as I understand it.

I think a “field viewer” would be useful. Fields can often be quite difficult to debug.

At the same time, it’s something that a preset node group would be ideal for.