This is just full of misconceptions.

Node trees only get confusing when no encapsulation is used. It’s the same as not using folders or smart materials in SP and having one stack of dozens of layers, each with several filters and mask filters.

I actually ditched SP partly because the layer-based system often got too messy too fast. It’s easy to make something complicated, but then you often spend a lot of time finding exactly what you need to tweak to get rid of that one particular smudge you don’t like. Unless, of course, you invest time into making those layers clean, properly encapsulated and properly named. But you can, and should, do the same with nodes.

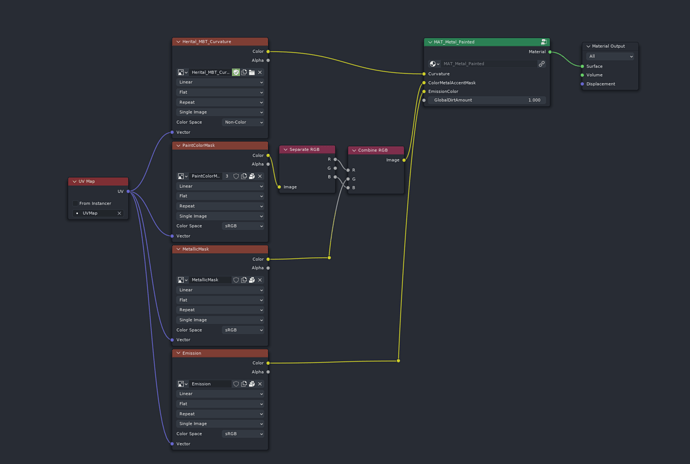

I can speak from first-hand experience, since I am making PBR texture sets for game engine assets, and I replaced SP with Blender to do that. Here’s an example of the workflow:

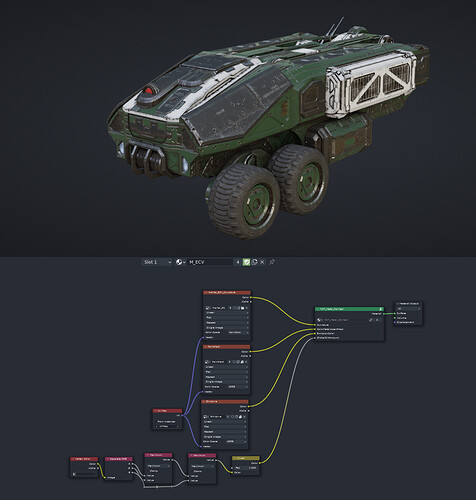

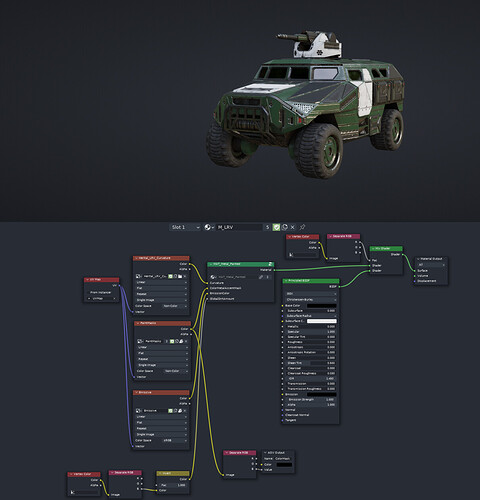

I am making a set of units for an RTS game (RTS Prototype - Game Development - Epic Developer Community Forums):

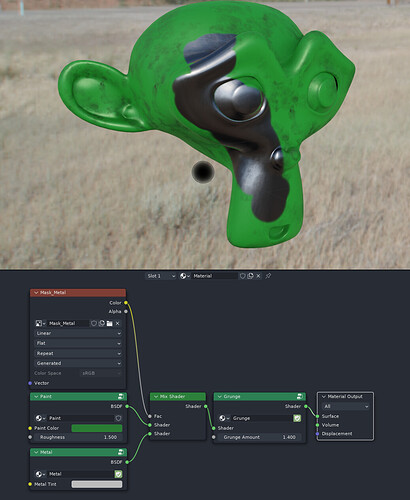

The shading is a typical complex material composed of several different material types, masked with various edge wear, grunge and dirt accumulation in concave areas, combined with hand-painted areas.

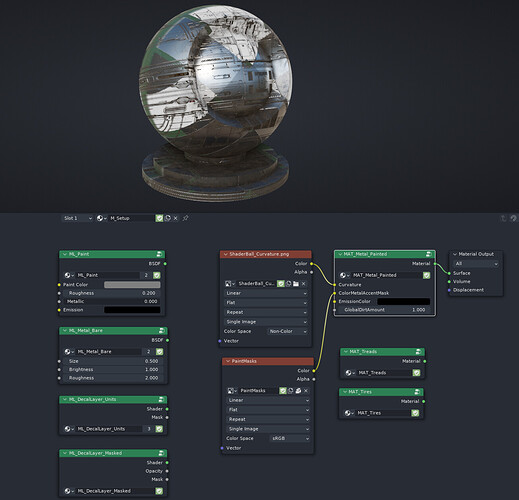

Thanks to the ability of Shader Nodes to encapsulate, I was able to achieve a workflow as clean as, if not cleaner than, what I had with SP. The top-level material, assigned to the tank above, looks like this:

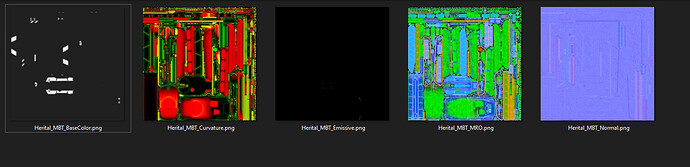

It’s just one material, an abstraction of the “look” of all the vehicles of a given game faction. The top texture is just a convex/concave edge map that masks out convex and concave areas for procedural effects, encoded in the R and G channels:

It’s baked automatically by a single-click script I’ve made.
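The baking script itself isn't shown, so the following is only a minimal sketch of the R/G encoding described above. It assumes a signed per-pixel curvature value has already been baked (positive for convex edges, negative for concave cavities); the function name `encode_convex_concave` and its `strength` parameter are hypothetical, not part of Blender or the author's script.

```python
def encode_convex_concave(curvature, strength=1.0):
    """Split signed curvature values into per-pixel (R, G, B) mask tuples.

    Hypothetical sketch. Assumption: positive curvature = convex edge,
    negative curvature = concave cavity. R holds the convex mask, G the
    concave mask, both clamped to [0, 1]; B is left free (0.0).
    """
    def clamp01(x):
        return max(0.0, min(1.0, x))

    pixels = []
    for c in curvature:
        convex = clamp01(c * strength)    # only positive curvature survives
        concave = clamp01(-c * strength)  # only negative curvature survives
        pixels.append((convex, concave, 0.0))
    return pixels


if __name__ == "__main__":
    # One convex, one concave, one flat and one over-range sample.
    print(encode_convex_concave([0.5, -0.25, 0.0, 2.0]))
```

Splitting one signed value into two clamped masks means each procedural effect (for example edge wear on R, dirt on G) can read its own channel without further remapping.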

The next texture is an RGB-packed texture containing masks for the paint, the exposed metal parts and the accent/faction colors.

The last texture is just an RGB emission texture for glowing components:

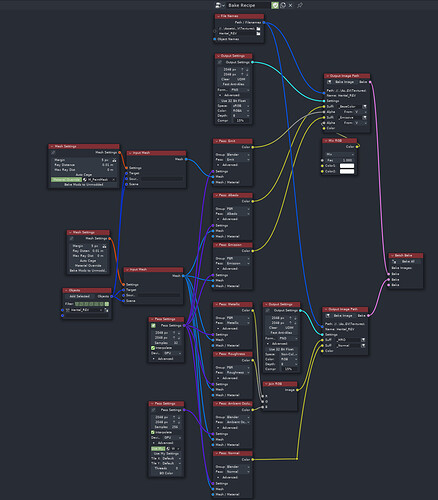

The main material does not actually reside in the tank file. It resides in a linked file, which is shared across all the units of a given faction. So if I decide that all units of that faction will use blue metal, I can just change it in the linked file, and once I rebake the textures, the materials of all the units have changed at once.

The central material file looks like this:

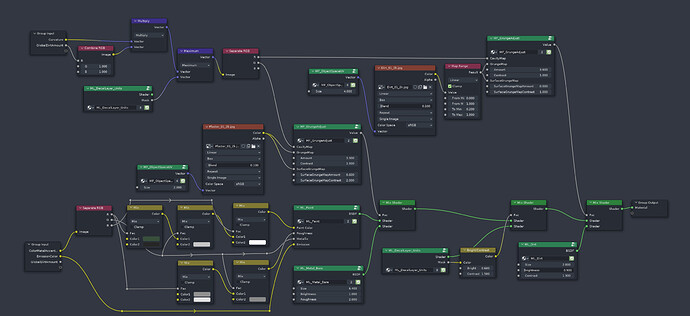

It has a shader ball for material testing, the main material group for vehicles, and, on the left, the more fundamental abstracted materials that the group is built from. Inside the vehicle material, there is no confusing noodle network, just another clean, lower level of abstraction:

At this level, the more fundamental PBR materials are combined into the final material assembly for the vehicles. The channels of the RGB mask texture are interpreted as the desired material properties.
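As a rough illustration of how mask channels can be interpreted as material properties, here is a plain-Python sketch. It assumes a hypothetical assignment of R = paint, G = exposed metal and B = accent, layered over a base material, and it blends material parameters linearly, which is a simplification of what chained Mix Shader nodes do with closures. The `PBR` dataclass and `assemble` function are illustrative names only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PBR:
    """Hypothetical, reduced PBR parameter set."""
    base_color: tuple  # linear RGB
    metallic: float
    roughness: float


def lerp(a, b, t):
    # a*(1-t) + b*t returns exactly a at t=0 and exactly b at t=1.
    return a * (1.0 - t) + b * t


def mix(a: PBR, b: PBR, t: float) -> PBR:
    """Blend every channel of two materials by factor t (0 = a, 1 = b)."""
    return PBR(
        base_color=tuple(lerp(x, y, t) for x, y in zip(a.base_color, b.base_color)),
        metallic=lerp(a.metallic, b.metallic, t),
        roughness=lerp(a.roughness, b.roughness, t),
    )


def assemble(mask_rgb, base, paint, metal, accent):
    """Layer materials by mask channel (assumed R=paint, G=metal, B=accent)."""
    r, g, b = mask_rgb
    result = mix(base, paint, r)
    result = mix(result, metal, g)
    return mix(result, accent, b)


# Example materials (made-up values for illustration).
BASE = PBR((0.5, 0.5, 0.5), 0.0, 0.5)
PAINT = PBR((0.0, 0.25, 0.0), 0.0, 0.75)
METAL = PBR((0.75, 0.75, 0.75), 1.0, 0.25)
ACCENT = PBR((0.0, 0.0, 1.0), 0.0, 0.5)

if __name__ == "__main__":
    print(assemble((0.5, 0.0, 0.0), BASE, PAINT, METAL, ACCENT))
```

Because later layers are mixed on top of earlier ones, a pixel with G = 1 shows pure metal regardless of its R value, which mirrors the "exposed metal cuts through paint" logic of a layer stack.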

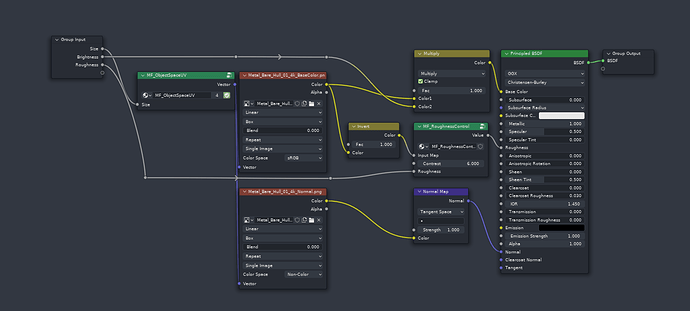

And then, at an even lower level of abstraction, are the basic materials themselves, once again organized very cleanly, with no confusing spaghetti:

This is the metal, for example:

Thanks to this workflow, creating new vehicles in exactly the same material style is trivial:

The same applies to buildings. Once the model is done, all I have to do is bring in the main building material node group, create one image texture for the RGB masks to paint on, and paint the areas where I want green paint, black paint, metal, or accent color. At my peak, I was able to make about one building a day, from start to finish, including the modeling:

For baking, I use the BakeWrangler addon, which lets me pack any texture/shader output I want into any channel of any output texture:
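The packing step can be pictured as routing single-channel maps into the channels of one output image. This is a conceptual sketch only, not BakeWrangler's API; the `pack_channels` function and the list-of-floats pixel representation are assumptions for illustration.

```python
CHANNELS = ("R", "G", "B", "A")


def pack_channels(sources, pixel_count, fill=(0.0, 0.0, 0.0, 1.0)):
    """Pack single-channel maps into RGBA pixel tuples (hypothetical helper).

    `sources` maps a channel name ('R', 'G', 'B' or 'A') to a list of
    floats, one per pixel. Channels without a source keep the fill value.
    """
    # Validate the routing before producing any output.
    for name, values in sources.items():
        if name not in CHANNELS:
            raise ValueError(f"unknown channel {name!r}")
        if len(values) != pixel_count:
            raise ValueError(
                f"channel {name} has {len(values)} values, expected {pixel_count}"
            )

    packed = []
    for i in range(pixel_count):
        pixel = list(fill)
        for idx, name in enumerate(CHANNELS):
            if name in sources:
                pixel[idx] = sources[name][i]
        packed.append(tuple(pixel))
    return packed


if __name__ == "__main__":
    # Two pixels: a mask in R and a second mask in G; B and A use the fill.
    print(pack_channels({"R": [1.0, 0.0], "G": [0.25, 0.5]}, 2))
```

For example, an occlusion/roughness/metallic map for a game engine would pass three sources keyed `R`, `G` and `B`.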

And with just a single click, I can always get a packed texture, in the same way I could in SP:

Obviously, there are several issues:

- Baking texture maps such as curvature should be easy; right now it’s hard. This is not a problem of missing layered texture painting. This is a problem of Blender’s very poor texture baking system, which needs to improve a lot.

- Packing and baking the output textures requires a third-party addon to be usable. Once again, a working texture baking system is IMO much more important than a layered texturing system.

- Node group encapsulation should be advertised much more than it is now. Hopefully Geometry Nodes will help with that.

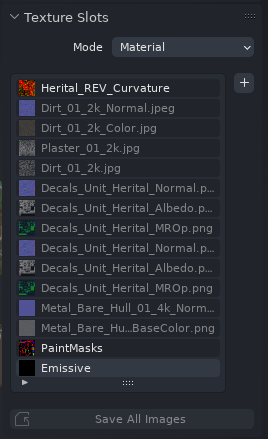

- Texture Paint mode should work better with the Shader Editor. Image Texture nodes should have a button that, when clicked, starts painting directly on that texture in the viewport. This panel in particular:

is currently just one giant, poorly designed source of user errors.

- To have full SP-like PBR texturing capability, Blender needs the nodes I mentioned above, which would allow us to modify only specific channels of the PBR struct anywhere along the shading network. Right now, we can only mix entire PBR structs. We cannot, for example, multiply only the base color or mix only the normal channel.
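To make the missing capability concrete, here is a plain-Python sketch of what per-channel operations on a PBR struct could look like. The `PBR` dataclass, `multiply_base_color` and `mix_normal` are hypothetical stand-ins for the proposed nodes, not existing Blender nodes; the normal blend is a simple lerp-and-renormalize, a simplification compared with dedicated normal-blending techniques.

```python
import math
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PBR:
    """Hypothetical, reduced PBR struct."""
    base_color: tuple  # linear RGB
    roughness: float
    normal: tuple      # unit vector


def multiply_base_color(m: PBR, factor: tuple) -> PBR:
    """Multiply only the base color; every other channel passes through."""
    new_color = tuple(c * f for c, f in zip(m.base_color, factor))
    return replace(m, base_color=new_color)


def mix_normal(a: PBR, b: PBR, t: float) -> PBR:
    """Blend only b's normal into a (0 = a, 1 = b), then renormalize."""
    n = [x * (1.0 - t) + y * t for x, y in zip(a.normal, b.normal)]
    length = math.sqrt(sum(v * v for v in n)) or 1.0  # avoid division by zero
    return replace(a, normal=tuple(v / length for v in n))


if __name__ == "__main__":
    flat = PBR((0.5, 0.5, 0.5), 0.25, (0.0, 0.0, 1.0))
    bumpy = PBR((1.0, 1.0, 1.0), 0.75, (1.0, 0.0, 0.0))
    print(multiply_base_color(flat, (1.0, 0.5, 0.0)))  # roughness, normal untouched
    print(mix_normal(flat, bumpy, 0.5))                 # color, roughness untouched
```

The point of the sketch is that each operation returns a full struct while touching a single field, so such nodes could be chained anywhere in a network without disturbing the other channels.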

But all in all, I could never even dream of achieving this degree of flexibility and workflow cleanliness with SP’s layer-based workflow. The mere fact that I can share live materials between different files, and therefore update materials in an arbitrary number of files at once, is great.

The biggest problem here is that, since SP is unfortunately the best we currently have in terms of PBR texturing, most of us think inside the constrained box of workflows we know from SP, while with just a few usability improvements, Blender could supersede SP in almost every way.
