But it exists !!!

Maybe, I just wanted to make this clear, the importance of being able to access data of ANY result or part of the tree, not just pre-defined things

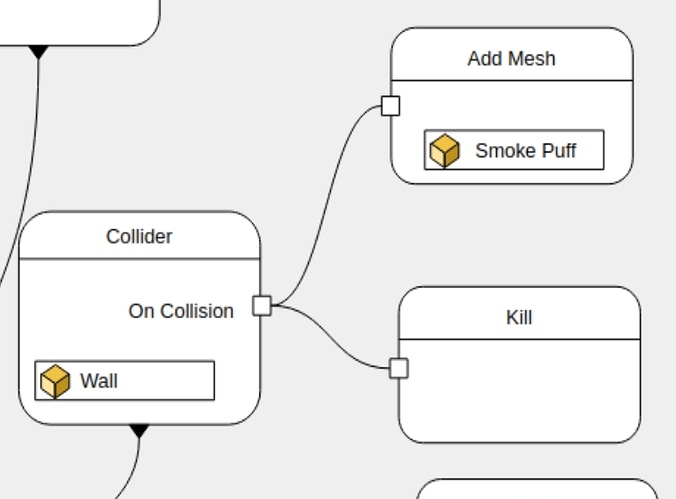

I am still rather concerned about this. The idea that a simulation can trigger many callbacks that immediately execute a chain of logic, doing things like adding new meshes to the scene, sounds like it will create a debugging nightmare. The user should be able to defer and batch process these contact points for a specific iteration and, say, trim them down and feed them in as the initial points of another solver/instancer/frame feedback loop, instead of doing work on them one by one immediately. Also, if we go the route of events without a robust debugging solution, they will be impossible to work with at scale. Even then, existing solutions for debugging this type of logic will not scale… Having to breakpoint millions of particles is not a realistic option.
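To make the deferred idea concrete, here is a rough sketch of what I mean. Nothing here is Blender API: `Contact`, `ContactBuffer` and `trim_weak` are hypothetical names made up for illustration. The solver only records contacts during the step, and the user processes the whole batch afterwards.

```python
from dataclasses import dataclass


@dataclass
class Contact:
    point_id: int    # hypothetical particle index
    position: tuple  # (x, y, z) where the contact happened
    impulse: float   # strength of the hit


class ContactBuffer:
    """Collects contacts during a solver step instead of reacting to each one."""

    def __init__(self):
        self._pending = []

    def record(self, contact):
        # Called by the solver; does no work beyond storing the contact.
        self._pending.append(contact)

    def flush(self):
        # Hand over everything gathered this iteration and start fresh.
        batch, self._pending = self._pending, []
        return batch


def trim_weak(contacts, min_impulse):
    """Batch filter: keep only contacts strong enough to matter."""
    return [c for c in contacts if c.impulse >= min_impulse]


buffer = ContactBuffer()
buffer.record(Contact(0, (0.0, 0.0, 0.0), 0.2))
buffer.record(Contact(1, (1.0, 0.0, 0.0), 3.5))
buffer.record(Contact(2, (2.0, 0.0, 0.0), 1.1))

# After the iteration: process the whole batch at once and reuse the
# surviving positions as seed points for another solver or instancer.
batch = buffer.flush()
seeds = [c.position for c in trim_weak(batch, min_impulse=1.0)]
```

The batch is plain data, so it can be inspected in one place after the step rather than by breaking inside every callback.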

Noob question here regarding this same “On Collision”: for a while I have been looking for ways to do a “load/play a sound on dynamics collision” type of scenario.

Say a bunch of cubes colliding with the ground and producing “impact” sounds for each “registered” collision, and the answers were:

- somebody has to make an add-on for it.

- “fake” it in post.

- other obscure shenanigans…

But now I see this “On Collision” -> “add mesh” and “kill” and my brain is screaming “play sounds” “do something with the 3d speaker” “add some noise”, should I hold my breath about this “FINALLY” becoming a reality or should I just forget about it ?

Also, are there any plans for any kind of crowd simulation built into Blender? (logic, agents, behaviors, etc…)

That’s an interesting, REAL 3D sound creation capability. Maybe even play a random sound from a group of sounds.

Another thing related to Voidium’s comment. The user’s idea “on-collision: play sound” seems rather intuitive right?

Well, this is actually a deceptively complex problem. You probably really don’t want every rigid body to produce a sound whenever the physics engine signals that it makes contact with something using this naive approach… Not unless you want to blow out someone’s headphones. (A couple of spheres = dozens of contacts every frame, 24 FPS, you get the picture.)

In an interactive application that properly combines physics with interactive audio, there is often a queue system that keeps a running list of contacts, sorted and culled by audible relevance and by their lifetime during an interaction (hit, rolling, sliding, etc.), which then manages streaming in and mixing the audio.

So in this case a point cloud of contact points/pairs can still make a much more effective input to, say, a community (addon?) “3D Physics Audio” node than the event system, as it has more insight into the broader picture of the resulting simulation.
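As a rough illustration of why the whole contact set is a better input than single events, here is a minimal sketch of the culling step. The function name `audible_contacts`, the `(body_a, body_b, impulse)` tuple layout and the use of impulse as a stand-in for loudness are all assumptions for the example, not anything from an existing add-on or engine.

```python
import heapq


def audible_contacts(contacts, max_voices, min_impulse):
    """contacts: list of (body_a, body_b, impulse) tuples for one frame."""
    # Merge repeated contacts between the same pair, keeping the strongest.
    strongest = {}
    for body_a, body_b, impulse in contacts:
        pair = tuple(sorted((body_a, body_b)))
        if impulse > strongest.get(pair, 0.0):
            strongest[pair] = impulse
    # Drop pairs too quiet to hear, then keep only the loudest few.
    loud = [(imp, pair) for pair, imp in strongest.items() if imp >= min_impulse]
    return [pair for imp, pair in heapq.nlargest(max_voices, loud)]


frame = [
    ("cube1", "ground", 0.4),
    ("cube1", "ground", 2.0),   # same pair again, stronger
    ("ground", "cube2", 5.0),
    ("cube3", "ground", 0.05),  # too quiet to bother with
    ("cube1", "cube2", 1.0),
]
voices = audible_contacts(frame, max_voices=2, min_impulse=0.1)
```

A per-contact event could not do this, because deciding which two pairs are the loudest needs all of the frame's contacts at once. A real system would also track contact lifetime (hit, rolling, sliding), which is left out here.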

Any nodes here will only be able to modify the pointcloud, not directly add anything to the scene.

Putting an Add Mesh into this graph is not the best example, but it is something that I expect will be possible through instancing on points as described in my previous comment.

In theory, if particles can instance objects, they can also instance speaker objects that play a sound. Or they could set some attribute on the points, which then later is used by modifier nodes to generate speaker objects. I don’t expect it to work in the first release of particle nodes though, but it should be compatible with the design.

Implementing a crowd simulation system is not a priority. Some basic crowd simulation may be possible to get working with the available particle nodes, but for native Blender support I would not expect it to happen soon; improving and nodifying other types of physics simulations will have higher priority.

SideFX (SESI) have been replacing a lot of DOP functionality with pure VEX for a long time. In v13 they introduced a new particle system that was purely VEX, and in v14 they built their PBD/constraint grain solver using VEX.

Lastly, their cloth, softbody, granular and general “unified” new physics tool Vellum is XPBD based and done almost entirely in VEX.

This grants power-users direct access to modifying the solvers with code and tools they’re already familiar with from the modeling aspect.

Sure, there are nodes that use C++ and the SDK for performance reasons, but the foundation is the processing language that now powers not just most of the procedural modeling, shading and animation side of things but also their solvers and dynamics tools.

I’m obviously a fan of both tools here and I think the Blender way is definitely not to outright copy anything. I think that would be a disservice to what Blender has achieved on its own, but there are ideas that have already been discussed and implemented at length in various other tools and domains.

PS. I realize I’m not adding much of value to the current discussion so I’ll be quiet

Any nodes here will only be able to modify the pointcloud, not directly add anything to the scene.

So the event system is only able to use a limited selection of nodes to modify the contents of the sim point cloud internally, based on a change of state and working on a single element? Users might find this a little limiting; understandable given what could go wrong, but limiting. Since the event system atomizes those points during execution as part of its design, it would be difficult to do things like generate clusters or introduce spatially aware behavior each iteration between the (hardcoded w/ callbacks) steps of the solver. Unless I am missing something?

Also you would still need some kind of debugging infrastructure for the event system and that’s where we run into the same potential debug tool scalability problems as before.

At some point we may support plugging generic geometry nodes into the solver, that at every time step get a pointcloud as input and output a modified pointcloud.

However the plan is indeed to start with nodes that work on the level of individual particles, at least from a user point of view. Internally the nodes would likely be implemented by working on the entire array of particles with some particles being masked out. This is quite similar to shading languages (e.g. OSL) and the way SIMD is handled there.
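A minimal sketch of that masking idea, in plain Python rather than any actual Blender code (the helper `apply_masked` and the attribute names are made up for illustration): the user describes a per-particle rule, while the implementation walks the whole array once and only changes the entries selected by the mask.

```python
def apply_masked(values, mask, fn):
    """Walk the whole array, applying fn only where mask is True."""
    return [fn(v) if m else v for v, m in zip(values, mask)]


ages = [0.5, 2.0, 3.5, 1.0]
sizes = [1.0, 1.0, 1.0, 1.0]

# User-facing rule, described per particle: "if age > 1.5, halve the size".
mask = [age > 1.5 for age in ages]

# Internal execution: one pass over all particles, with some masked out.
sizes = apply_masked(sizes, mask, lambda s: s * 0.5)
```

Both the mask and the result are ordinary arrays over the whole pointcloud, so they can be inspected like the output of any other node.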

In terms of debugging, if it’s still just executing nodes in a particular order on an entire pointcloud, it shouldn’t be harder to debug than any other node.

For particles it shouldn’t be much more difficult with that design; however, it would be nice to see how events fit into the broader picture of the everything nodes project when users are able to expose them from preset nodes. I feel that as a mechanism events can still get out of control, with their additional dimensions of logic execution, when the scope expands. It would be nice to see the particle graph turned into modular update functions working on point cloud I/O.

I do love any plans or ideas for making Blender more powerful or flexible to achieve more advanced results than what is already possible. I think everyone probably wants that.

But having said that, I can’t help but wonder and ask, is this system, and the ‘everything nodes’ concept in general, still going to be simple enough and user friendly enough for average or new users to get to grips with? Are simple things still going to be quick to do?

The whole ‘Everything nodes’ system is great in theory and I’m keen to see where it goes, but take for example a simple use case like wanting to put a bevel modifier on a cube. Is that still going to be simple and quick to do after ‘Everything nodes’? Right now it’s just a matter of going to the modifiers, choosing ‘Add Modifier’ and ‘Bevel’, then adjusting some parameters.

Take particles, for example: it’s relatively straightforward to just create a particle system, choose some parameters and hit play. Will it still be relatively quick and simple to achieve those kinds of basic outcomes?

What’s the plan to balance power and flexibility with ease of use and speed?

Some Blender users still find working with the shader node editor a fuss, and it was only after adding the principled shader that materials started to feel quick and easy to work with again, to make it possible to achieve simple results quickly while retaining the ability to achieve complex results powerfully.

Just to be clear, these are genuine questions and concerns and I am still totally on board with the idea of ‘everything nodes’. I’m just wondering what the plan is to deal with what could (emphasis on ‘could’, I don’t know!) become an issue of conflicting priorities when balancing those two desires within the system?

Should something like the existing systems continue to exist and the ‘everything nodes’ system be added on top as a kind of super powerful simulation system for generating more complex results?

Or will it still be possible to achieve quick simple results with the everything nodes system?

Will we need some kind of ‘principled shader’ node for particle systems that has a few common inputs/outputs/variables for quickly achieving basic particle systems, with just more options for more complex results?

Now, since the particle nodes update, it should be possible, though not with collections; it will be linked through a modifier that refers to one node group or particle simulation output.

For simplicity I guess they should have some quick effects like those in Houdini, or a way to save presets,

and when the asset manager arrives maybe the presets could be “assets”

where you could just drop a simulation on an object and voilà! Maybe something like the quick smoke, quick liquid.

Thank you.

If I could give you some advice it would be to consider the meaning of the wires and how abstract/concrete they are. This should inform the design of the node network. I’ve used systems from along the entire spectrum and the more concrete ones are always much easier to use, understand, interpret and debug from a user’s perspective. It may be tempting to see these as small, arbitrary design choices but in my experience it really makes all the difference.

What I mean by that is graphs that are more abstract use wires to represent more generalised abstract relationships. This could mean a kind of callback or function like you have here, or a nebulous concept of data without being specific about what the data actually is.

On the other hand in some systems a wire represents (and only represents) a conduit for data that’s consistent in structure throughout the entire network. With these systems it’s much much easier for the user to know what is going on, especially when you have tools to be able to drop in and inspect and visualise the contents of those wires at any point in the network. It’s also a much simpler mental construct - rather than ‘anything goes’, you always know that the same structure of data flows along the wires, and nodes ingest that data, do something to that data, then output a modified version of that data that flows down the wire to the next node.

In the context of dynamics you could interpret that as the nodes attaching data to geometry that represents the forces acting upon it, records of past interactions, or references to other objects like colliders. Then at the end all that data flows into the solver, where the solver then modifies other aspects of that bundle of data (positions, velocities) and updates for the next timestep.

This could mean a kind of callback or function like you have here, or a nebulous concept of data without being specific about what the data actually is.

This! 100%!

On the concept of giving concrete meaning to nodes, I am kind of worried about the fact that “influencers” are singular nodes that encase rather abstract yet specific concepts… Like what is this “gravity” node in the design document? Is it just some scalar parameter you plug into the solver node and it infers context and treats it as a parameter or is it a callback that applies procedures on to a generic set of data?

It sounds straightforward: “gravity” abstracts to moving things down on an assumed infinite plane… Well, what if we are simulating an asteroid field, or satellites orbiting a donut planet or space station? You are no longer working on the assumption the previous design has given… Now the gravitational model needs to be provided by the user. How do influencers accommodate this? Could you, say, take this “Brownian Motion” node and apply its internal logic to something like the points on a cloth/fluid sim, or is it just an inferred component of Blender’s existing mental model of what a particle system is, just laid out as nodes? If they are just parameters then influencers should just be socket inputs.

Influences can add points, remove points, or modify point attributes of pointcloud geometry. For a gravity node and other force nodes, that means writing to a point attribute named Force, which is created and set to zero at the start of every time step, after which influences are executed.
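As a rough sketch of that order of operations (plain Python, not the actual implementation; the dictionary layout, the `step` and `gravity` names and the semi-implicit Euler integration are assumptions for illustration):

```python
def gravity(points, g=(0.0, 0.0, -9.81)):
    # Uniform "down" gravity: adds mass * g to each point's Force attribute.
    for p in points:
        p["force"] = tuple(f + p["mass"] * gi for f, gi in zip(p["force"], g))


def step(points, influences, dt):
    # 1. Create the Force attribute and set it to zero for this time step.
    for p in points:
        p["force"] = (0.0, 0.0, 0.0)
    # 2. Execute the influences; force nodes accumulate into Force.
    for influence in influences:
        influence(points)
    # 3. Integrate (semi-implicit Euler, chosen here only for brevity).
    for p in points:
        accel = tuple(f / p["mass"] for f in p["force"])
        p["velocity"] = tuple(v + a * dt for v, a in zip(p["velocity"], accel))
        p["position"] = tuple(x + v * dt for x, v in zip(p["position"], p["velocity"]))


points = [{
    "mass": 2.0,
    "position": (0.0, 0.0, 10.0),
    "velocity": (0.0, 0.0, 0.0),
    "force": (99.0, 99.0, 99.0),  # stale value, wiped at the start of the step
}]
step(points, [gravity], dt=0.5)
```

Under this reading, an influence is just something that edits the pointcloud, so a user-supplied gravitational model (say, attraction towards a planet's centre) would be another function that writes to the same Force attribute.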

When can we expect the results of the workshop to be implemented?

Can this design be exposed on the blog to get broader and further feedback ?

Only a very few people are aware of this thread and you may miss some needed feedback.

Also it looks like no very senior FX TDs were consulted at any point in the decisions, and that is quite a shame, because they know better than anybody how things should work.

I am no expert in FX but it feels like this design is not agnostic enough.

Influencer is quite a vague concept to me.

Good point, but there is also the question of when the right time is for feedback from external people to make sense