The xr-actions branch now has default actions, since they were requested in the patch review.

However, these are not present in the old 2.92 experimental build so for now you will need to build the branch from source to use them.

Hi Muxed-reality! Thanks a lot for the effort! I’m super excited about this addon. I tried the compiled version, setting up some simple actions, and I’m super hyped about the possibilities. For this reason I compiled the xr-actions-D9124 branch; the build finishes OK and Blender runs perfectly, but I can’t see the default actions. I’m pretty sure that I’m doing something wrong, but I don’t know what it could be.

- To build this version, is the correct procedure to check out the xr-actions-D9124 branch and run “make full”?

- Is it necessary to do anything more inside Blender?

Thanks a lot for any help

Hi @Santox, very sorry for the late reply. Didn’t notice your message until now.

If you are building from source, in addition to checking out the xr-actions-D9124 branch of blender, you also need to check out the xr-actions-D9124 branch of the addons submodule.

Alternatively, there is now an updated build for 2.93 here: https://builder.blender.org/download/branches/

Also, here are a few steps to get started:

Hi Muxed Reality! Thanks a lot for the answer. Yes, some weeks ago I found the new compiled 2.93 build of xr-actions and downloaded it. I have only played with it a little, but I’m absolutely amazed by the possibilities. I don’t have much time at the moment, but I think I will have more in a couple of weeks, and I intend to test that version in depth. Thank you very much for your work. I will be back here to report…

Greets!

I just tested the latest build of the XR-Actions branch.

I’m impressed by how well it works!

I used it with an Oculus Quest 2 with Oculus Link (with cable) at 120Hz and it’s a pleasure!

I miss having at least the tools at hand, or at the very least being able to enter other modes and use the selected tool to do some basic sculpting. That doesn’t seem to be possible yet, am I right?

Anyways, WELL DONE!

Thanks! Glad that you enjoy it

Yes, you’re correct that using tools and modes other than object mode is not currently supported. The focus for the time being is scene navigation, with some basic object selection/transform for scene arrangement applications.

However, once all this is (hopefully) incorporated into master, the next milestone for the Virtual Reality project will be supporting the paint/sculpt/draw tools, at least at a basic level.

That’s awesome

Thanks!

Hi

@muxed-reality

and other VR Blender enthusiasts.

I am using the current XR Dev branch build to prototype a VR collab session.

It’s all about the simplest possible navigation for our users.

Therefore I placed

The landmarks are populated with avatars sitting inside and standing around the car, to provide a visual representation and a raycast target…

With the Vive controllers the user can now mark an avatar and gets teleported to one of the standard positions around the car and inside the car.

This works great and is the easiest navigation for first-time users.

It also provides standard teleport positions for discussing the car design from predefined locations.

Question 1:

All the avatars are inside one collection, and I need to make them visible only while the Vive trigger is pulled.

pull trigger: collection with avatars is set visible

release trigger: teleport to selected Landmark Avatar, set collection with avatars invisible

Do you perhaps have a minimal script example showing how I could set the visibility of a collection from a Vive controller action (pulling the trigger with the index finger)?

Tried hard.

Question 2:

I attached simple Suzannes to both hands and the head via motion tracking,

so that the user is visible in the scene.

Then I use Ubisoft Mixer to connect the Blender sessions,

starting VR in both scenes.

Now the avatars of the user connected via Mixer (Suzannes at the hand and head positions) should be visible and move, so that you see where your collab partner is in the scene and can discuss the interior and exterior design. The hand visualization alone is very helpful here to see what the other user is pointing at.

This is the simplest VR collab mode: see where your connected partner is and where he is pointing with his hands. Speech is delivered via an MS Teams or Skype session.

Is something like this planned from your side to be supported by default?

Ubisoft Mixer is no longer maintained.

I am just searching for a maintained connection implementation with this minimal sync of hand and head positions.

Any tips on how to get this would be great.

Thanks.

Hi, thanks for trying out the XR Dev branch.

Here’s a minimal operator for setting collection visibility:

    import bpy


    class ShowCollectionOperator(bpy.types.Operator):
        """Show or hide a collection in the viewport by name."""
        bl_idname = "scene.collection_show"
        bl_label = "Show (or Hide) Collection"

        name: bpy.props.StringProperty(
            name="Collection Name",
            default=""
        )
        show: bpy.props.BoolProperty(
            name="Show",
            default=True
        )

        def execute(self, context):
            # .get() returns None for an unknown name instead of raising KeyError.
            col = bpy.data.collections.get(self.name)
            if col is None:
                return {'CANCELLED'}
            col.hide_viewport = not self.show
            return {'FINISHED'}


    bpy.utils.register_class(ShowCollectionOperator)

After running this in the script editor, load the default VR action maps, then copy the teleport action. For the new action, replace the operator with scene.collection_show and change a few more properties as shown below:

Copy this new action (teleport_show_collection) and repeat for the hide collection case:

Note: The names of the new actions are intentional, since collection hiding needs to happen after the teleport raycast. To ensure this, just prefix the new names with the action they should follow (in this case teleport).
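To see why the hide action has to be named after teleport, here is a small stand-alone simulation. It rests on two assumptions that the note only hints at: that actions bound to the same trigger release run in name order, and that the teleport raycast only hits avatars that are still visible. The `state` dictionary and the handler functions are hypothetical stand-ins, not Blender API.

```python
def teleport(state):
    # Assumption: the raycast can only hit avatars that are still visible.
    if state["avatars_visible"]:
        state["position"] = state["selected_landmark"]


def hide_collection(state):
    state["avatars_visible"] = False


def run_release_actions(actions, state):
    """Run all release handlers in name order (the assumed dispatch order)."""
    for name in sorted(actions):
        actions[name](state)
    return state


def new_state():
    return {
        "avatars_visible": True,
        "selected_landmark": "driver_seat",
        "position": "start",
    }


# Hide action prefixed with "teleport": it sorts after teleport, so the
# raycast still sees the avatars.
good = run_release_actions(
    {"teleport": teleport, "teleport_hide_collection": hide_collection},
    new_state(),
)

# Hide action named "hide_collection": it sorts before teleport, so the
# raycast finds nothing.
bad = run_release_actions(
    {"teleport": teleport, "hide_collection": hide_collection},
    new_state(),
)

print(good["position"], bad["position"])  # driver_seat start
```

With the prefixed name the user ends up at the selected landmark; with the unprefixed name the avatars are already hidden when the teleport runs.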

In response to your second question, there currently aren’t any plans to support networked VR collaboration in Blender natively. However, if any developer would like to contribute, it would be more than welcome.

Thanks a lot for this detailed help and the code snippet.

I will upload the project with the avatar-based teleport to GitHub in the next few days.

I will also try to sync the controllers and head position per Blender session via Multiuser from Swann Martinez.

I use the EEVEE Racer scene from katarn66 and integrated some controllers and a headset as Blender assets with CC Attribution.

Here is a little simulated preview of how the VR collab should look when finished.

The green user sees the yellow user (head position and both hand positions) in Blender 1.

The yellow user sees the green user (head position and both hand positions) in Blender 2.

Hi,

@slumber

with the new motion tracking feature I am now able to move the head position and hands in a VR session.

I also did some initial tests to sync two or more users.

Could you give me some tips on the easiest and most stable way to sync the head and both hand positions with your MultiUser?

Here is the simulation to illustrate what would be needed…

Blender 1 (yellow): head / hand left / hand right

master

Blender 2 (green): head / hand left / hand right

joined the session

Only the positions of head / hand left / hand right should be synced.

All other MultiUser features could be deactivated.

In the current MultiUser implementation only the camera wireframe is synced.

I set the delay to 0. The synced movement speed would be fast enough.

Is there an easy way to add my head / hand left / hand right positions to the sync?

Hi !

Thank you very much for exploring collaborative VR in Blender and for your feedback on the initial tests!

The multi-user Python API provides a function update_user_metadata(repository, the_custom_data_dictionnary) to sync any user-related metadata (code example).

Once published by a client, this data can then be read on each client from the field session.online_users[usernames].metadata[your data fields] (code example).

Since the multi-user doesn’t have dev docs yet, I can help you implement it. Can you tell me precisely which data fields to synchronize? (Would the viewer_pose_location, viewer_pose_rotation, controller_location and controller_location attributes be enough?)

This could be part of an experimental branch of the multi-user
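As a rough illustration of the data flow described above, the sketch below packs head and controller poses into a plain dictionary and picks out the other users' data on the receiving side. The field names are taken from the post; the helper names, the nested-dictionary layout of `online_users` and all sample values are assumptions made for the example, and the real multi-user API may expose this differently.

```python
import json


def build_vr_metadata(head_location, head_rotation, controller_locations):
    """Pack head and controller poses into a JSON-friendly dictionary."""
    return {
        "viewer_pose_location": [float(v) for v in head_location],
        "viewer_pose_rotation": [float(v) for v in head_rotation],
        "controller_location": [
            [float(v) for v in location] for location in controller_locations
        ],
    }


def remote_vr_metadata(online_users, local_username):
    """Return the VR metadata of every other user, keyed by username."""
    result = {}
    for username, user in online_users.items():
        metadata = user.get("metadata", {})
        if username != local_username and "viewer_pose_location" in metadata:
            result[username] = metadata
    return result


# Stand-in values; inside Blender these would come from the running VR session.
payload = build_vr_metadata(
    head_location=(0.0, -1.5, 1.7),
    head_rotation=(1.0, 0.0, 0.0, 0.0),
    controller_locations=[(-0.3, -1.2, 1.1), (0.3, -1.2, 1.1)],
)

# In the add-on, publishing would go through the function named above:
# update_user_metadata(repository, payload)

# Simulate what another client might see after a JSON round trip.
online_users = {
    "yellow": {"metadata": json.loads(json.dumps(payload))},
    "green": {"metadata": {}},
}
others = remote_vr_metadata(online_users, local_username="green")
print(sorted(others))  # ['yellow']
```

Keeping the payload to plain lists of floats means only interaction data travels over the connection, not geometry.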

Thanks for the fast answer. Testing your MultiUser is pure fun.

I will provide a clean example blend on GitHub like the screenshot above.

The Vive controllers and the Suzanne head already move through the motion tracking feature once you start VR.

My plan:

There is a collection, VR User, with the meshes

VR_USER

Only the position/rotation of these 3 meshes per user should be in sync.

Every joined user has an instance of this VR_User collection, which is in sync with the other collab members.

So everyone sees where the other joined users are and what they are pointing at with their hands.

That’s only my first idea; perhaps you have a preferred, simpler method?

Speech is delivered over an MS Teams or Skype session running in parallel. We use it daily.

So with this you could already have a full VR review of the scene.

You see where the joined users are, what they’re looking at and what they show with their hands.

The speech allows full communication and discussion.

My first focus is outstanding stability in this VR collab session.

Hence the reduction to this minimal communication concept.

This could then be extended to live VR collab modeling through other XR Action functions (edit mode / pick vertices with controller raycast / move). The devs here do great stuff to finally make this possible.

But first I would like to focus on absolute stability and ease of use.

Also to think about (optional):

The simplest setup could also be that every user who joins the session must have the file (screenshot) loaded from a shared folder.

Only the head and hand positions are then kept in sync with MultiUser.

This would be an alternative method that fulfills the strictest data security policies for collab sessions through firewalls, where sharing geometry data over the connection is not allowed.

Only the interaction data is transferred.

But this would just be a stronger simplification of what you have already done.

Hi,

there is some progress on the example scene.

Yellow

The Vive headset and controller assets are in the Multi_User_Avatar collection (also visible in the Outliner).

They are driven through Motion Capture:

path drives object/empty

/user/head -> user_head

/user/hand/left -> user_hand_left

/user/hand/right -> user_hand_right

After starting VR, the Suzanne headset and the controllers move.

Could you give me some help on how to get them in sync for each user who joins the Multi User session?

I’m using the XR-DEV branch build from

You have to enable the VR Scene Inspection addon.

other functionality.

Thanks to @muxed-reality

RED

The Landmark Avatar collection is shown when you pull the trigger.

Then select the avatar and release the controller’s index finger button to teleport to this position. Releasing also hides the avatar positions.

The current file

All credits for the Racer scene go to katarn66.

I only added some new irradiance volumes and a higher-res IBL, changed the scene structure a little and added some Eevee/Cycles material switches to allow a more seamless switch from Eevee to Cycles.

@sasa42 Nice setup!

@slumber The multi-user data fields to synchronize would be the object transforms for the XrSessionSettings.mocap_objects collection property (which is currently only present in the XR Dev branch).

I’m not familiar with how the multi-user sync works, but accessing the fields would look something like this:

    import bpy

    session_settings = bpy.context.window_manager.xr_session_settings
    for mocap_ob in session_settings.mocap_objects:
        if mocap_ob.object:
            # Sync object location/rotation
            location = mocap_ob.object.location
            rotation = mocap_ob.object.rotation_euler
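Building on the loop above, one possible way to turn the mocap objects into something a sync layer could send is to copy each transform into plain lists. This is only a sketch: `collect_mocap_transforms` is a hypothetical helper, and the `SimpleNamespace` objects are stand-ins so it can be tried outside Blender. Inside the XR Dev branch you would pass `session_settings.mocap_objects` instead.

```python
from types import SimpleNamespace


def collect_mocap_transforms(mocap_objects):
    """Return {object name: {"location": [...], "rotation_euler": [...]}}."""
    transforms = {}
    for mocap_ob in mocap_objects:
        ob = mocap_ob.object
        if ob is None:
            continue  # slot without an assigned object
        transforms[ob.name] = {
            "location": list(ob.location),
            "rotation_euler": list(ob.rotation_euler),
        }
    return transforms


# Stand-ins so the sketch also runs outside Blender.
head = SimpleNamespace(
    name="user_head", location=(0.0, 0.0, 1.7), rotation_euler=(0.0, 0.0, 0.0)
)
mocap_objects = [SimpleNamespace(object=head), SimpleNamespace(object=None)]

print(collect_mocap_transforms(mocap_objects))
```

Converting to lists keeps the result free of Blender types, which should make it easier to serialize.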

Thanks for your help!

Thanks. I’m trying hard to find the most sustainable way, with a motivating environment/example setup.

It would hardly be possible without your help.

Next week I will correct the pivots of the glTF controllers and headset. I will also try to make the index finger button and thumb pad visibly movable to have some visual feedback in the scene.

@slumber has already given me some help with the multi-user representation, but I need some time to get into it.

Perhaps he has some quick hacks/tips to make it happen faster and the right way after reviewing/trying the example file I uploaded to Google Drive (2 posts before).

A proposal to speed up XR Action/VR development in the scene and make it accessible to devs even with no headset or controllers attached.

Perhaps you already have or use something like this.

But by default you cannot start a VR session without a headset attached,

so I found no way.

VR simulation/XR Action debug mode:

(integrated as a checkbox under Start VR Session?)

This would greatly speed up VR/XR action development because you could fully test and develop in the VR scene without a headset and controllers attached.

Sorry for the delay, I had trouble running my Oculus Quest on Linux to test the scene inspection ^^

But it works now, and I’ll be able to explore different ways to replicate the information needed to represent the user in VR (thanks for giving the Python properties @muxed-reality and thanks for the test file and details @sasa42!)

I made a dedicated issue on the multi-user GitLab to easily track progress on the topic.

I’ll keep you informed of my progress, and as soon as I have a partially working version of the multi-user to test I’ll post it here.

That’s great.

I also joined your Discord.

The scene is currently optimized and set up for the HTC Vive / HTC Vive Pro / Varjo XR-3 with room-scale tracking. Hence the concept of one-click moves to avatar/landmark positions via the controller.

But I will try an Oculus Quest too next week.

Minor test scene optimizations will follow next week.

But the main structure will not change.

To trade render quality against speed, you can select all car objects via the car collection or under the car empty in the Outliner and

hit Ctrl + 1 (Subdivision Level 1) in object mode.

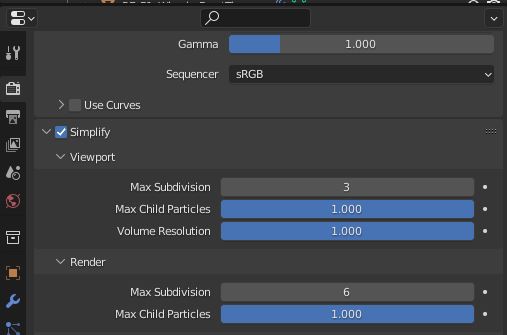

Or set Max Subdivision to 1 under Simplify/Viewport.

At the moment the levels are set individually so that round shapes look round (1 to 3), with the maximum set to 3. This is optimized for an RTX 2080 card.
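The Simplify setting can also be changed from a script. The sketch below assumes the scene's render settings expose `use_simplify` and `simplify_subdivision` (the viewport Max Subdivision value); `limit_viewport_subdivision` is a hypothetical helper name, and the stand-in scene is only there so the snippet runs outside Blender.

```python
from types import SimpleNamespace


def limit_viewport_subdivision(scene, max_level=1):
    """Enable Simplify and cap the viewport subdivision level for the scene."""
    scene.render.use_simplify = True
    scene.render.simplify_subdivision = max_level
    return scene.render.simplify_subdivision


# Stand-in scene so the sketch runs outside Blender; inside Blender you would
# pass bpy.context.scene instead.
fake_scene = SimpleNamespace(
    render=SimpleNamespace(use_simplify=False, simplify_subdivision=6)
)
limit_viewport_subdivision(fake_scene, max_level=1)
print(fake_scene.render.use_simplify, fake_scene.render.simplify_subdivision)
```

Unlike Ctrl + 1, which changes the modifier level on the selected objects, this caps the level for the whole viewport and leaves the per-object settings untouched.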

I will also add a minimal car rig, so a collaborative VR test drive with 3 users in a one-seater car for a few meters is pretty close :)

A pit stop where 2 other users change the wheels, or go to edit mode and change the tire width as well.

Not because it would be needed, more because we can :)