The current build times and kernel sizes are already problematic, and we’re not likely to double them for one feature. Additionally, 8 wavelengths add significant memory overhead on the GPU and require more memory bandwidth. So before we consider this, it should be shown to decrease noise enough on production scenes to make up for the increased render times and memory usage.

I think 4 wavelengths has been shown to provide reasonable noise performance with a much less significant memory impact, so unless 8 gives much better noise performance in the majority of scenes (there will always be edge cases where 8 performs much better), I think 4 would be a better default choice.

Why keep RGB and spectral side by side then? Why not just scrap RGB when spectral is obviously the way forward? Is it too early to consider this? Sorry if this has been answered before.

From what I have seen in previous spectral branches, 8 wavelengths would give an almost color-noise-free render right from the start, while 4 wavelengths seems to be faster but with some color noise present. Can this be a runtime setting with the default set to 4?

Some people might be doing, say, NPR and consider spectral overkill. I guess it depends on how much the community wants to keep the RGB renderer. Think about it: replacing the RGB renderer is so much easier than having it side by side. No need to worry about installation size anymore, no need to worry about potential issues with RGB-incompatible spectral nodes anymore, etc. But there are people who still want the RGB renderer, even if just for backwards compatibility. And there are people who don’t agree with the increased installation size of the side-by-side direction as well.

I am personally fine with either direction though, side by side or just spectral.

We need to see renders of our benchmark scenes with time/memory statistics, not anecdotal evidence.

We have a benchmark script to compare equal time renders. I’ll check that once there is a patch submitted with actual spectral rendering, and then we can decide from there.

Most of the benchmark scenes afaik are unlikely to benefit significantly from spectral rendering. I suspect the Sprite Fright scene might show some appreciable differences based on the relatively high saturation colors of that though…

I don’t think this necessarily is gonna do much for noise reduction. It’s gonna be better for correct hues in deeper bounces though, avoiding a bias towards the primaries, and allowing the correct handling of deeper saturated colors. It may therefore be more relevant together with recent in-progress additions that are better at capturing relatively complicated deeper paths to get more accurate indirect light…

The concern is not “what good will spectral bring”, I believe, but the performance impact of having 8 wavelengths vs 4 wavelengths per ray. If 8 wavelengths reduce color noise enough compared with 4 wavelengths, it might justify the performance overhead.

Right, yeah, that’s fair. I suspect 4 will be the way to go. At least from what I recall in the old builds, color noise really wasn’t that big a deal at either four or eight wavelengths. It was essentially non-existent at 8 and very low at 4. Mostly an issue for really low sample counts.

Looks like @Jebbly’s work on Many Lights may add extra complications for the Spectral branch (although I suspect the Spectral treatment would actually end up simplifying this as the plan already is to weigh by how well we perceive different wavelengths which ought to be exactly the kind of weighting the separate channel consideration is going to want to approximate)

Agreed, it sounds like the sort of thing which might take some time to understand, but could potentially actually be simplified by spectral rendering. Thanks for the heads up.

Here are 96 pages of course notes (with 92 references if you want more material😉) for the Spectral Rendering course at this year’s SIGGRAPH, published as Free Access by ACM.

https://dl.acm.org/doi/10.1145/3532720.3535632

Abstract

Compared to path tracing, spectral rendering is still often considered to be a niche application used mainly to produce optical wave effects like dispersion or diffraction. And while over the last years more and more people started exploring the potential of spectral image synthesis, it is still widely assumed to be only of importance in high-quality offline applications associated with long render times and high visual fidelity. While it is certainly true that describing light interactions in a spectral way is a necessity for predictive rendering, its true potential goes far beyond that. Used correctly, not only will it guarantee colour fidelity, but it will also simplify workflows for all sorts of applications.

Wētā Digital’s renderer Manuka showed that there is a place for a spectral renderer in a production environment and how workflows can be simplified if the whole pipeline adapts. Picking up from the course last year, we want to continue the discussion we started as we firmly believe that spectral data is the future in content production. The authors feel enthusiastic about more people being aware of the advantages that spectral rendering and spectral workflows bring and share the knowledge we gained over many years. The novel workflows emerged during the adaptation of spectral techniques at a number of large companies are introduced to a wide audience including technical directors, artists and researchers. However, while last year’s course concentrated primarily on the algorithmic sides of spectral image synthesis, this year we want to focus on the practical aspects.

We will draw examples from virtual production, digital humans over spectral noise reduction to image grading, therefore showing the usage of spectral data enhancing each and every single part of the image pipeline.

Sampling and Re-Sampling in Spectral Rendering (Jean-Marie Aubry and Jiří Vorba, 25 minutes)

The wavelength dimension of integration in a spectral renderer necessarily increases the noise of any Monte Carlo technique; it also complicates the implementation of common noise-reducing sampling techniques. In this course, we will show how the non-uniform extension of the “hero wavelength” method interacts with multiple importance sampling and also resampling techniques. This extension is a requirement for spectral guiding which is a technique to optimize importance sampling of the multiscalar wavelength, based on initial estimation of the spectral radiance received at a camera pixel (for path tracing) or of the spectral importance received at a light (for light tracing). It is implemented in Manuka and currently being tested for production.

If this has anything to do with path guiding, it may indeed be a thing to consider. And the multiple importance part of that is probably also gonna be relevant to the multi light sampling

In the second part, we explore application of ReSTIR (Reservoir-based Spatio-Temporal Importance Resampling) for sampling of direct illumination within spectral renderer. This requires extending the state-of-the-art resampling framework to account for sampling over wavelengths so that previous samples are reused correctly.

I think reservoir sampling is also relevant to the many lights branch.

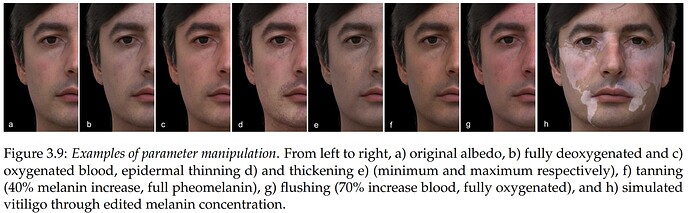

Another really cool thing is the spectral skin model. Having that isn’t something we necessarily need from day 1, but once the basics work, I feel like that could very soon thereafter become a reasonable priority

Like, this is extremely flexible. You end up with five textures that together can be used to calculate the correct spectra per pixel, estimated from RGB values, and you can simply edit them to get the desired effects.

4.1.2 Hero Wavelength Sampling

Since Wilkie et al. [2014], we know how to sample a single (hero) wavelength to drive the light path, while computing the radiance simultaneously in multiple (hero + supplementary) wavelengths, without bias. In theory, almost any probability distribution could be used to sample the hero wavelength; in practice however, only the uniform distribution is used for rendering. That is because noise performance has been observed to degrade sharply when moving from single to multiple wavelengths, except when using the uniform sampling.

The reason for this is the following: according to Wilkie et al. [2014], supplementary wavelengths are translated (with periodisation over the range Λ of visible wavelengths) of the hero wavelength by constant shifts multiples of |Λ|/C, C being the number of channels. This ensures stratification of the wavelengths across the spectrum, thus much reduced colour noise. Note that if the hero wavelength is sampled uniformly, then so is each supplementary wavelength.

If one were to sample the hero wavelength with a better importance sampling than uniform, then the supplementary wavelengths would have distributions translated by the same shifts, which is a sure recipe for inefficient sampling (one of the wavelengths modes is bound to coincide with a region of low probability for the hero’s importance).
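For reference, here is a minimal sketch of the equidistant-shift scheme the excerpt describes: a uniformly sampled hero wavelength, with the supplementary wavelengths offset by multiples of |Λ|/C and wrapped around the visible range. The 380 to 730 nm range and the function name are assumptions made for illustration; they are not taken from Cycles or Manuka.

```python
import random

LAMBDA_MIN = 380.0  # nm, assumed lower bound of the sampled range
LAMBDA_MAX = 730.0  # nm, assumed upper bound of the sampled range


def hero_wavelengths(u, channels=4, lo=LAMBDA_MIN, hi=LAMBDA_MAX):
    """Map u in [0, 1) to a hero wavelength plus channels - 1 supplementary ones.

    Each supplementary wavelength is the hero wavelength shifted by a
    multiple of |Λ| / C, wrapping around at the end of the range.
    """
    width = hi - lo
    step = width / channels
    hero_offset = u * width  # hero position measured from lo
    return [lo + (hero_offset + j * step) % width for j in range(channels)]


# One set of four wavelengths for a single path; index 0 is the hero.
wavelengths = hero_wavelengths(random.random(), channels=4)
```

Because the shifts are constant, a uniform hero gives uniform supplementary wavelengths, which is the property the excerpt relies on.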

Ok that suggests the naive, simplest version of hero wavelengths isn’t gonna suffice. (Not sure if that’s still what we’re doing though)

If you’re gonna sample based roughly on visual sensitivity per wavelength, rather than uniformly, you’re really gonna want a nonuniform sampling approach.

They are also talking about next event estimation in there, which is gonna be valuable information too, I think

I’m somewhat confused by their implication that importance sampling the hero wavelength results in shifted importance of the remaining (non-hero) wavelengths. The approach we have taken is to first uniformly distribute the hero sample in the [0, 1] range, distribute the remaining samples uniformly around that, still in 0-1, then to map them to wavelengths according to the importance function chosen. This results in the distribution of the hero wavelength and the secondary wavelengths according to the importance function.

I may be misunderstanding the quote, but the approach we have taken so far doesn’t seem to imply “one of the wavelengths modes is bound to coincide with a region of low probability for the hero’s importance”.
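To make the approach described above concrete, here is a minimal sketch: stratify in [0, 1) first, then push every sample through the inverse CDF of the importance function. The tabulated piecewise-constant importance function, the 380 to 730 nm range, and all names are assumptions for illustration; this is not the actual branch code, and it assumes strictly positive weights.

```python
import bisect


def build_cdf(weights):
    """Cumulative distribution over equal-width wavelength bins."""
    total = float(sum(weights))
    cdf, acc = [], 0.0
    for w in weights:
        acc += w / total
        cdf.append(acc)
    cdf[-1] = 1.0  # guard against floating point drift
    return cdf


def invert_cdf(u, cdf, lo, hi):
    """Map u in [0, 1) to a wavelength distributed according to the bins."""
    n = len(cdf)
    i = min(bisect.bisect_right(cdf, u), n - 1)  # first bin with cdf[i] > u
    prev = cdf[i - 1] if i > 0 else 0.0
    frac = (u - prev) / (cdf[i] - prev)  # position inside bin i
    return lo + (i + frac) * (hi - lo) / n


def sample_wavelengths(u, weights, channels=4, lo=380.0, hi=730.0):
    """Stratify in [0, 1) with wrap-around, then warp each sample."""
    cdf = build_cdf(weights)
    return [invert_cdf((u + j / channels) % 1.0, cdf, lo, hi)
            for j in range(channels)]
```

With a flat importance function this reduces to the plain equidistant shifts. With a peaked one, every sample (hero and secondary) follows the same distribution, since the shift happens before the warp rather than after it.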

Ok

I think the regular Hero Wavelength algorithm as first conceived works by picking a Hero Wavelength and then putting a constant offset on that for the other wavelengths, making sure it wraps around. Not another uniform distribution. And that’s when the problem can occur.

If that’s not what you have done, then you gotta be careful that the distribution you chose instead actually satisfies properties such as detailed balance, which are necessary for the sampling to achieve the correct limiting result without bias.

They have a ton of references too. If what they write here (this is also just an excerpt) isn’t clear from all that, then check the references

Did some reading, turns out the bit that was quoted is just referring to a stage in the process of improving spectral importance sampling. This seems to describe the approach we’ve taken so far, though there’s a lot more we could be doing too.

4.2.1 Non-Uniform Wavelength Sampling

In the following, we propose a different way of obtaining a range of supplementary wavelengths, each following the same distribution as the hero wavelength and with similar stratification properties as the uniform translation.

I’m curious to see what the cost and impact of per-pixel spectrum estimation as a pre-process step in the render might be. I expect it would greatly improve noise performance for highly saturated scenes, but would have negligible impact on less saturated/spiky illumination/reflection spectra.

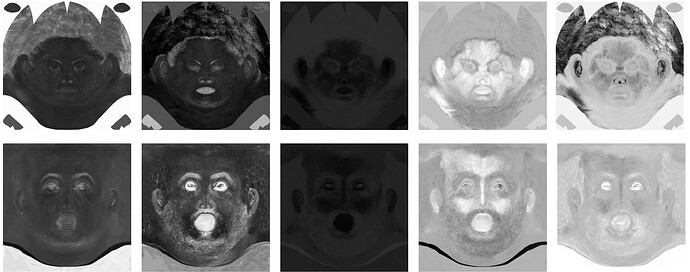

EDIT: Like this:

4.2.4 Histogram Estimation

To estimate p_opt in (4.12), we gather for each pixel a number of samples of that quantity. Following van de Ruit and Eisemann [2021], we apply a simple bilateral filtering while splatting and accumulate those splatting weights in fixed-bin histograms (one per pixel). In test renders of natural scenes, where the wavelength distribution tends to be naturally smooth, a relatively low resolution for that histogram was found to be quite sufficient, with the advantage of sparing memory and obtaining a robust estimator of the distribution even with relatively few samples (the initial wavelengths must be sampled using a fixed wavelength distribution, for instance uniform).
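A rough sketch of what such a per-pixel fixed-bin histogram could look like, leaving out the bilateral filtering step entirely. The class name, the bin count, the wavelength range, and especially the `uniform_mix` blend (added here so that no bin ends up with zero probability) are assumptions for illustration and are not taken from the course notes.

```python
class PixelHistogram:
    """Fixed-bin wavelength histogram for one pixel (hypothetical helper)."""

    def __init__(self, bins=8, lo=380.0, hi=730.0):
        self.bins = bins
        self.lo = lo
        self.hi = hi
        self.weights = [0.0] * bins

    def splat(self, wavelength, weight):
        """Accumulate a sample weight into the bin containing the wavelength."""
        t = (wavelength - self.lo) / (self.hi - self.lo)
        i = min(max(int(t * self.bins), 0), self.bins - 1)  # clamp to valid bins
        self.weights[i] += weight

    def bin_probabilities(self, uniform_mix=0.1):
        """Normalized bin probabilities, blended with a uniform distribution."""
        total = sum(self.weights)
        if total <= 0.0:
            # No samples gathered yet: fall back to uniform sampling.
            return [1.0 / self.bins] * self.bins
        return [uniform_mix / self.bins + (1.0 - uniform_mix) * w / total
                for w in self.weights]
```

The resulting probabilities could then serve as the tabulated importance function for wavelength sampling in later passes, after an initial pass that samples wavelengths uniformly.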

Yeah, there is a lot of good stuff in there to consider. First getting the basic version to work is sure to be useful. I’m hoping we’ll get another version we can test soonish again.

But perhaps, if you can roughly glean what it might take to do any of those other approaches, it might be good to structure the code with those possibilities in mind.

Hi guys, I have been reading this chat for some time and I thought it is time to give back to the community. I have completed a master’s thesis in which I did spectral ray tracing with the Blender Python API. It includes a whole imaging pipeline, but the main usage was creating training data for camera algorithms. You can find it here: Spectral Ray Tracing for Generation of Spatial Color Constancy Training Data - Trepo

It includes a comparison between real-life images and spectrally created ones under 6 different light sources. I am open to feedback and talks about it!

Have a great day!

However, it is experimented that even these small contributions affect physical realism. Therefore these settings such as “Noise Threshold”, “Light Threshold”, “Clamping”, and “Caustics” are turned off.

You turned off caustics? Blender up until very very recently (and certainly at the time of the spectral branch) couldn’t handle caustics well by any means, but turning those off would definitionally decrease realism, no?

The others I understand though

Only indirect clamping is turned on in order to avoid the fireflies effect.

Again, fully understand given the features to “properly” deal with this are so recent (literally still in experimental phase), but this definitely is not an ideal choice in principle, if realism is the goal

I can’t wait for a Spectral build that works alongside shiny new features such as Path Guiding and Many Light Sampling. Both of those should dramatically reduce variance and make all those cheats far less necessary for good results.

Perhaps retrying some of these experiments once a new spectral build is out would be a good idea

For example, if the aim is to create a sky spectral texture, an RGB sky texture image can be used as a reference. White cloud pixels can be replaced with cloud spectral reflectance, and blue sky pixels can be replaced with blue sky spectral reflectance. The new spectral texture image size is width × height × 63 and can be saved as 21 3-channel images, each with 3 channels of spectral reflectance from 395nm until 705nm.
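To illustrate the layout in that excerpt: 63 bands between 395 nm and 705 nm work out to a 5 nm spacing if the bands are evenly spaced, which is an inference from the numbers and not something the excerpt states. Under that assumption, the grouping into 21 three-channel images might look like this (the function name is hypothetical):

```python
def band_layout(start_nm=395, stop_nm=705, step_nm=5, channels_per_image=3):
    """Group evenly spaced spectral bands into per-image channel triples."""
    bands = list(range(start_nm, stop_nm + step_nm, step_nm))
    return [tuple(bands[i:i + channels_per_image])
            for i in range(0, len(bands), channels_per_image)]


layout = band_layout()
# layout[k] lists the wavelengths (in nm) stored in the three channels of image k.
```

Each render pass then carries three adjacent bands in its R, G and B channels, so the whole spectrum needs 21 passes.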

It would be kinda amazing to have some semi-automated tool for that, where you can just magic-wand-style select areas and assign materials they are supposed to be and out comes an appropriately spectrally upsampled image.

Spectral ray tracing is a computationally expensive process, depending on the number of rays traced for each pixel and the number of total meshes in the scene, rendering time varies.

As far as I know, mostly the raytracing itself should be the expensive bit, not really the spectral part. There is some overhead, but it shouldn’t be enormous. Although I can’t recall how big a deal it was in the latest builds before the rewrites began.

Anyway, very fun application! I like the idea of adapting whitepoints “locally” per pixel.

Just clarifying one point: I did not use the Cycles X Spectral Rendering build, but rather used normal Blender 3.0 and did the spectral ray tracing with the help of the Python API.

I think you may be right. I am not advanced in Blender and design. I have tried to learn as much about the “ray tracing” as possible, and since there are many hyperparameters in Blender, my idea was to give the same float spectral value to the 3 color channels and check in the output image histogram whether the channels are the same. It gave me the idea that Blender added nothing to the ray tracing calculation for different color channels. Now that I try again, I may have missed that caustics also did not change the histogram. I will see the effect of it on the realism of images and let you guys know. Thank you, very much appreciated.

There is another paper from which I also took a bit of the base idea for my thesis: https://onlinelibrary.wiley.com/doi/abs/10.1002/col.22535 . In their system, there is an automatic matching algorithm from RGB to spectra. But when I tried it on a Color Checker image, it performed poorly. My main aim was not to automate things if that meant I would lose a bit of realism. Since creating the image once was enough, realism was more important.

I did not use or implement hero wavelength spectral sampling, therefore the calculation is repeated 21 times to cover the whole spectrum, rather than 1 time as when rendering in RGB. I meant that it’s computationally expensive compared to normal RGB rendering, but you are right, I should have written it that way: that it is comparatively expensive.

Thanks a lot! Appreciate the feedback!

Oh, you rendered out 21 images, ok, that would be rather slow haha

Then you already potentially had access to the latest features, depending on how recently you rendered out stuff and what builds you used. I’m guessing it actually was a while ago though, since writing a work like this takes time.

If you have access to the resources, maybe retry with a Many Lights branch experimental build and see what that does. Also try Path Guiding. Note: Path Guiding is CPU only as of now. However, it takes many fewer samples to arrive at a low-noise image than regular path tracing, meaning that despite CPU rendering taking longer than GPU rendering per sample, the sample quality is so much better that sometimes you actually get faster results with CPU overall. Eventually it’ll also be available on GPU though.

Also note, that method of doing spectral rendering in many but finitely many channels is biased, since it’s missing out on the continuous nature of spectra. Hero Wavelength sampling promises to be unbiased, converging towards the actual result you’d expect in real life. Probably not that big a deal at 63 channels though. Just something to look out for.

Caustics are an effect of indirect light. They will add extra light to your renders and therefore necessarily shift colors a bit. But that’s realistic!

As light bounces through a scene, objects absorb some of the light, and reflect away the rest. This bounce light tints the light and, on repeat bounces, that causes a shift in saturation and hue.

Actual photos would also have this effect happen.

That said, Blender doesn’t do them well if you don’t use Path Guiding. Mostly they generate fireflies without it. But simply disabling them will lose you light. Energy is not conserved. The end result will be slightly darker overall.

Oh and if you enable caustics and expect realism, you also need to reduce Filter Glossy. Ideally to 0, but that’s too hard on Blender. It makes paths very difficult to find. Keeping it at like 0.1 will be a decent compromise.

There is also Manifold Next Event Estimation for better caustics, however they have limitations (shadow caustics only) and don’t as yet play well with Path Guiding, so their usefulness is currently limited. If you want to give them a try you gotta enable the Shadow Caustics checks on all relevant light sources and objects. Most likely they won’t amount to much other than slowing down your renders as of right now though. I suspect once Path Guiding plays properly with Shadow Caustics, this will improve significantly.