I feel like it’s going to be necessary to have a working implementation of spectral rendering before the rest of the pipeline can be expanded to take full advantage of its features, so even as an “invisible” implementation it should work rather well.

At the very least blackbody and coherent wavelength emitters should both be usefully adaptable, right? Those two would be a nice start.

Definitely open to that! I have a very limited understanding of the subject though; it’s just that @troy_s told me that Rec. 2020 is probably the largest colour space we can render to before we need to render spectrally, and with OCIO allowing us to render to whatever space we want, I strongly believe Cycles shouldn’t produce broken results by adhering to an outdated rendering model. Also, I believe I read somewhere that it is one of the few engines that have yet to make the move to spectral anyway.

I have no idea whether it being 2.79-based would be a problem for the devs, but I would love to see something about it on the tracker. In that regard, a diff is the best thing to start from. Can it be rebased?

To me this looks very much like a lot of things need to happen at the same time. Let’s look at it backwards: many users and studios want to be able to produce wide gamut / HDR content. For that, rendering to Rec. 2020 is essential, which would benefit from spectral rendering. For wide gamut to work, decent gamut mapping in the view is essential. @troy_s has expressed interest in designing a new Filmic config to support all of this.

That invisible implementation sounds great to me, at least for now. While users aren’t necessarily going to be wowed by it, it would at least give us all the means to generate content that holds up in wide gamut pipelines. That in itself would wow people.

That would be news to me. I haven’t looked into it much and I can’t find a lot on the topic, but different engines all seem to handle it differently, and few of the ones I looked at handle colour and materials spectrally.

I doubt it. It wasn’t written according to their code style, and there are likely a few misconceptions embedded in the code I wrote. I’m happy to add something, but I don’t think it would be a good starting point, other than as a reference.

Regarding what needs to happen, I agree, an invisible implementation would allow the rest of the colour management pipeline to be handled correctly, then if/when more spectrally dependent material options become available, they will fit naturally into the existing system.

One thing I would like to highlight is that the rendering engine internally using spectra to generate the output has distinct and separate benefits from having that output managed correctly through the rest of the system.

Using spectra within the rendering engine means the render is more physically accurate - colours fall off correctly, materials shift their apparent colour based on the illuminant, etc. The output of this is just an XYZ triplet for each pixel which accurately represents the illumination of that pixel in the scene.
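To make “an XYZ triplet for each pixel” concrete, here is a minimal sketch of the spectral-to-XYZ step. The assumptions are not from the thread: wavelengths are sampled uniformly over 360-830 nm, one wavelength per sample, and the tabulated CIE 1931 colour matching functions are replaced by the Wyman, Sloan and Shirley (2013) multi-lobe Gaussian fit. `radiance` is a hypothetical stand-in for whatever the path tracer returns at the sampled wavelength; this is not how Cycles or any particular spectral branch does it.

```python
import math
import random


def _g(lam, mu, sigma_lo, sigma_hi):
    """Piecewise Gaussian: sigma_lo left of the peak, sigma_hi right of it."""
    sigma = sigma_lo if lam < mu else sigma_hi
    return math.exp(-0.5 * ((lam - mu) / sigma) ** 2)


def cmf_xyz(lam):
    """Approximate CIE 1931 2-degree colour matching functions at lam (nm),
    using the Wyman-Sloan-Shirley (2013) multi-lobe Gaussian fit."""
    x = (1.056 * _g(lam, 599.8, 37.9, 31.0)
         + 0.362 * _g(lam, 442.0, 16.0, 26.7)
         - 0.065 * _g(lam, 501.1, 20.4, 26.2))
    y = 0.821 * _g(lam, 568.8, 46.9, 40.5) + 0.286 * _g(lam, 530.9, 16.3, 31.1)
    z = 1.217 * _g(lam, 437.0, 11.8, 36.0) + 0.681 * _g(lam, 459.0, 26.0, 13.8)
    return x, y, z


LAMBDA_MIN, LAMBDA_MAX = 360.0, 830.0


def estimate_pixel_xyz(radiance, n_samples, rng):
    """Monte Carlo estimate of one pixel's XYZ.

    Each sample draws one wavelength uniformly in [LAMBDA_MIN, LAMBDA_MAX],
    asks `radiance` (a stand-in for the path tracer) for the spectral radiance
    there, and weights it by the CMFs divided by the sampling pdf.
    """
    pdf = 1.0 / (LAMBDA_MAX - LAMBDA_MIN)
    xyz = [0.0, 0.0, 0.0]
    for _ in range(n_samples):
        lam = rng.uniform(LAMBDA_MIN, LAMBDA_MAX)
        value = radiance(lam)
        for k, bar in enumerate(cmf_xyz(lam)):
            xyz[k] += value * bar / pdf
    return tuple(c / n_samples for c in xyz)


# Usage: an equal-energy spectrum should land near the E white point (1/3, 1/3).
X, Y, Z = estimate_pixel_xyz(lambda lam: 1.0, 50_000, random.Random(0))
print(X / (X + Y + Z), Y / (X + Y + Z))
```

Only the three accumulated numbers survive per pixel; the individual wavelengths are discarded once they have been weighted.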

The next step is to handle that XYZ colour data in a correct and intuitive way, so that we can composite naturally and output to any colour space with the most accurate colour possible. This stage is where gamut mapping and Troy’s work on Filmic come into play.

If all we’re after at this stage is correct handling of colour, maybe just getting the existing RGB engine to handle a larger colour space (Rec. 2020 seems like the largest space that makes sense) might be sufficient, but of course I’m not against the idea of having it handle spectra properly in the engine either.

Although there’s very little information on it, Weta Digital’s Manuka engine is a real A+ student when it comes to spectral rendering; if we want something to aim towards, I’d say that is it.

I think it would be a bit silly to do that when spectral rendering (among other changes) would permanently future-proof Cycles and Blender in this respect. Presumably, once this is done properly, it would be fairly easy to extend to whatever color spaces one might want to use.

This is true - I just wanted to point out that correct colour management doesn’t necessarily rely on a spectral rendering engine, and just having a spectral rendering engine won’t automatically make the colour management any better.

Oh, that is very well possible. All I can refer to is Wikipedia, which mentions Arion, FluidRay, Indigo, Lux, Mental Ray, Mitsuba, Octane, Spectral Studio, Thea, Ocean, ART, and Manuka. I must say that list looked longer and more relevant the first time I saw it.

Sounds already like a good enough reason for it to be implemented!

Colour management is being looked into, regardless of spectral rendering. Rendering to Rec. 2020 is already possible, but there are many caveats.

It’s just that spectral rendering plays a huge part in handling colours correctly too. I’ve been told it’s even necessary for spaces beyond Rec. 2020, and it’s physically correct rather than approximated. Which to me makes it important enough to implement, alongside a correct pixel pipeline.

XYZ suffers the same problems that any three-light system does with imaginary or too-wide primaries. The XYZ would need to be downgraded to BT.2020 or some space with primaries that are reasonably luminous for a standard three light model.
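Downgrading XYZ to BT.2020 amounts to a 3x3 matrix. The sketch below derives that matrix from the BT.2020 primaries and D65 white point instead of hard-coding published numbers; the helper names are illustrative only. Colours outside the BT.2020 triangle come out with at least one negative component, which is exactly what the gamut mapping discussed in the thread has to deal with.

```python
def xy_to_XYZ(x, y):
    """XYZ of a chromaticity (x, y) at luminance Y = 1."""
    return [x / y, 1.0, (1.0 - x - y) / y]


def inverse3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [
        [e * i - f * h, -(b * i - c * h), b * f - c * e],
        [-(d * i - f * g), a * i - c * g, -(a * f - c * d)],
        [d * h - e * g, -(a * h - b * g), a * e - b * d],
    ]
    return [[v / det for v in row] for row in adj]


def matvec(m, v):
    return [sum(m[r][k] * v[k] for k in range(3)) for r in range(3)]


def rgb_to_xyz_matrix(primaries, white):
    """Build RGB->XYZ from primary and white chromaticities: scale each
    primary's XYZ column so that RGB (1, 1, 1) maps to the white at Y = 1."""
    cols = [xy_to_XYZ(*p) for p in primaries]
    p = [[cols[c][r] for c in range(3)] for r in range(3)]
    s = matvec(inverse3(p), xy_to_XYZ(*white))
    return [[p[r][c] * s[c] for c in range(3)] for r in range(3)]


# ITU-R BT.2020 primaries (R, G, B) and D65 white point, CIE 1931 xy.
BT2020_PRIMARIES = [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)]
D65 = (0.3127, 0.3290)

BT2020_TO_XYZ = rgb_to_xyz_matrix(BT2020_PRIMARIES, D65)
XYZ_TO_BT2020 = inverse3(BT2020_TO_XYZ)

# Usage: D65 white maps to equal RGB; the middle row is BT.2020 luminance.
print(matvec(XYZ_TO_BT2020, xy_to_XYZ(*D65)))
print(BT2020_TO_XYZ[1])
# A saturated green near the spectral locus lies outside BT.2020.
print(matvec(XYZ_TO_BT2020, xy_to_XYZ(0.0743, 0.8338)))
```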

The only way to work around the inherent problems of three light systems is to migrate to spectral.

One of the more difficult problems with three light systems is the luminance of the chosen primaries. The wider the red and blue primaries move along the spectral locus, the less luminous they are to our perceptual systems. That is, at equal radiant energy, the colours for the blueish and reddish primaries will become darker and darker.

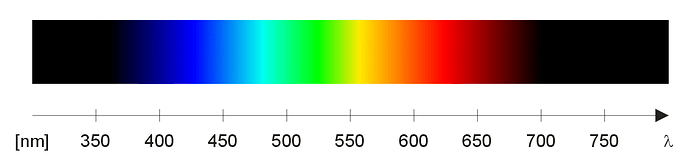

See the dark regions at the left and right of this sRGB image, near the 350 and 750 nanometre ranges? While those are entirely valid visible light spectra, they barely register as a colour in the sRGB representation above. The colours represented there are extremely saturated, but because they have such low perceptual luminance at equivalent radiant energy, they end up looking like almost no emission at all, which is made much worse by the down-gamut rendering to sRGB.
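The darkening at the ends of the spectrum follows from the photopic luminous efficiency curve, which in CIE 1931 is the ȳ colour matching function. The sketch below approximates ȳ with the Wyman, Sloan and Shirley (2013) fit (an assumption; the tabulated CIE data is the reference) and prints the luminance of monochromatic light relative to 555 nm at equal radiant power. 467, 532 and 630 nm are roughly where the BT.2020 primaries sit on the spectral locus.

```python
import math


def _g(lam, mu, sigma_lo, sigma_hi):
    """Piecewise Gaussian: sigma_lo left of the peak, sigma_hi right of it."""
    sigma = sigma_lo if lam < mu else sigma_hi
    return math.exp(-0.5 * ((lam - mu) / sigma) ** 2)


def y_bar(lam):
    """Approximate CIE 1931 y-bar (photopic luminous efficiency), lam in nm,
    using the Wyman-Sloan-Shirley (2013) fit."""
    return 0.821 * _g(lam, 568.8, 46.9, 40.5) + 0.286 * _g(lam, 530.9, 16.3, 31.1)


def relative_luminance(lam, reference=555.0):
    """Luminance of monochromatic light at lam relative to light of the
    same radiant power at the reference wavelength."""
    return y_bar(lam) / y_bar(reference)


for lam in (400, 450, 467, 532, 555, 630, 700):
    print(f"{lam} nm: {relative_luminance(lam):.3f}")
```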

So why is this a problem for three-light systems? Because indirect lighting is essentially a multiply: the incoming light is multiplied against the reflective response of the albedo.

Imagine a very wide blueish spectral primary somewhere in that legitimate 350 nm to 400 nm range from the image above, in a three-light system. It’s so wide that the effective blueish luminance is extremely dark, perhaps 1%. As we multiply the incoming light, it becomes more saturated as the quantities of light in the reddish and greenish channels diminish.

The net result is that the colours resulting from typical mixtures of the three lights become muted and dark, which leads to “flatter” looking imagery. That is, despite being potentially significantly wider gamut, the physics models yield imagery that is less than compelling. The three-light model has significant limitations.
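A toy illustration of the multiply argument in a three-light model, assuming BT.2020 primaries with their luminance weights, white incident light and a hypothetical saturated blueish albedo. Each bounce multiplies channel by channel; the reddish and greenish channels die off first, so the light converges on the blue primary, whose luminance weight is only about 0.059. This only shows the RGB side of the argument; it does not model a spectral renderer.

```python
# BT.2020 luminance weights for linear RGB (the Y row of its RGB->XYZ matrix).
BT2020_LUMA = (0.2627, 0.6780, 0.0593)


def bounce(light, albedo, n):
    """Light after n diffuse bounces off the same albedo in a three-light
    model: every bounce is a per-channel multiply."""
    return tuple(c * a ** n for c, a in zip(light, albedo))


def luminance(rgb):
    return sum(w * c for w, c in zip(BT2020_LUMA, rgb))


def blue_share(rgb):
    """Fraction of the remaining energy carried by the blue channel."""
    return rgb[2] / sum(rgb)


white = (1.0, 1.0, 1.0)
bluish_albedo = (0.2, 0.2, 0.9)  # hypothetical saturated blueish surface
for n in range(5):
    rgb = bounce(white, bluish_albedo, n)
    print(n, round(luminance(rgb), 4), round(blue_share(rgb), 3))
```

The wider the blue primary, the smaller its luminance weight, so the same chain of multiplies ends up even darker.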

Using a spectral model divides the system up into more than three lights, and as a result the colours don’t smash darker in quite the same manner, leaving more luminance on the table.

Explained it perfectly, as usual. Thanks @troy_s. Certainly, when simulating light, doing so spectrally is the only way to get meaningful results, but once a spectrum has been obtained for each pixel, any sufficiently large three-light space should be enough to store the output.

On a side note, thinking of all the ways in which a spectral renderer makes our lives as artists easier and simpler makes me really excited - the way colours become muted as you go further underwater (due to extinction of many wavelengths), thin-film and dispersion effects out-of-the-box, add to that a proper pixel pipeline and you have something really powerful.

I was wondering, with XYZ being input to the compositor, would the user get any control over the camera sensor sensitivity curves (or eye sensitivity for the research-inclined user)? Or is it perhaps part of the OCIO configuration that should be set prior to hitting F12 for render? What happens to all the beautiful spectral data once XYZ has been computed; can it be pulled into the compositor in some other manner (maybe via a “Spectral-input node”), or is it discarded once Cycles has done its magic?

There is a substantial memory overhead in retaining all the spectral data, but the response curves should at least be configurable prior to rendering. It’d be great to have the standard observer, a few colour-blindness response curves, and some camera-matching ones. This could be done at no additional performance or memory cost compared to supporting only the standard observer response curve.

Arbitrary n-channel rendering would be neat. It’s very niche as yet (5-color screens and the like aren’t exactly mainstream), but certainly neat.

Doing renders of various profiles (color blindness or camera profiles) would be nice as well. Especially if you could basically batch them together. Render once, get an image for each profile you want.

I think that would be possible without storing all spectral data. Just gotta do the step where the spectrum is converted to color multiple times, right? - Slower than doing it once, much faster than re-rendering several times.
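Rendering once and converting per profile could look like the sketch below: each wavelength sample is traced once and then projected through every response curve, so each extra profile costs one extra triplet rather than a re-render. The CIE curves use the Wyman, Sloan and Shirley (2013) fit; `hypothetical_camera` is made up purely for illustration and is not measured sensor data, and `radiance` again stands in for the renderer.

```python
import math
import random


def _g(lam, mu, sigma_lo, sigma_hi):
    sigma = sigma_lo if lam < mu else sigma_hi
    return math.exp(-0.5 * ((lam - mu) / sigma) ** 2)


def cie1931_observer(lam):
    """Approximate CIE 1931 CMFs (Wyman-Sloan-Shirley 2013 fit), lam in nm."""
    x = (1.056 * _g(lam, 599.8, 37.9, 31.0) + 0.362 * _g(lam, 442.0, 16.0, 26.7)
         - 0.065 * _g(lam, 501.1, 20.4, 26.2))
    y = 0.821 * _g(lam, 568.8, 46.9, 40.5) + 0.286 * _g(lam, 530.9, 16.3, 31.1)
    z = 1.217 * _g(lam, 437.0, 11.8, 36.0) + 0.681 * _g(lam, 459.0, 26.0, 13.8)
    return x, y, z


def hypothetical_camera(lam):
    """Made-up Gaussian R, G, B sensitivities; NOT measured camera data."""
    return tuple(math.exp(-0.5 * ((lam - mu) / 30.0) ** 2) for mu in (600.0, 540.0, 460.0))


PROFILES = {"cie1931": cie1931_observer, "hypothetical_camera": hypothetical_camera}
LAMBDA_MIN, LAMBDA_MAX = 360.0, 830.0


def render_pixel(radiance, n_samples, rng, profiles):
    """Trace each wavelength sample once, then project it through every
    response profile, accumulating one triplet per profile."""
    pdf = 1.0 / (LAMBDA_MAX - LAMBDA_MIN)
    acc = {name: [0.0, 0.0, 0.0] for name in profiles}
    for _ in range(n_samples):
        lam = rng.uniform(LAMBDA_MIN, LAMBDA_MAX)
        value = radiance(lam)  # the expensive part: done once per sample
        for name, response in profiles.items():
            for k, w in enumerate(response(lam)):
                acc[name][k] += value * w / pdf
    return {name: tuple(c / n_samples for c in a) for name, a in acc.items()}


out = render_pixel(lambda lam: 1.0, 10_000, random.Random(0), PROFILES)
for name, triplet in out.items():
    print(name, triplet)
```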

Getting rid of that bounce light problem seems rather important, though. Is it already present in plain old sRGB or Rec. 2020? It looks like between roughly 420 and 640 nm we’d be fine, but anything beyond that starts dimming significantly.

And the ability to eventually have physically based materials (“real” metals and the like) would be brilliant as well.

Yeah, I’ve played with IR/RGB/UV images before, they were fun. From spectral data you can go to as many channels as you want, just a matter of defining them.

Right. I might play with this concept in my other renderer.

Yes, any non spectral engine faces this issue to some degree.

I made some gold in the early stages of this and it just looked ‘right’ all the time. I was very happy with that.

@kram1032: note that each camera/observer profile adds 3 image planes (r, g, b) or (CIEX, Y, Z) and they would share a common alpha-plane. When you select more than (say) 5 camera/observer profiles you already have 15+1 channels to keep in memory, at which point it starts to make sense to do the spectral-to-rgb conversion in the compositor. On a side note: the spectral-2-XYZ conversion is a fairly cheap operation on both the CPU and GPU, so it is mostly about memory allocation. I’d say that a user should be able to select one out of a few camera/observer profiles or the spectral profile. When the user selects the spectral profile, the compositor shall offer nodes for spectral-2-XYZ and XYZ-2-RGB conversions.

I took a quick look at the numbers. A naive approach (storing the wavelength and intensity of every sample, assuming 32 bits for each) for a full HD image with 1000 samples needs just over 8 GB of memory. If many output channels are needed, maybe some sort of intermediate representation is called for. You’ll always lose data in such a compression though… Or maybe I’m missing something obvious.

@smilebags: to state the obvious (I have neither seen nor tested your current spectral renderer yet): the renderer could weight each spectral sample with the camera-sensor profile and accumulate it into a final XYZ output buffer. It could also accumulate into a banded spectral output buffer with (say) 22+1 bands at 360, 380, …, 780 nm. It could also (as you suggested) not accumulate at all and keep the individual samples until the compositor gets to convert them into XYZ and RGB. I have done something similar to your suggestion in the past, and it is akin to (light-field) rendering of the plenoptic field (assuming you also retain the ray’s direction onto the imaging plane). It works fine, and you can indeed do post-rendering focus, filtering and such, but it is slow (the sample-to-RGB task is mostly limited by data-transfer bandwidth). Just for fun, my renderer also memorized the object ID of the first hit and the ID of the light source, so filtering also allowed excluding parts of the scene from the RGB output and applying a coded aperture - now that is some real cryptomatte!

I dismissed the spectral binning approach in the early phases but it might make sense as an intermediate representation for multiple channel output. It takes the memory footprint down by 1 to 2 orders of magnitude depending on the sample count and bin count. Anyway, this is far beyond the initial scope of ‘let’s render things correctly’.
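The memory figures above can be checked with a little arithmetic. This sketch assumes full HD, 1000 samples per pixel and float32 storage. Reading the “32 bits” as per sample reproduces the “just over 8GB” figure; storing wavelength and intensity at 32 bits each doubles it. The 22+1 band buffer and the three planes per camera/observer profile plus a shared alpha come from the posts above.

```python
def sample_buffer_bytes(width, height, spp, bytes_per_sample):
    """Keep every spectral sample of every pixel."""
    return width * height * spp * bytes_per_sample


def planar_buffer_bytes(width, height, channels, bytes_per_channel=4):
    """A fixed number of float32 planes per pixel (bands, XYZ, alpha...)."""
    return width * height * channels * bytes_per_channel


def profile_channels(n_profiles):
    """Three planes per camera/observer profile plus one shared alpha."""
    return 3 * n_profiles + 1


W, H, SPP = 1920, 1080, 1000
naive_32 = sample_buffer_bytes(W, H, SPP, 4)   # 32 bits per sample in total
naive_64 = sample_buffer_bytes(W, H, SPP, 8)   # 32 bits each: wavelength + intensity
banded = planar_buffer_bytes(W, H, 22 + 1)     # 22 bands + alpha
profiles5 = planar_buffer_bytes(W, H, profile_channels(5))

print(f"naive, 32-bit samples:  {naive_32 / 1e9:.2f} GB")   # 8.29 GB
print(f"naive, 2 x 32-bit:      {naive_64 / 1e9:.2f} GB")   # 16.59 GB
print(f"22+1 bands:             {banded / 1e9:.2f} GB")     # 0.19 GB
print(f"5 profiles (16 planes): {profiles5 / 1e9:.2f} GB")  # 0.13 GB
print(f"reduction from banding: {naive_32 / banded:.1f}x")  # 43.5x
```

At 1000 samples per pixel the banded buffer is between one and two orders of magnitude smaller; with fewer samples the saving shrinks, since the banded size does not depend on the sample count.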

Every such banding would definitely lose quality, although probably to a negligible extent. FWIW the CIE spectral data covers a range of 390-830 nm at 1 nm steps, so if you don’t intend to go UV or IR (which, once again, would be rather fringe), I’d expect spectral rendering to cover at least that range. We might get away with, say, broader bins at the fringes though, since so little light (in terms of how intense it appears to our eyes) falls into them.
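Binning with broader bands at the fringes might look like this. The band layout (390-830 nm, 10 nm bands between 420 and 680 nm, wider bands outside) is a hypothetical choice for illustration. Each sample is simply added to the band containing its wavelength, so memory depends on the band count rather than the sample count, and any detail finer than a band is lost.

```python
import bisect


def make_band_edges():
    """Hypothetical layout over 390-830 nm: 10 nm bands in the bright
    middle of the spectrum, broader bands at the dim fringes."""
    edges = [390.0]
    edges += [float(lam) for lam in range(420, 681, 10)]  # 420, 430, ..., 680
    edges += [730.0, 780.0, 830.0]
    return edges


def accumulate(edges, bands, lam, value):
    """Add one sample's (already pdf-weighted) contribution to the band
    containing lam; samples outside the covered range are dropped."""
    if lam < edges[0] or lam > edges[-1]:
        return False
    # bisect_right finds the band; lam == last edge goes into the last band.
    i = min(bisect.bisect_right(edges, lam) - 1, len(bands) - 1)
    bands[i] += value
    return True


edges = make_band_edges()
bands = [0.0] * (len(edges) - 1)
samples = [(395.0, 0.1), (425.0, 0.5), (555.0, 1.0), (700.0, 0.2), (830.0, 0.05), (900.0, 1.0)]
for lam, value in samples:
    accumulate(edges, bands, lam, value)
print(len(bands), sum(bands))
```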

But,

I agree with that. I think it would be good to build a system like that with such features as future possibilities in mind, but it’s secondary.

Agree. We could talk for hours on band specifications. The 360-780 range is just a configuration that the industrial paint community adheres to (more or less). I can imagine that some university-based users would be highly interested in specifying custom bands in (say) UV or IR. For sure, though, a 1 nm bandwidth at HD resolutions for the full human-visible spectral range will eat a serious amount of memory. Let’s first build an MVP.


This isn’t a weighting. It’s a custom set of spectral responses per camera / lens etc. combination. IIRC this is unsurprisingly what WETA does with Manuka to match their CGI to camera footage plates.

Wiser to base it around the ranges of the CMFs, as those are the Rosetta Stone for all colour.