That’s precisely the kind of stuff I was hinting at with “future benefits”.

Thanks for the answers! Can’t wait to play with it once it becomes easy to use.

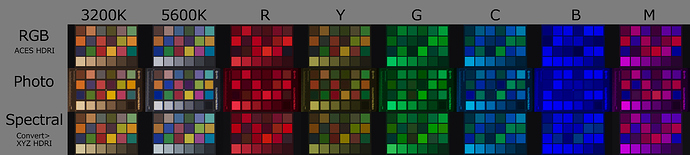

I ran a test using RGB LED lights and a high-quality HDRI, shot under those lights, that retains their wide color gamut information.

The color checker conforms to ISO 17321.

Since the shaders don’t perform spectral calculations yet, is there perhaps a difference in energy loss? It is easiest to see on the yellow tiles lit with blue.

Of course, I think there are limits to what an HDRI can express.

Would need to know what you have done here. Properly transformed, ACES and the rest will all yield precisely the same RGB-to-XYZ results, and hence the same RGB. Assuming the charts are reasonable, I believe all of the CC24 values will end up in gamut for BT.709-based primaries.

It is unclear what is happening between the charts.

I do not understand what this means.

The reason for the ACES-to-XYZ conversion is that the color config of the Spectral branch has no Linear ACES input. I could have added one to my config, but this time I converted beforehand, since an XYZ input was already available.

So the two are the same.

The high-quality HDRI was made with pre-measured, calibrated cameras; under sunlight, the average color difference between the rendered chart and the captured chart is 1.8.
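
For anyone wanting to reproduce that kind of figure: the post doesn’t say which ΔE formula was used, so this minimal sketch assumes the simplest one, CIE76 (plain Euclidean distance in CIELAB), averaged over corresponding patches. The patch values in the usage lines are made up.

```python
import math

def delta_e76(lab1, lab2):
    """CIE76 color difference: Euclidean distance between two CIELAB triples."""
    return math.dist(lab1, lab2)

def mean_delta_e(chart_a, chart_b):
    """Average CIE76 difference over corresponding patches of two charts."""
    if len(chart_a) != len(chart_b) or not chart_a:
        raise ValueError("charts must be non-empty and have the same patch count")
    return sum(delta_e76(a, b) for a, b in zip(chart_a, chart_b)) / len(chart_a)

# Hypothetical L*a*b* values for two patches, rendered vs. captured.
rendered = [(50.0, 0.0, 0.0), (60.0, 10.0, 10.0)]
captured = [(51.0, 0.0, 0.0), (60.0, 10.0, 13.0)]
print(mean_delta_e(rendered, captured))  # 2.0
```

Newer formulas such as CIEDE2000 weight lightness, chroma and hue differently, so the same pair of charts can give a noticeably different average.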

Its color gamut is also close to the BT.2020 range retained in the camera’s RAW data.

Therefore, the photographic results are valid as a measurement sample, and the closer a render gets to them, the more accurate it is.

Of course I am aware that Blender’s rendering color space is based on BT.709, and the chart is also based on BT.709.

However, according to the spectral measurement of the light source, the LED’s green lies outside the BT.709 gamut, yet the rendered color still matches the photographed color without much deviation.
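
A quick way to check a claim like that is a point-in-triangle test in CIE 1931 xy against the BT.709 primaries. The measured chromaticity of the LED green isn’t given in the thread, so this sketch uses the BT.2020 green primary as a stand-in.

```python
# BT.709 / sRGB primaries as CIE 1931 xy chromaticities (R, G, B).
BT709 = ((0.640, 0.330), (0.300, 0.600), (0.150, 0.060))

def _cross(o, a, b):
    """Z component of (a - o) x (b - o); its sign tells which side of o->a point b is on."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def in_gamut_xy(xy, primaries=BT709):
    """True if chromaticity xy lies inside (or on the edge of) the primaries' triangle."""
    r, g, b = primaries
    d = (_cross(r, g, xy), _cross(g, b, xy), _cross(b, r, xy))
    has_neg = any(v < 0 for v in d)
    has_pos = any(v > 0 for v in d)
    # Inside means the point is on the same side of all three edges.
    return not (has_neg and has_pos)

print(in_gamut_xy((0.3127, 0.3290)))  # D65 white point: True
print(in_gamut_xy((0.170, 0.797)))    # BT.2020 green primary: False
```

Note this only tests chromaticity; a color inside the triangle can still clip if its luminance is out of range.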

I don’t want to draw any conclusions from these results yet, because I’m still working on other verifications, such as switching to ACES in other renderers and configs.

That’s the simplest path. There’s no magic in ACES, so a simple transform to sRGB / BT.709 primaries is the most direct path, assuming it doesn’t result in out-of-gamut output.
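
To make the “simple transform” concrete, here is a minimal numpy sketch, assuming the ACES2065-1 (AP0) and BT.709 chromaticities below. It builds each RGB-to-XYZ matrix from its primaries and white point, bridges the ACES white to D65 with a Bradford adaptation (one common choice, not necessarily what any particular config uses), and flags negative results as out of gamut.

```python
import numpy as np

# CIE 1931 xy chromaticities: AP0 (ACES2065-1) and BT.709 primaries, plus white points.
AP0 = [(0.7347, 0.2653), (0.0000, 1.0000), (0.0001, -0.0770)]
ACES_WHITE = (0.32168, 0.33767)
BT709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
D65 = (0.3127, 0.3290)

BRADFORD = np.array([
    [0.8951, 0.2664, -0.1614],
    [-0.7502, 1.7135, 0.0367],
    [0.0389, -0.0685, 1.0296],
])

def xy_to_XYZ(x, y):
    """XYZ with Y = 1 for a given chromaticity."""
    return np.array([x / y, 1.0, (1.0 - x - y) / y])

def rgb_to_xyz_matrix(primaries, white):
    """Normalized primary matrix: RGB (1, 1, 1) maps to the white point with Y = 1."""
    P = np.column_stack([xy_to_XYZ(*p) for p in primaries])
    scale = np.linalg.solve(P, xy_to_XYZ(*white))
    return P * scale  # scales each primary's column

def bradford(src_white, dst_white):
    """Von Kries-style adaptation in the Bradford cone space."""
    src = BRADFORD @ xy_to_XYZ(*src_white)
    dst = BRADFORD @ xy_to_XYZ(*dst_white)
    return np.linalg.inv(BRADFORD) @ np.diag(dst / src) @ BRADFORD

def aces_to_bt709_matrix():
    to_xyz = rgb_to_xyz_matrix(AP0, ACES_WHITE)
    from_xyz = np.linalg.inv(rgb_to_xyz_matrix(BT709, D65))
    return from_xyz @ bradford(ACES_WHITE, D65) @ to_xyz

def out_of_bt709(rgb, tol=1e-9):
    """Any negative component means the color can't be represented with BT.709 primaries."""
    return bool(np.any(np.asarray(rgb) < -tol))

M = aces_to_bt709_matrix()
print(M @ np.ones(3))                         # ACES white -> roughly (1, 1, 1)
print(out_of_bt709(M @ np.array([0, 1, 0])))  # pure AP0 green: True
```

Since both sides pass through XYZ, any correctly built chain (ACES, XYZ or otherwise) lands on the same BT.709 values; only the out-of-gamut cases need extra handling.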

The reason I said “I don’t understand” is that a chart is a reflective target. Under ideal illumination, there is no such thing as an “HDRI” target; it’s a normalized reflectance that is returned.

Cameras are spectral capture devices that cannot be inverted back to spectra without the spectral sensitivity curves from the camera, which are rarely supplied or available.

As a result, cameras are forcibly fit to RGB. This results in a portion of the data being close-ish to “data”, and a good chunk of the values being complete non-data. A test chart will be transformed against a fit set of camera virtual primaries. It’s less accurate than many folks understand.
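
A rough illustration of that forced fit: a least-squares 3x3 matrix from camera RGB to reference XYZ over chart patches. The data is synthetic, and the squared term is only a crude stand-in for a camera that doesn’t satisfy the Luther condition (sensitivities being a linear combination of the color matching functions). Real spectral mismatch is worse, since metameric pairs can share a camera RGB yet differ in XYZ, so no function of camera RGB can fix them.

```python
import numpy as np

def fit_camera_matrix(camera_rgb, reference_xyz):
    """Least-squares 3x3 matrix M with reference_xyz ~= camera_rgb @ M.T (one patch per row)."""
    rgb = np.asarray(camera_rgb, dtype=float)
    xyz = np.asarray(reference_xyz, dtype=float)
    m_t, *_ = np.linalg.lstsq(rgb, xyz, rcond=None)
    return m_t.T

def patch_errors(M, camera_rgb, reference_xyz):
    """Per-patch Euclidean error (in XYZ) left over after applying the fitted matrix."""
    predicted = np.asarray(camera_rgb, dtype=float) @ M.T
    return np.linalg.norm(predicted - np.asarray(reference_xyz, dtype=float), axis=1)

# Synthetic 24-patch example (made-up data, not real camera measurements).
rng = np.random.default_rng(0)
camera_rgb = rng.random((24, 3))
true_m = np.array([[0.6, 0.3, 0.1], [0.2, 0.7, 0.1], [0.0, 0.1, 0.9]])

# If the camera really were a linear transform of XYZ, the fit recovers it exactly...
linear_xyz = camera_rgb @ true_m.T
print(np.allclose(fit_camera_matrix(camera_rgb, linear_xyz), true_m))  # True

# ...but a non-linear relationship leaves residual error that no 3x3 matrix removes.
bent_xyz = linear_xyz + 0.05 * camera_rgb ** 2
M = fit_camera_matrix(camera_rgb, bent_xyz)
print(patch_errors(M, camera_rgb, bent_xyz).max())
```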

If the chart starts spectral, that’s the most useful chart of the whole set, but even then, we end up going to artificial primaries based on the spectral composition as transformed to XYZ via standard observer functions. That means we have lost that awesome spectral goodness until @smilebags grafts in a means to use actual spectra, which is when a spectral renderer can actually shine properly.
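
For reference, the “transformed to XYZ via standard observer functions” step is an integral of the spectrum against the CIE 1931 color matching functions. This minimal sketch uses the analytic multi-lobe Gaussian fit from Wyman, Sloan and Shirley (2013) instead of the tabulated CIE data so it stays self-contained, so expect small errors versus the real tables. Any two spectra that integrate to the same XYZ are indistinguishable after this step.

```python
import math

def _lobe(lam, mu, s1, s2):
    """Piecewise Gaussian: width s1 below the peak mu, s2 above it."""
    t = (lam - mu) / (s1 if lam < mu else s2)
    return math.exp(-0.5 * t * t)

def cmf_1931(lam):
    """Approximate CIE 1931 2-degree x-bar, y-bar, z-bar at wavelength lam (nm).
    Analytic fit (Wyman, Sloan & Shirley 2013), not the tabulated CIE data."""
    x = (1.056 * _lobe(lam, 599.8, 37.9, 31.0)
         + 0.362 * _lobe(lam, 442.0, 16.0, 26.7)
         - 0.065 * _lobe(lam, 501.1, 20.4, 26.2))
    y = 0.821 * _lobe(lam, 568.8, 46.9, 40.5) + 0.286 * _lobe(lam, 530.9, 16.3, 31.1)
    z = 1.217 * _lobe(lam, 437.0, 11.8, 36.0) + 0.681 * _lobe(lam, 459.0, 26.0, 13.8)
    return x, y, z

def spectrum_to_xyz(spd, start=360.0, stop=830.0, step=1.0):
    """Riemann-sum integral of spd(lam) against the color matching functions."""
    X = Y = Z = 0.0
    steps = int(round((stop - start) / step))
    for i in range(steps + 1):
        lam = start + i * step
        power = spd(lam)
        xb, yb, zb = cmf_1931(lam)
        X += power * xb * step
        Y += power * yb * step
        Z += power * zb * step
    return X, Y, Z

def chromaticity(X, Y, Z):
    """Project XYZ to CIE 1931 xy."""
    total = X + Y + Z
    return X / total, Y / total

# An equal-energy spectrum should land near (1/3, 1/3).
print(chromaticity(*spectrum_to_xyz(lambda lam: 1.0)))
# A narrow band around 520 nm lands far up the green edge of the diagram.
print(chromaticity(*spectrum_to_xyz(lambda lam: math.exp(-0.5 * ((lam - 520.0) / 2.0) ** 2))))
```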

ACES is just-another-RGB colourspace, so no magic. When spectra ends up more mainstream, it too will need a do-over.

Thank you, and I apologize for the lack of explanation. We are aware of the points you have made and are verifying them. Please understand that this is only a simple verification result.

All tests are very useful. Please keep providing what you can. Thanks!

It’s interesting to see which swatches look different between the three images. I’m only eyeballing here, so it’s not perfect.

That being said, in each color channel breakdown, most of these swatches look really similar to each other, no matter which image.

For the most part, RGB and Spectral are in closer mutual agreement than either is with the camera version.

The camera seems to downplay blue a bit relative to either RGB or Spectral rendering.

In the red mode, turquoise (third row, last column) is closer to the camera in Spectral than in RGB.

In the green mode, red (third column, third row) is much closer to the camera in Spectral than in RGB. This pretty much aligns with my five-rooms test.

Also in the green mode, the camera version of pink (third column, second row) oddly looks like it sits right between the RGB version and the Spectral version.

The cyan mode shows the same as green.

In the blue mode, the most obvious difference is once again red, which looks very dark on camera and even darker on RGB but on spectral it actually comes through with a greenish tinge. This, I think, is simply a result of the upsampled spectra which are crafted to be as wide and, therefore, “boring”, as possible. There’s actually quite a bit of green in the Spectral red and it shows up accordingly.

A similar slight green tinge can be observed in the spectral version of the lime and gold patches (second row, second-to-last and last columns respectively).

In magenta mode, the lime and gold patches look ever so slightly more bluish (i.e. less purely red, towards purple) on camera and spectrally than does the RGB version.

Also in magenta mode, the green patch (third row, second column) just generally looks brighter on camera and in Spectral mode than with the RGB render.

Still in magenta mode, the yellow patch (third row, fourth column) looks much closer to the camera version in Spectral than in RGB.

As for the light temperature takes, I don’t know what light source you used, but if it’s an accurate blackbody radiator at the defined temperatures, then that’s quite different from the spectra used in the render, as what’s going on there simply amounts to the color of the light being interpreted as RGB and then re-upsampled as if it were a normal color. Same as with the various “purely colored*” light sources.

I don’t see a whole lot of differences between those two takes. Mostly, the camera seems to saturate things much more strongly than Cycles does. And for some reason, the camera version’s grid lines are much lighter than its darkest gray (which looks like it has been used as a black point for calibration?), whereas the opposite is true for the Cycles renders.

* “purely colored” as in, I can only assume, extreme lighting given by picking R 1 G 0 B 0 for red and so on. Obviously none of these shots feature fully coherent single-pure-wavelength light.

Sidenote.

I’ve pasted the simple spectral measurement data of the LED light used (Aputure AL-MC).

The color was set by hue value (0-360°) in the light’s control app.

- The blue color is not very accurate due to overexposure.

Oh, that’s very nice. I’d love for this experiment to be repeated once we have proper spectral inputs, so we could match those light sources and, ideally, the swatch spectra as well.

That’s the thing; without spectral input it is all just reconstructed from chromaticities.

Splitting hairs here and there based on a slight deviation in coordinates.

Spectral lights with spectral input yield much, much more varied results. It is easy to visualize if one thinks about an illuminating source of identical chromaticity, yet with sharp or smooth or mixed composition.

Yep, something good to keep in mind while testing at the moment is that everything is essentially still RGB, so there’s not much to be gained from trying to study different illuminants yet. I might see if I can start working on custom spectra before sorting out the volume stuff, since volumes are turning out to be very difficult to solve.

Of course. However, even so, this test shows at least a few little things. First and foremost, whenever the Spectral and RGB versions are noticeably different, the Spectral version is usually a closer match to the camera than the RGB version, even with the crude estimates we have so far. IMO that is quite encouraging.

Of course there is very limited information that could be extracted beyond that so long as we don’t have that spectral input. But it’s a start regardless.

Very much looking forward to that! Custom spectra would open a slew of new tests we could try.

If you can’t yet get the blackbody node and some sort of narrow wavelength node to work, at least this is gonna make it possible to approximate those nodes with custom inputs. Should be easy enough to “manually” (that is, with the help of some sort of script) build fixed input lists we could try.
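
A minimal sketch of such a script. How the spectral branch will actually accept custom spectra isn’t described here, so the (wavelength in nm, relative power) pair list, the 380-730 nm range and the 10 nm step are all assumptions. It samples a Planck blackbody (1850 K, a commonly quoted candle-flame figure) and a Gaussian narrow band, each normalized to a peak of 1.

```python
import math

H = 6.62607015e-34  # Planck constant (J s)
C = 2.99792458e8    # speed of light (m/s)
K = 1.380649e-23    # Boltzmann constant (J/K)

def planck(lam_nm, temp_k):
    """Blackbody spectral radiance at wavelength lam_nm (nm) and temperature temp_k (K)."""
    lam = lam_nm * 1e-9
    return (2.0 * H * C ** 2 / lam ** 5) / math.expm1(H * C / (lam * K * temp_k))

def narrowband(lam_nm, center_nm, fwhm_nm):
    """Gaussian band with the given center and full width at half maximum."""
    sigma = fwhm_nm / (2.0 * math.sqrt(2.0 * math.log(2.0)))
    return math.exp(-0.5 * ((lam_nm - center_nm) / sigma) ** 2)

def sample(spd, start=380, stop=730, step=10, normalize=True):
    """Hypothetical fixed input list: [(wavelength_nm, relative_power), ...]."""
    wavelengths = list(range(start, stop + 1, step))
    values = [spd(w) for w in wavelengths]
    if normalize:
        peak = max(values)
        values = [v / peak for v in values]
    return list(zip(wavelengths, values))

candle = sample(lambda w: planck(w, 1850.0))
green = sample(lambda w: narrowband(w, 530.0, 20.0))
print(candle[:3], candle[-1])
print(dict(green)[520], dict(green)[530])
```

At 1850 K the blackbody peak sits far in the infrared, so the visible samples rise steadily towards red, which is exactly the kind of shape an RGB-upsampled approximation may not reproduce.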

Especially interesting might be testing the very hot colors (those corresponding to relatively cold temperatures, such as candlelight): it presumably should make quite a difference whether you use such a low-Kelvin spectrum or the RGB approximation that’s currently going on.

My sole point is that we are ultimately dealing with chromaticities.

No matter what camera, no matter whatever, the data is distilled down to a chromaticity. To that end, we can start at the best available chromaticity, which is the known and published chromaticities of the various CC24 or IT8 based charts. Cut out the inaccurate middle person of a camera, or some indirect compilation process.

From there, the chromaticities are upsampled exactly the same way in every instance, hence starting at chromaticities makes more sense for evaluations here?
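
A minimal sketch of that starting point, assuming the published value is given as CIE xyY relative to D65 and using the commonly published four-decimal XYZ-to-linear-sRGB matrix. Published CC24 references are often specified for D50, in which case a chromatic adaptation step would be needed first; that step is left out here.

```python
def xyY_to_XYZ(x, y, Y):
    """Convert a chromaticity plus luminance to XYZ (y == 0 is treated as black)."""
    if y == 0:
        return 0.0, 0.0, 0.0
    return x * Y / y, Y, (1.0 - x - y) * Y / y

# XYZ (D65) -> linear sRGB / BT.709, commonly published four-decimal matrix.
XYZ_TO_BT709 = (
    (3.2406, -1.5372, -0.4986),
    (-0.9689, 1.8758, 0.0415),
    (0.0557, -0.2040, 1.0570),
)

def xyY_to_linear_bt709(x, y, Y):
    """Linear BT.709 RGB for a D65-relative xyY value; negatives mean out of gamut."""
    XYZ = xyY_to_XYZ(x, y, Y)
    return tuple(sum(m * c for m, c in zip(row, XYZ)) for row in XYZ_TO_BT709)

# The D65 white point itself should come out close to (1, 1, 1).
print(xyY_to_linear_bt709(0.3127, 0.3290, 1.0))
```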

Will spectral rendering be available by Dec. 2021?

That’s a very specific question, why do you ask?

Just an exciting idea… looking forward to using it in action. Sorry to bother you.

Not a bother at all, I definitely hope something will be merged before then! Glad you’re excited about it.