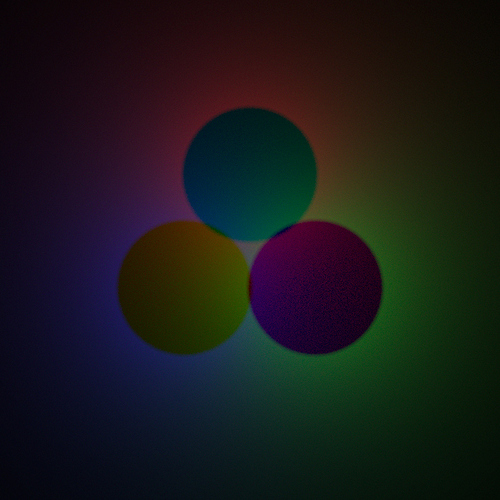

Here is the difference between the two (no contrast boosting). Spectral is darker than RGB everywhere. Interestingly, though, the channels in which it is darker flip at some point.

The difference is in the radiometric transport. “Darker” isn’t the ideal way to try to visualize it, as darkness is the result of a photometric conversion based, if done properly, on luminous efficacy. A similar problem arises when comparing a 555 nm laser projecting R radiometric units onto a surface with an infrared source at exactly the same radiometric level R: a visible brightness evaluation would fall just as short.
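For reference, the standard photometric weighting makes the laser example concrete. Luminous flux is spectral radiant flux weighted by the CIE photopic luminosity function V(λ) and scaled by the maximum luminous efficacy K_m:

$$
\Phi_v = K_m \int V(\lambda)\,\Phi_{e,\lambda}(\lambda)\,\mathrm{d}\lambda, \qquad K_m \approx 683\ \mathrm{lm/W}
$$

At 555 nm, V ≈ 1, so each watt yields roughly 683 lm; for infrared light outside the visible range, V(λ) = 0, so the same radiometric power yields no luminous flux at all.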

If the spectral components were exposed, you could do a radiometric comparison and see how the two differ on the resulting bounce, but the spectral components aren’t exposed as of now.

Interesting demos nonetheless!

Of course! The render was done as a test to help you with development, not as a bug fix request.

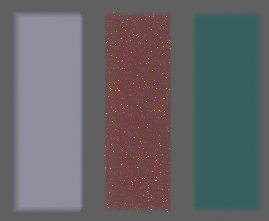

I’m in the process of converting volumes to work with spectral rendering. Absorption is working as expected, but both emission and scattering are giving really unpredictable results. I’ve overridden the selection between heterogeneous and homogeneous so that I can get the simple case working first, but I think I must be missing something important.

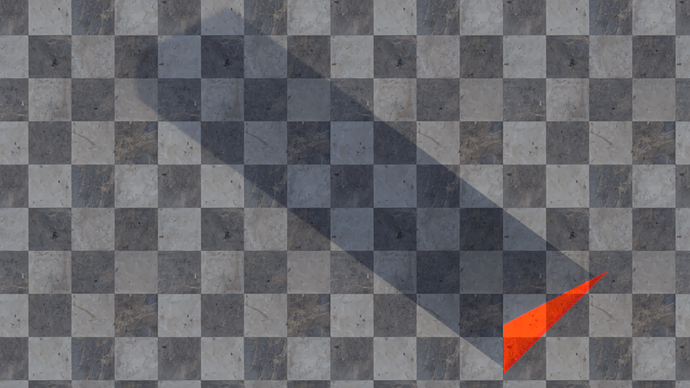

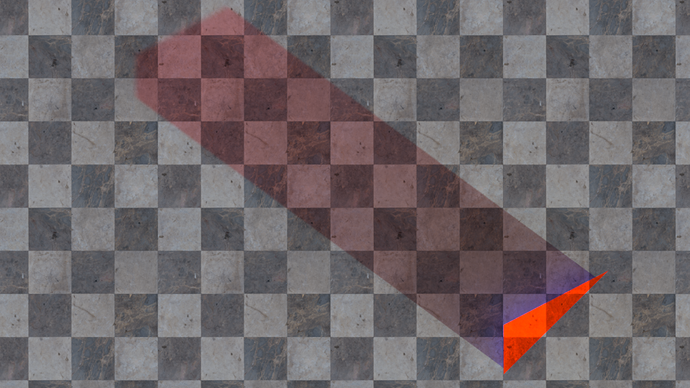

This image shows emission, scattering and absorption of the colour on the right.

For reference, here’s the same scene on master:

Can you give me some pointers on how the volume sampling code works? I’m limiting myself to homogeneous volumes, CPU and regular path tracing. I think I’m probably missing a conversion somewhere for emission (it’s picking up some colour but not all of it), and I have no idea what’s happening with scattering. Maybe the fact that ray paths seem to depend on the colour is throwing me off.

Extra info if it helps:

I’ve added a few types which don’t change anything functionally; they just help me not get lost in the code:

typedef float3 SpectralColor: SpectralColor holds the intensity at each of the three wavelengths in state->wavelengths. This will also help abstract away the switch to float4 hero wavelength sampling later.

typedef float3 RGBColor: RGBColor represents scene-linear RGB float3 variables (such as what ends up in the output buffer).

I’ve declared the properties of VolumeShaderCoefficients (sigma_t, sigma_s and emission) as SpectralColor, and I’m converting to SpectralColor when assigning their initial values in volume_shader_sample in kernel_volume.h:

```cpp
coeff->sigma_s = make_float3(0.0f, 0.0f, 0.0f);
coeff->sigma_t = (sd->flag & SD_EXTINCTION)
                     ? rgb_to_wavelength_intensities(sd->closure_transparent_extinction, state->wavelengths)
                     : make_float3(0.0f, 0.0f, 0.0f);
coeff->emission = (sd->flag & SD_EMISSION)
                      ? rgb_to_wavelength_intensities(sd->closure_emission_background, state->wavelengths)
                      : make_float3(0.0f, 0.0f, 0.0f);
```

I’m also converting back to RGBColor just before both calls to path_radiance_accum_emission in kernel_volume.h (around lines 520 and 640), but I’m having to do a little hack, since I’m not confident I get the right value if I convert the throughput to RGB before multiplying it by the colour returned from kernel_volume_emission_integrate.

Here is an example of converting back to RGBColor and accumulating, at around line 640 in kernel_volume.h:

```cpp
SpectralColor emission = kernel_volume_emission_integrate(&coeff, closure_flag, transmittance, dt);
RGBColor rgb_emission = wavelength_intensities_to_linear(kg, emission, state->wavelengths * tp);
path_radiance_accum_emission(kg, L, state, make_float3(1.0f, 1.0f, 1.0f), rgb_emission);
```
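The ordering worry about throughput comes down to linearity: a linear spectral-to-RGB conversion commutes with sums and scalar scaling, but not with a per-wavelength product. The sketch below uses an invented 3×3 matrix as a hypothetical stand-in for wavelength_intensities_to_linear (the real conversion depends on the sampled wavelengths); the only property it relies on is that the conversion is linear. Multiplying throughput and emission per wavelength first and converting last is the order that preserves the spectral product.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Three wavelength samples, or three RGB channels.
using Spectral3 = std::array<float, 3>;
using RGB3 = std::array<float, 3>;

// Component-wise product (per wavelength, or per channel).
inline std::array<float, 3> mul(const std::array<float, 3> &a, const std::array<float, 3> &b) {
  return {a[0] * b[0], a[1] * b[1], a[2] * b[2]};
}

// Hypothetical stand-in for a spectral -> scene-linear RGB conversion.
// The matrix entries are invented; only linearity matters here.
inline RGB3 to_rgb(const Spectral3 &s) {
  static const float M[3][3] = {{0.1f, 0.3f, 1.2f}, {0.2f, 1.0f, 0.3f}, {1.1f, 0.4f, 0.0f}};
  RGB3 out{};
  for (int i = 0; i < 3; ++i)
    for (int j = 0; j < 3; ++j) out[i] += M[i][j] * s[j];
  return out;
}

// Weight the spectral emission by the spectral throughput, then convert.
inline RGB3 contribution_convert_last(const Spectral3 &throughput, const Spectral3 &emission) {
  return to_rgb(mul(throughput, emission));
}

// Converting each factor to RGB before multiplying gives a different result.
inline RGB3 contribution_convert_first(const Spectral3 &throughput, const Spectral3 &emission) {
  return mul(to_rgb(throughput), to_rgb(emission));
}
```

Passing a unit throughput to the accumulation call and folding the spectral throughput into the emission before conversion amounts to the convert-last order.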

Are there any other places where light is contributed to PathRadiance in the volume code? If not, I guess that isn’t my issue.

I imagine the pdf (since it is derived from the VolumeShaderCoefficients extinction, transmittance, etc.) is causing a lot of my issues, since I’ve ignored it so far. I was under the assumption that it only affected the sampling weights, but that doesn’t seem to be the case here.
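On the pdf question: in distance sampling, the throughput is divided by the pdf, so it changes the contribution and not just where samples land. When extinction differs per channel (or per wavelength), a common approach is to draw the distance from one channel's exponential and then use the mixture pdf over all channels, which is also why sampled ray paths depend on the colour. Below is a generic sketch of that scheme (one-sample MIS with the balance heuristic) for an unbounded homogeneous medium, so the ray always scatters. It is not Cycles' kernel_volume.h code, and all names are hypothetical. In the spectral branch, the three entries would be the three wavelength samples, so sigma_t and sigma_s would need to be spectral values.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Channels = std::array<float, 3>;

struct DistanceSample {
  float t;          // sampled scattering distance
  Channels weight;  // throughput multiplier: sigma_s * T(t) / pdf(t)
};

// xi_channel, xi_distance: uniform random numbers in [0, 1).
// channel_prob: probability of picking each channel (sums to 1).
inline DistanceSample sample_distance(const Channels &sigma_t, const Channels &sigma_s,
                                      const Channels &channel_prob, float xi_channel,
                                      float xi_distance) {
  // Pick the channel whose exponential drives the sampling.
  int c = 0;
  float cdf = channel_prob[0];
  while (c < 2 && xi_channel >= cdf) cdf += channel_prob[++c];
  assert(sigma_t[c] > 0.0f);

  // Inverse-CDF sample of p_c(t) = sigma_c * exp(-sigma_c * t).
  const float t = -std::log(1.0f - xi_distance) / sigma_t[c];

  // Mixture pdf over all channels; using p_c(t) alone would bias the others.
  float pdf = 0.0f;
  Channels transmittance{};
  for (int i = 0; i < 3; ++i) {
    transmittance[i] = std::exp(-sigma_t[i] * t);
    pdf += channel_prob[i] * sigma_t[i] * transmittance[i];
  }

  DistanceSample s{t, {}};
  for (int i = 0; i < 3; ++i) s.weight[i] = sigma_s[i] * transmittance[i] / pdf;
  return s;
}
```

With equal extinction in every channel, the weight reduces to sigma_s / sigma_t. With differing extinction, individual weights vary from sample to sample, but their average still converges to sigma_s / sigma_t per channel.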

If you introduce a proper SpectralColor class, the compiler will likely tell you where you missed the conversion. I don’t really see the point of spending time debugging this until that is in place.

If you need a quick way of getting a SpectralColor that’s incompatible with RGBColor, this should work:

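In util_types.h, insert this before #include "util/util_types_float3.h":

```cpp
// Rename every float3 identifier for the duration of the includes below.
#define float3 SpectralColor
#define make_float3 make_spectral_color
#define print_float3 print_spectral_color

// Pull in the float3 definitions again, producing an identical but distinct SpectralColor type.
#include "util/util_types_float3.h"
#include "util/util_types_float3_impl.h"

// Clear the include guards so the regular float3 include that follows is not skipped.
#undef __UTIL_TYPES_FLOAT3_H__
#undef __UTIL_TYPES_FLOAT3_IMPL_H__

// Stop renaming.
#undef float3
#undef make_float3
#undef print_float3
```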

It’s not pretty, but should do the trick.

Is this going to stop regular old float3s from working at all? They still need to exist for things like normals etc., but it would be nice if assigning a float3 to a SpectralColor gave me a warning. I’ll try it out and see what breaks, thanks.

float3 remains as is. This little snippet of macros creates a duplicate of float3 under the name of SpectralColor. When I apply this, I see some errors being thrown when the code tries things like this:

```cpp
SpectralColor c = make_float3(0.0f, 0.0f, 0.0f);
```

instead of

```cpp
SpectralColor c = make_spectral_color(0.0f, 0.0f, 0.0f);
```
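Why the macros above produce those errors can be seen in a self-contained version of the same idea. DEFINE_FLOAT3_LIKE and spectral_from_float3 are hypothetical helpers for this sketch; the snippet in the thread instead re-includes Cycles' own float3 headers with the names swapped via #define. Two structs with identical members are still distinct types in C++, so nothing converts implicitly between them, while float3 itself is untouched.

```cpp
#include <cassert>
#include <type_traits>

// Stamp out the same three-float struct and constructor under a given name.
#define DEFINE_FLOAT3_LIKE(TYPE, MAKE) \
  struct TYPE {                        \
    float x, y, z;                     \
  };                                   \
  inline TYPE MAKE(float x, float y, float z) { return TYPE{x, y, z}; }

DEFINE_FLOAT3_LIKE(float3, make_float3)
DEFINE_FLOAT3_LIKE(SpectralColor, make_spectral_color)

#undef DEFINE_FLOAT3_LIKE

// Same layout...
static_assert(sizeof(float3) == sizeof(SpectralColor), "identical layout");
// ...but no implicit conversion in either direction, so
// `SpectralColor c = make_float3(0.0f, 0.0f, 0.0f);` does not compile.
static_assert(!std::is_convertible<float3, SpectralColor>::value, "float3 must not convert");
static_assert(!std::is_convertible<SpectralColor, float3>::value, "SpectralColor must not convert");

// An explicit, named conversion stays available where it is intended.
inline SpectralColor spectral_from_float3(const float3 &v) {
  return make_spectral_color(v.x, v.y, v.z);
}
```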

Nice, thanks for that. I think it’ll help me a lot. I can still explicitly convert from one to the other with the relevant make_* function, so this will make the whole thing much stricter.

Even so, making a proper SpectralColor class is gonna be valuable and you probably should get to that sooner rather than later. Everything else is gonna be built on that.

Unfortunately, as I understand it, I can’t use classes in the Cycles kernel. It would be lovely to be able to, but it doesn’t seem to be supported. I will define the relevant struct and operator overloads, which will make direct assignment from a float3 a compile-time error, but doing so means I can’t get a build at all until everything is converted, which is a bit restrictive. In the past, that sort of approach has only led me to scrap my progress and start again.

It actually has the same effect as the snippet Stefan provided, just that I have to redefine all the operators if I am to make the type from scratch.
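One way to avoid redefining every operator for each new type is a tagged template: the operators are written once for Color3&lt;Tag&gt;, and each tag produces a distinct type. This is a swapped-in technique, not something from this thread or from Cycles, and all names are hypothetical. It assumes the kernel is compiled as C++ (as on the CPU and CUDA paths); OpenCL C supports neither templates nor operator overloading, so it would not carry over there as-is.

```cpp
#include <cassert>
#include <type_traits>

// One vector template; the Tag parameter only distinguishes types.
template<typename Tag> struct Color3 {
  float x, y, z;
};

struct SpectralTag {};
struct RGBTag {};
using SpectralColor = Color3<SpectralTag>;
using RGBColor = Color3<RGBTag>;

// Operators are written once and only accept operands with the same Tag.
template<typename Tag> inline Color3<Tag> operator+(const Color3<Tag> &a, const Color3<Tag> &b) {
  return {a.x + b.x, a.y + b.y, a.z + b.z};
}
template<typename Tag> inline Color3<Tag> operator*(const Color3<Tag> &a, const Color3<Tag> &b) {
  return {a.x * b.x, a.y * b.y, a.z * b.z};
}
template<typename Tag> inline Color3<Tag> operator*(const Color3<Tag> &a, float s) {
  return {a.x * s, a.y * s, a.z * s};
}

// Mixing spaces fails to compile: no operator*(SpectralColor, RGBColor) exists,
// and there is no implicit conversion between the two.
static_assert(!std::is_convertible<RGBColor, SpectralColor>::value, "no implicit RGB -> spectral");
static_assert(!std::is_convertible<SpectralColor, RGBColor>::value, "no implicit spectral -> RGB");
```

Adding a new colour space then only needs a new tag type, and explicit conversion functions remain the only way to cross between spaces.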

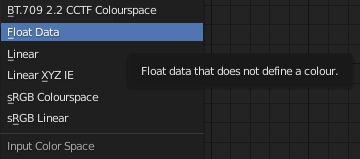

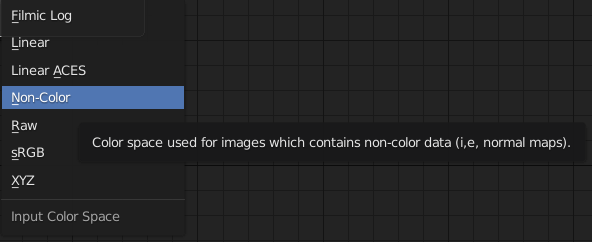

I tried rendering some stuff with textures but ran into a bit of a snag. To really compare them, I’d have to make sure the textures are in the same color space, right?

But I’m not sure what corresponds to what:

These are the options it gives me in the Spectral branch vs.

These in regular Blender. So I was wondering, what’s what? In particular, what would be “regular RGB” for normal images and what would be the equivalent of Non-Color? Would that be the Float Data option?

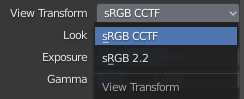

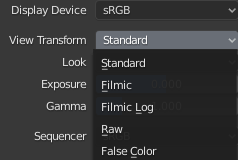

Similarly, for optimal comparability, what color management options should I pick for now?

I’ve thus far just gone with the default in the spectral branch. Not sure if that actually corresponds to anything in regular Cycles.

Not having paid attention to that so far might well explain some of the differences I saw. Especially the generally darker look of Spectral Cycles.

Thanks for the effort testing.

I will give you a proper answer within a day, but simply put:

sRGB is the best equivalent to the default view transform on my branch. You can use the default colour management in my branch and switch master to sRGB.

Things are marginally darker overall. I’m not entirely sure why, but you should be able to account for this with a tiny shift in exposure.

Textures should be in sRGB when coming in, but I haven’t had a good look at the input config options, I imagine the default should work.

Make sure the images you use are either tagged with the sRGB profile or untagged but in sRGB.

Which sRGB, though?

And the thing is, textures shouldn’t always be sRGB. Normal maps and greyscale stuff shouldn’t be, right? Those are typically Non-Color, as far as I understood.

Thanks

I’m away from the computer so can’t give you a proper answer right now, but you’re right - non colour data shouldn’t be interpreted - my guess is that linear might do the job, but worth checking.

I probably should have been clearer when writing the configuration and comments.

Currently there are only the bare minimum transforms listed. Blender doesn’t do any family filtering or such, so you get all transforms listed by default.

Float data is data; anything that doesn’t describe three lights / chromaticity / “colour”.

In terms of texture encodings, it’s a bit trickier. Technically, the encoding should describe the texture’s state. In many cases, sRGB is the wrong state, as they frequently were mastered on non-sRGB displays. See below for more nuanced information.

I likely should make two Displays, as this is the goal of the two options.

Display colourimetry describes both the colours of the primary lights and the display’s transfer functions. In a majority of cases, unless folks are on a moderately expensive display, the wager is that it is not an sRGB display. Why? Possibly cost, but that’s speculation.

On commodity sRGB-like hardware, the display has a pure 2.2 power function baked into the decoding hardware. That is, it receives the encoded values and decodes them with a power(RGB, 2.2) transfer function to get back to radiometric-ratio light output. That 2.2 power function disqualifies it as an sRGB display, since according to the specification, a “correct” sRGB display uses the two-part transfer function described there. For those seeking confirmation, given the less-than-ideal language of the specification PDF, this has been validated by recording secretary Jack Holm.
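The difference between the two decodings can be sketched as follows. The piecewise functions are the two-part sRGB transfer functions from IEC 61966-2-1; the pure power function is the commodity-display behaviour described above. The function names are made up for this sketch.

```cpp
#include <cassert>
#include <cmath>

// Decode an encoded value v in [0, 1] to relative light output using the
// two-part sRGB transfer function (linear toe, then a 2.4 power segment).
inline double srgb_piecewise_decode(double v) {
  return v <= 0.04045 ? v / 12.92 : std::pow((v + 0.055) / 1.055, 2.4);
}

// Decode with a pure 2.2 power function, as on commodity sRGB-like displays.
inline double power22_decode(double v) { return std::pow(v, 2.2); }

// Matching encodings (inverses of the decodings above).
inline double srgb_piecewise_encode(double L) {
  return L <= 0.0031308 ? L * 12.92 : 1.055 * std::pow(L, 1.0 / 2.4) - 0.055;
}
inline double power22_encode(double L) { return std::pow(L, 1.0 / 2.2); }
```

Both curves agree at 0 and 1 and stay close through the midtones, but near black the piecewise curve decodes to several times more light than a pure 2.2 power. This is where a mismatch between how an image was encoded and how it is decoded tends to show.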

For a majority of folks, use the sRGB-like commodity transfer function. If your display has a specific sRGB mode, use sRGB.

Same applies for encoding, as albedo encoding would have an impact on reflected light. The proper decoding will depend on how the image was encoded.

I’ll update the configuration to split the displays into two to make it more clear.

ADDENDUM: I’ve updated the configuration and included the Python generator for those interested. Appreciate testing by anyone here capable. If you can’t build, you can copy paste the config.ocio and the LUTs directory, with contents. The branch is located via this link. Thanks to @kram1032 for the question that led to peeling apart the display classes, as it makes for a much less confusing base to build on top of.

So this is the equivalent of “Non-Color”, then? Could you maybe name it the same as regular Blender if that’s the case? - Because right now I have to keep switching that option as I render the same scene in either version, since they mutually don’t know the other’s option.

Is that what CCTF stands for? And would there be a way of figuring out for sure which version is correct for a given screen? (I fully expect that you’re right in assuming my screen isn’t high end enough, but, like, “just in case”.)

Also, my primary goal was comparability. Is what regular Blender lists as sRGB the same as BT.709 2.2 CCTF Colourspace, or is it sRGB Colourspace? (By the way, now that I read that: you went with British spelling, which is inconsistent with Blender’s choices.)

PS: Can’t wait for that Spectral Filmic you’ve been teasing~

@smilebags can we have a build of that, please?

Are transparent shadows (i.e. filtered through a Transparent BSDF) included in that? Because right now those appear uncolored.

Spectral:

RGB:

(the gradient in that colored shadow isn’t a bug by the way. I tried out a variant on the surface absorption shader I posted above)

I suppose this is a good opportunity to integrate OCIO’s filename detection.

Specifically, it is named float because, according to OCIO, data can be transported in a number of different encodings. Given that spectral is a seismic shift, I figured it would be fine to start from a clean base for the time being.

It’s a good question.

First, CCTF stands for Colour Component Transfer Function. Second, there’s no easy way to determine what display type you have without a piece of hardware to measure the intensity of output. A light meter or colourimeter would be required I think. It’s plausible that a clever use of a DSLR with a raw encoding could work too I suppose.

The “Standard” view transform is the sRGB inverse EOTF. Filmic, on the other hand, went with the large numbers and is aimed at a pure 2.2, as most folks likely don’t have a higher end display.

Yeah it’s habit, sorry. Given only two or three folks are using it, didn’t leap out as a huge thing. Queen’s English and all…

If I weren’t such a meathead it would be done by now. I had to shift gears, as the original effort it was based on was built around a fixed, wider gamut. Spectral makes the entire spectral locus the target, so I ended up having to rethink things, and that led to the shorter-term goal of a reasonable set of wider-than-BT.709 RGB primaries to get up and running that also play nicely with spectral effects. It’s a huge kettle of fish: as you can see from your “WTF PURPLE?!?” tests, it drills right into gamut mapping and all sorts of other problems.

As folks have also noticed, the Flying Spaghetti Monster UI isn’t managed. That means that even though the working reference is somewhat close to proper D65 BT.709, values entered in the RGB picker go directly in as reference values. On the way out, they are then transformed out of the reference without ever having been transformed into it. Hence that numerical discrepancy.

TL;DR: The spectral effort puts the broken bits of Blender front and centre. I’m hoping some gradual development in Blender proper can make this easier.

Sorry I haven’t had time to work on this lately. Should have another build mid to late next week with the new config from Troy, if not some other improvement.