Starting to get real Programmer Art vibes, hah

Same material in basically the same scene except with natural lighting. It goes aggressively pink in sunlight.

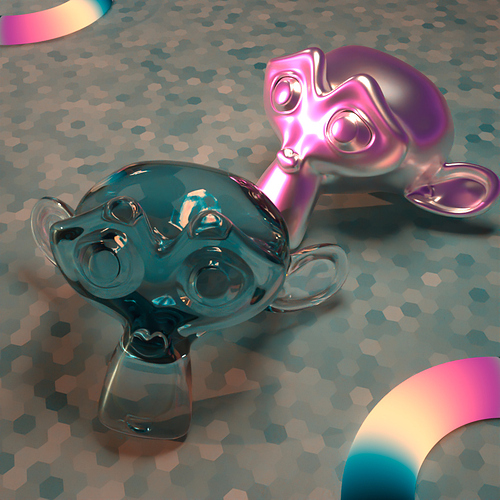

There's now also absorptive glass with the same spectrum, and not one but two toroidal emitters that show off the full range of colors possible with this spectrum.

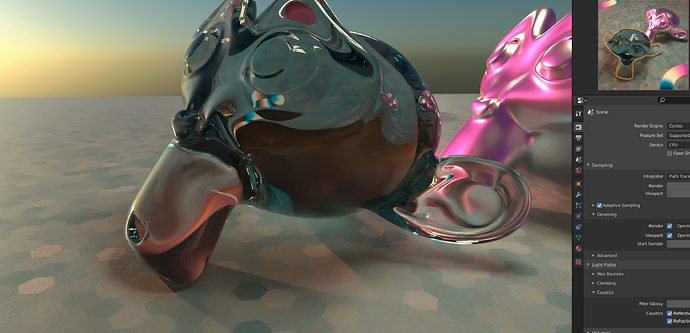

I also did a Filmic version.

sRGB

Filmic

In scenes like this, Filmic naturally has the advantage. Not surprising, of course.

Most interesting, I think, is still the metallic Suzanne and how extremely different it looks on the front, which reflects the sky, versus the back, which reflects either the floor (which also uses the same spectrum) or itself.

Note: the refractive Suzanne features suspiciously little pink. I think that happens because so much of the pink light has already been absorbed by the time it has passed through the glass toward the camera from that direction that effectively none of it remains visible.
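To make that absorption argument a bit more concrete, here's a rough sketch of how the color balance shifts with path length under the Beer-Lambert law, T(λ) = exp(-σ(λ)·d). The three wavelengths and their absorption coefficients are made up for illustration and are not the actual spectrum from the scene, and `transmittance` and `blue_to_red_ratio` are just hypothetical helper names.

```python
import math

# Hypothetical absorption coefficients per unit length at three sample
# wavelengths (nm). These values are invented for illustration only and
# are NOT the spectrum used in the renders.
ABSORPTION = {450: 0.36, 550: 0.69, 650: 0.11}


def transmittance(path_length):
    """Beer-Lambert law: fraction of light surviving at each wavelength."""
    return {wl: math.exp(-sigma * path_length) for wl, sigma in ABSORPTION.items()}


def blue_to_red_ratio(path_length):
    """How much blue is left relative to red after the given path length."""
    t = transmittance(path_length)
    return t[450] / t[650]


# Compare a short, a medium, and a long path through the glass.
for d in (0.5, 2.0, 10.0):
    t = transmittance(d)
    rounded = {wl: round(v, 4) for wl, v in t.items()}
    print(f"d={d}: {rounded}, blue/red={blue_to_red_ratio(d):.4f}")
```

With these assumed numbers, a thin slab still passes a mix of blue and red (which reads as pinkish), while a long path lets the least-absorbed band take over almost completely. Whether the real glass behaves the same way depends entirely on the actual spectrum, so treat this as a plausibility check rather than an explanation of the render.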

Viewed from a different direction, the refractive Suzanne ends up looking like this: