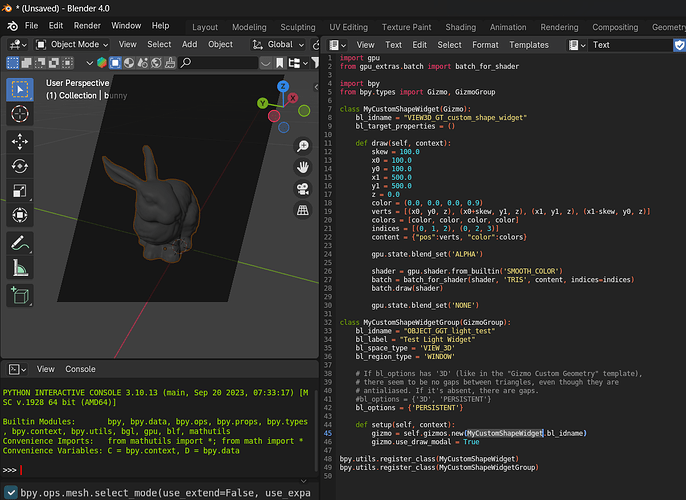

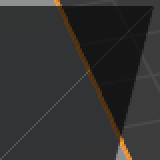

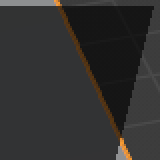

Sorry for bumping this thread again, but does an official solution exist for the problem of antialiased gaps between adjacent triangles? I get this jarring diagonal when trying to render a rectangle in the viewport:

(I should probably note that if blending is disabled, there seem to be no gaps, but the rectangle in question is supposed to have transparency in some cases)
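One plausible explanation, which is an assumption and not confirmed anywhere in this thread: if polygon smoothing turns per-pixel edge coverage into alpha, then a pixel on the shared diagonal gets blended twice at partial alpha and never reaches full opacity. That would also fit the observation that the gap disappears when blending is disabled. A minimal arithmetic sketch in plain Python (no Blender API involved; `blend_over` and `draw_quad_pixel` are hypothetical helper names):

```python
def blend_over(dst, src, alpha):
    """Classic alpha blend: src * alpha + dst * (1 - alpha), per channel."""
    return tuple(s * alpha + d * (1.0 - alpha) for s, d in zip(src, dst))


def draw_quad_pixel(background, color, coverages):
    """Blend one pixel once per triangle, using each triangle's coverage as alpha."""
    result = background
    for coverage in coverages:
        result = blend_over(result, color, coverage)
    return result


BLACK = (0.0, 0.0, 0.0)
WHITE = (1.0, 1.0, 1.0)

# Interior pixel: fully covered by exactly one of the two triangles.
interior = draw_quad_pixel(BLACK, WHITE, [1.0])

# Pixel on the shared diagonal: each triangle covers half of it (assumed 50/50 split).
diagonal = draw_quad_pixel(BLACK, WHITE, [0.5, 0.5])

print(interior)  # (1.0, 1.0, 1.0)
print(diagonal)  # (0.75, 0.75, 0.75), darker than its neighbours
```

Under this assumption, the two half-covered contributions add up to 75% rather than 100%, which would show up as a faint diagonal line across the rectangle.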

I faced this problem before. Can you send the code so I can investigate it again?

Huh, it actually appears that this problem manifests only in certain scenarios (e.g. when drawing 2D gizmos, or when rendering to GPUOffScreen). 3D gizmos (as in the “Gizmo Custom Geometry” template) do not seem to show any gaps, despite being drawn with alpha-blending and antialiasing. For that matter, POST_PIXEL callbacks do not have this problem either, but they also seem to have antialiasing disabled.

Which means that Blender internally implements mechanisms to control this behavior, but does not expose them to Python scripts, which can be a pretty critical omission in certain cases (especially the lack of control over whether antialiasing is allowed). Hopefully this can be remedied.

| 3D gizmo: | 2D gizmo: | POST_PIXEL: |
|---|---|---|
| | | |

Here’s a script to reproduce this behavior for a 2D gizmo:

gizmo_2d_gaps_example.txt (1.6 KB)

I see. Perhaps there’s a difference in our hardware? Regardless, it is still very important for Python scripts to have as much control over GPU state as Blender itself, since that would at least allow script developers to try to fix or work around such issues on their own. I’ve compared GPU_state.h and gpu.state, and here are the differences I found:

Critical (without control over these, some stuff is plainly impossible to do or fix, like in my case):

- GPU_line_smooth

- GPU_polygon_smooth

Important (could be hard to do without in some advanced scenarios):

- Stencil-related stuff: eGPUStencilTest, eGPUStencilOp, GPU_stencil_test, GPU_stencil_reference_set, GPU_stencil_write_mask_set, GPU_stencil_compare_mask_set, GPU_stencil_mask_get, GPU_stencil_test_get, GPU_write_mask(eGPUWriteMask.GPU_WRITE_STENCIL)

Nice-to-have (provide some convenience/optimization, but their absence is hardly a roadblock):

- GPU_provoking_vertex

- GPU_depth_range

Probably too niche to be of much use to scripts (at least without an explanation of how to work with them):

- eGPUBlend: GPU_BLEND_OIT, GPU_BLEND_BACKGROUND, GPU_BLEND_CUSTOM, GPU_BLEND_ALPHA_UNDER_PREMUL

- GPU_logic_op_xor_set (just XOR on its own is probably not as useful as a full set of operations… or maybe some way to do such calculations in compute shaders)

By the way, a somewhat related question: how hard would it be to make GPUOffScreen.draw_view3d() work on its own, without requiring the bpy.types.SpaceView3D.draw_handler_add(draw, (), 'WINDOW', 'POST_PIXEL') context?

Right now, the only way I can make it work for the purposes of on-demand custom 3D view rendering (for generating previews or for obtaining the depth under the mouse) is by abusing the wm.redraw_timer operator – which, I suspect, is a very suboptimal approach performance-wise, since it renders the entire UI region (or even the whole window), instead of a small rectangle that I actually need (in some cases, just 1 pixel).

An additional downside of having to resort to wm.redraw_timer is that Blender seems to crash if more than one bpy.ops.wm.redraw_timer() is invoked per frame.

I think I need the stencil set/test for a pretty simple scenario right now, unless you can suggest an alternative:

I’m drawing a plane in 3D space that’s translucent and fixed in place, along with 3D lines radiating out from the mouse position. I need the lines to be culled by depth against geometry in the Blender scene, but not against the plane. I can change the render order so the lines get drawn before the plane, but then the part of a line below the plane gets alpha-blended with the plane and changes color, whereas I’d like the line to stay the same color whether or not it has passed through the plane. Stencil is the only test I can think of that would let me skip blending the plane where the line is.
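To make the intended draw order concrete, here is a CPU-side sketch of what a single pixel would go through. It is plain Python, not Blender's GPU API (stencil state is listed above as not exposed in gpu.state), and the colors, depths, and function names are made up for illustration:

```python
LINE_COLOR = (1.0, 1.0, 0.0)
PLANE_COLOR = (0.0, 0.0, 1.0)
PLANE_ALPHA = 0.5
SCENE_COLOR = (0.2, 0.2, 0.2)


def blend_over(dst, src, alpha):
    """Classic alpha blend: src * alpha + dst * (1 - alpha), per channel."""
    return tuple(s * alpha + d * (1.0 - alpha) for s, d in zip(src, dst))


def shade_pixel(scene_depth, line_depth, plane_depth, use_stencil):
    """Simulate one pixel; smaller depth is closer, None means 'not covered'."""
    color = SCENE_COLOR
    stencil = 0

    # Pass 1: the line, depth-tested against scene geometry only.
    if line_depth is not None and line_depth < scene_depth:
        color = LINE_COLOR
        stencil = 1  # mark the pixel so the plane can skip it

    # Pass 2: the translucent plane, depth-tested against the scene and
    # (optionally) rejected wherever the line already wrote the stencil.
    if plane_depth is not None and plane_depth < scene_depth:
        if not (use_stencil and stencil == 1):
            color = blend_over(color, PLANE_COLOR, PLANE_ALPHA)

    return color


# Line passes behind the plane, both in front of the scene.
print(shade_pixel(1.0, 0.6, 0.5, use_stencil=False))  # (0.5, 0.5, 0.5)
print(shade_pixel(1.0, 0.6, 0.5, use_stencil=True))   # (1.0, 1.0, 0.0)
```

Without the stencil check, the line is tinted by the plane. With it, the line keeps its color, while scene geometry in front of the line still hides it.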

Hi!

I’m currently writing a Blender plugin that features a custom render engine, using the GPU API.

Overall the API was pretty easy to use, but there were a few issues and things missing:

There seems to be no control over texture samplers, specifically filtering and wrapping.

In my shader I have to do both special filtering and special wrapping, so I would want to have neither applied by default.

This looks possible in bgl, but not via the gpu API.

For buffers, there seems to be no support for SSBOs or any non-uniform storage in general besides textures.

Is there any support planned, especially since I saw that compute shaders are now possible?

Lastly, to render a mesh, I currently have to go through quite a bit of code to extract its buffers.

This forces me to cache models, and sometimes makes edit mode lag due to the necessary updates.

(Starting from here: f64render/mesh/mesh.py at c26050b4ce69d83ed8590afaba010aa3cb2e9bdb · HailToDodongo/f64render · GitHub)

Is there any easier way, such as helpers or direct integration with some internal data, that the GPU API can make use of?

At least when using EEVEE it always feels smooth.

(For context, this renderer is only used for the viewport, as it is part of a project for creating models to be used in games.)

Hi, welcome to the forum and thanks for your feedback.

Overall the API was pretty easy to use, but there were a few issues and things missing:

Is this something you think you can contribute yourself? There is no active development on the API, so the quickest way to get any of this changed would be with a design proposal followed by a patch.

I’ve been looking through Blender’s code for the GPU API, but I’m not sure yet what would need to be done.

While I can now avoid the texture issues, the only remaining real problem is mesh access (I hope that’s still related enough to the GPU API).

But I first need to understand the whole interaction with the renderer better before I could make a proposal.

For context, I’m working on a custom renderer for an existing project which is mainly for creating game assets, so I exclusively need viewport rendering.

The conversion of model data into flat buffers for a shader takes ~1ms for 1k triangles, forcing me to cache things heavily.

EEVEE, by contrast, seems to be responsive at all times, so I’m trying to understand whether that is an issue on my end or whether something could be done to make that access easier for the GPU API.

Hi!

I’d love to help out with the GPU module, mostly with how armatures and bones are drawn on macOS.

What would be the appropriate place to get started and become familiar with the module?

Hi @Zophiekat, your question is off-topic for this thread.

To help with macOS drawing issues regarding armatures and bones, I would suggest:

- Report these issues as bugs.

- Get the basics out of the way (compile Blender, look at the code a bit).

- Reach out on the #module-eevee-viewport:blender.org channel on chat.blender.org.

@Zophiekat, note that the issues you mentioned may have already been fixed in the Overlay Next PR: https://projects.blender.org/blender/blender/pulls/126474

Hi,

Could we get access to texture interpolation in GPUTexture? At least being able to choose between nearest and linear, to see those nice pixels. I can do pixelation in the shader, but then I have problems with transparency: black from the transparent area bleeds onto the half-transparent pixels around it…

Thanks
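The dark fringe described here looks like the usual side effect of linearly filtering a straight-alpha texture, though that is a guess about the cause. A common workaround, until nearest filtering is exposed, is to treat the filtered sample as premultiplied alpha when blending. The sketch below is plain Python arithmetic with hypothetical helper names, not Blender API:

```python
def lerp(a, b, t):
    """Linear interpolation between two tuples, as a bilinear filter does per axis."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def premultiply(rgba):
    r, g, b, a = rgba
    return (r * a, g * a, b * a, a)


def over_straight(dst_rgb, src_rgba):
    """Blend assuming straight alpha: src.rgb * a + dst * (1 - a)."""
    *rgb, a = src_rgba
    return tuple(c * a + d * (1.0 - a) for c, d in zip(rgb, dst_rgb))


def over_premultiplied(dst_rgb, src_rgba):
    """Blend assuming premultiplied alpha: src.rgb + dst * (1 - a)."""
    *rgb, a = src_rgba
    return tuple(c + d * (1.0 - a) for c, d in zip(rgb, dst_rgb))


OPAQUE_RED = (1.0, 0.0, 0.0, 1.0)
TRANSPARENT_BLACK = (0.0, 0.0, 0.0, 0.0)
WHITE_BG = (1.0, 1.0, 1.0)

# Sample halfway between an opaque red texel and a fully transparent one.
straight_sample = lerp(OPAQUE_RED, TRANSPARENT_BLACK, 0.5)
premult_sample = lerp(premultiply(OPAQUE_RED), premultiply(TRANSPARENT_BLACK), 0.5)

print(over_straight(WHITE_BG, straight_sample))      # (0.75, 0.5, 0.5): darkened fringe
print(over_premultiplied(WHITE_BG, premult_sample))  # (1.0, 0.5, 0.5): clean half-red
```

In this example the filtered value is the same in both cases; the fringe comes from multiplying by alpha a second time during blending. In Blender, the matching blend state should be `gpu.state.blend_set('ALPHA_PREMULT')`, with the texture data premultiplied before upload.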

Would you consider providing a way to access a given 3D viewport’s GPU framebuffer?

The current gpu.state.active_framebuffer_get() is too limited: it can only get the framebuffer of a specific 3D viewport when called inside a draw handler, which is quite limiting if you are writing tools or operators that need to read the depth buffer, for example.