I have a suggestion for seriously reducing noise when using branched path tracing in Cycles. It involves switching from Monte Carlo methods to deterministic methods for choosing the directions of the indirect rays sent out when a ray bounces. The implementation in Cycles should be relatively easy (depending on the code). This method, which I outline below, could potentially give low-noise renders with as few as 5 diffuse samples per ray bounce.

First of all, the standard in renderers is to use Monte Carlo methods both for deciding which direction to send rays after a camera ray strikes a surface in the world and for sampling different parts of a pixel (for anti-aliasing). My method only changes the directions in which the bounce rays are sent out. Now, when a ray is sent out from the camera (or bounces off something) and strikes an object, several things usually happen:

- Rays are sent out from the bounce point towards lights to see if the point can see the light.

- A ray might be reflected or transmitted, depending on the material.

- Several rays are sent out in random directions to estimate how much indirect light reaches the point.

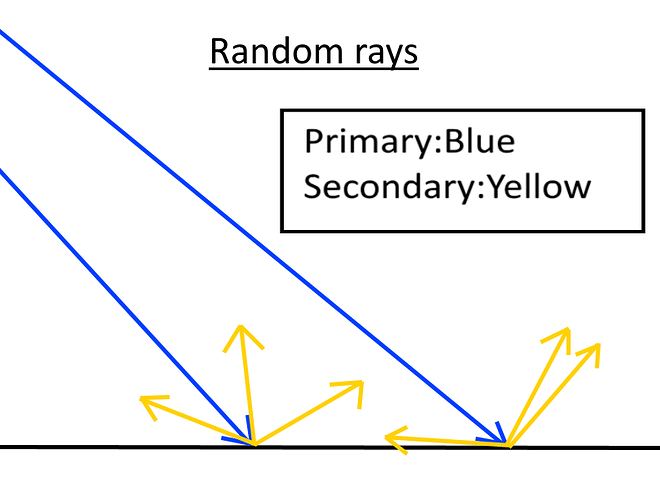

The last one is what causes a lot of noise in renders, as the diagram above makes clear.

As you can see, two rays (sent from adjacent pixels) strike a surface and send out secondary rays to calculate indirect illumination (i.e. light that does not come directly from lights). With Monte Carlo methods, the secondary rays are sent out randomly. There is a very high chance that the rays from one point will strike completely different areas than the rays from the other point, giving the two points very different lighting values. This shows up as grainy noise in the final render. When this happens, the usual solution is to increase the number of rays sent out from each point (which slows down the render), but fortunately there is a better way.
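To make the noise problem concrete, here is a rough toy sketch in Python (not Cycles code). The scene is an assumption made up for illustration: `toy_incoming_light` pretends that only a small cap of the hemisphere around the normal is bright. `estimate_indirect` averages a handful of randomly chosen directions, which is roughly what happens at each bounce point.

```python
import math
import random


def cosine_sample_hemisphere(rng):
    """Random direction around +Z with density proportional to cos(theta)."""
    u1, u2 = rng.random(), rng.random()
    r = math.sqrt(u1)
    phi = 2.0 * math.pi * u2
    return (r * math.cos(phi), r * math.sin(phi), math.sqrt(1.0 - u1))


def toy_incoming_light(direction):
    """Hypothetical scene: only directions close to the normal see light."""
    return 1.0 if direction[2] > 0.8 else 0.0


def estimate_indirect(rng, num_rays):
    """Average the light seen along num_rays random directions."""
    total = 0.0
    for _ in range(num_rays):
        total += toy_incoming_light(cosine_sample_hemisphere(rng))
    return total / num_rays


if __name__ == "__main__":
    # Two adjacent points, each drawing its own 5 random directions.
    point_a = estimate_indirect(random.Random(1), 5)
    point_b = estimate_indirect(random.Random(2), 5)
    print(point_a, point_b)
```

With only 5 rays each point can only land on 0, 0.2, 0.4, and so on, while the true value for this toy scene is 0.36, so neighbouring points that use different random directions can easily end up with visibly different brightness.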

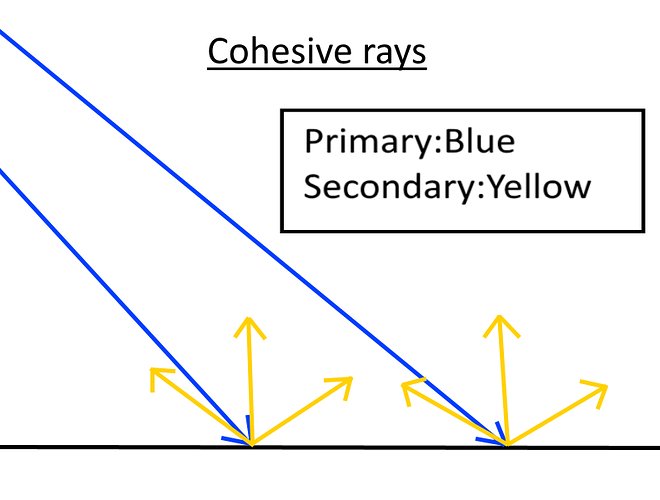

Instead of sending out rays randomly, send them out in a deterministic, regular, evenly spaced pattern. This pattern would be globally oriented, meaning that whenever two rays strike two points that are close to each other, the rays sent out from the two points follow similar paths. The diagram above illustrates this.

With this method, the chances of the indirect rays striking the same areas are greatly improved, causing the two points to have similar lighting. How to decide the pattern though? There are undoubtedly many smarter people than me who have come up with clever ways to evenly distribute points over a hemisphere, so I’ll just give a brief suggestion here.
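As an example of the existing approaches I mean, one well-known way to spread points evenly over a hemisphere is the Fibonacci (golden-angle) spiral. The sketch below is only an illustration of that known technique in Python, not the pattern I am proposing and not anything taken from Cycles; the function name `fibonacci_hemisphere` is made up.

```python
import math


def fibonacci_hemisphere(num_rays):
    """Deterministic, roughly evenly spaced unit directions around +Z."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    directions = []
    for i in range(num_rays):
        # Heights are spaced evenly in (0, 1), which gives equal-area bands.
        z = 1.0 - (i + 0.5) / num_rays
        r = math.sqrt(max(0.0, 1.0 - z * z))
        phi = i * golden_angle
        directions.append((r * math.cos(phi), r * math.sin(phi), z))
    return directions


if __name__ == "__main__":
    for d in fibonacci_hemisphere(8):
        print(d)
```

Because there is no randomness, calling it twice with the same count always gives exactly the same directions, which is the property this whole idea relies on.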

The first ray should point straight up (i.e. along the normal), and the next 4 should point in the four directions of the compass (N, E, S, W) while being angled 45° away from the first ray. The rest would then be distributed procedurally around these 5 rays. One idea would be to add “levels”, where each level has several evenly distributed rays that are added to the existing rays when that level is reached (that might be too complicated for users though). The pattern would only need to be generated at the start and whenever the user changes the number of indirect rays. The problem of exactly how to orient the pattern to the normal seems fairly easy, but I haven’t figured out the exact solution yet, so I’ll leave that to the more experienced people out there.
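Here is a minimal Python sketch of the first 5 rays and of one possible way to orient them to the normal. The orientation part is purely an assumption for illustration: it builds the tangent frame from a fixed world axis so that nearby points with similar normals get similar ray directions. All function names are hypothetical and none of this is Cycles code.

```python
import math


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def base_pattern():
    """Local directions: the normal (+Z) plus N, E, S, W tilted 45 degrees."""
    s = math.sin(math.radians(45.0))
    c = math.cos(math.radians(45.0))
    return [(0.0, 0.0, 1.0),
            (0.0, s, c),    # N
            (s, 0.0, c),    # E
            (0.0, -s, c),   # S
            (-s, 0.0, c)]   # W


def basis_from_normal(normal):
    """Tangent frame tied to a fixed world axis (assumed: world +Z).

    "North" becomes world +Z projected onto the surface. The frame jumps
    when the normal is almost parallel to +Z, where world +X is used instead.
    """
    n = normalize(normal)
    ref = (0.0, 0.0, 1.0) if abs(n[2]) < 0.999 else (1.0, 0.0, 0.0)
    t = normalize(cross(ref, n))
    b = cross(n, t)
    return t, b, n


def orient(pattern, normal):
    """Rotate the local pattern so that its +Z axis follows the normal."""
    t, b, n = basis_from_normal(normal)
    return [tuple(d[0] * t[i] + d[1] * b[i] + d[2] * n[i] for i in range(3))
            for d in pattern]


if __name__ == "__main__":
    for d in orient(base_pattern(), (0.0, 1.0, 0.0)):
        print(d)
```

The sudden change of frame near the fixed axis is one place where the artifacts mentioned below could show up, so a real implementation would probably need something smarter there.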

This method is not perfect and could cause artifacts in certain cases, but for people willing to work around them the speed-up would be huge. I look forward to seeing it implemented. Any criticism, hints or comments are welcome. Thank you for your time and have a wonderful day.