I know it’s not the fix you want, but something a little quicker than disconnecting and reconnecting would be to just mute the node with the M hotkey.

Not at the moment. We did look into it early on but didn’t agree on anything rigorous.

Until we add similar functionality, I hope GPU-accelerated denoising will land soon to alleviate this issue to some extent.

The problem with that is that it requires a refactor of the scheduling and evaluation mechanism to support skipping the computation of dead branches. That is, if a Mix node has a mix factor of 0, the nodes connected to its second input would not be computed. This is planned, but not in the near future.

I think this is still done by the shader editor system as well. I assume the big refactor they have planned in that area might also include the compositor nodes, since they’re looking to make the behavior similar to Geometry Nodes, right?

I think the compositor currently also implements what you describe as being done by the shader system, but the compositor is a more complex system. Essentially, nodes in the compositor are either “pixel-wise” operations, like the Mix node, or “buffered” operations, like the Denoise node. Pixel-wise operations use the same system as the shader editor, so pixel-wise operations in dead branches might be skipped: the system simply inserts a big if statement around their code.
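To make the pixel-wise case concrete, here is a minimal Python sketch of the "big if statement" idea, assuming the code of the pixel-wise nodes feeding the Mix node's second input can be represented as a callable. `mix_pixel` and `second_branch` are hypothetical names for illustration only; this is not Blender's actual generated shader code.

```python
from typing import Callable, List, Tuple

Pixel = Tuple[float, float, float]


def mix_pixel(factor: float, first: Pixel,
              compute_second: Callable[[], Pixel]) -> Pixel:
    """Per-pixel Mix where the second input's upstream code is guarded."""
    # The "big if statement": the (hypothetical) inlined code for the
    # pixel-wise nodes feeding the second input only runs when it can
    # affect the output.
    if factor == 0.0:
        return first
    second = compute_second()
    return tuple((1.0 - factor) * a + factor * b
                 for a, b in zip(first, second))


# Stand-in for the pixel-wise nodes on the second branch; records each run.
calls: List[int] = []


def second_branch() -> Pixel:
    calls.append(1)
    return (1.0, 1.0, 1.0)


print(mix_pixel(0.0, (0.2, 0.4, 0.6), second_branch), len(calls))
# (0.2, 0.4, 0.6) 0
print(mix_pixel(0.5, (0.0, 0.0, 0.0), second_branch), len(calls))
# (0.5, 0.5, 0.5) 1
```

Because the guard sits inside the per-pixel code, nothing about the scheduling has to change, which is why this only works for operations that can be fused into the same shader.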

But buffered operations are a different story. The scheduler needs to schedule predicate nodes first, and then the evaluator needs to dynamically skip dead branches at runtime. Furthermore, most nodes with a predicate are actually pixel-wise, so scheduling them differently to put predicate branches first might lead to smaller shaders, more memory bandwidth, and slower performance. And there are probably a plethora of other things that might make this more complicated. So it is not something I intend to implement in the near future.
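The predicate-first scheduling for buffered operations can be illustrated with a toy demand-driven evaluator. This is a sketch under simplifying assumptions: images are stand-in floats, the Mix node is the only predicate node, and `Node`, `evaluate`, and `is_mix` are hypothetical names, not Blender's scheduler or evaluator.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Node:
    # Names of the nodes feeding this node's inputs, in order.
    inputs: List[str] = field(default_factory=list)
    # Computes the output from evaluated inputs (unused for Mix nodes).
    compute: Optional[Callable[..., float]] = None
    # Mix nodes take inputs (factor, first, second) and act as predicates.
    is_mix: bool = False


def evaluate(graph: Dict[str, Node], name: str,
             cache: Dict[str, float], executed: List[str]) -> float:
    """Demand-driven evaluation that skips dead branches of Mix nodes."""
    if name in cache:
        return cache[name]
    node = graph[name]
    if node.is_mix:
        # Evaluate the predicate (the factor) before anything else...
        factor = evaluate(graph, node.inputs[0], cache, executed)
        # ...then decide at runtime which branches are actually live.
        if factor == 0.0:
            result = evaluate(graph, node.inputs[1], cache, executed)
        elif factor == 1.0:
            result = evaluate(graph, node.inputs[2], cache, executed)
        else:
            first = evaluate(graph, node.inputs[1], cache, executed)
            second = evaluate(graph, node.inputs[2], cache, executed)
            result = (1.0 - factor) * first + factor * second
    else:
        args = [evaluate(graph, dep, cache, executed) for dep in node.inputs]
        result = node.compute(*args)
    executed.append(name)
    cache[name] = result
    return result


graph = {
    "factor": Node(compute=lambda: 0.0),
    "render": Node(compute=lambda: 0.8),
    # Stand-in for an expensive buffered operation such as Denoise.
    "denoise": Node(inputs=["render"], compute=lambda x: x * 0.5),
    "mix": Node(inputs=["factor", "render", "denoise"], is_mix=True),
}
executed: List[str] = []
print(evaluate(graph, "mix", {}, executed))  # 0.8
print(executed)  # ['factor', 'render', 'mix']
```

Note that the evaluation order now depends on a runtime value, so it can no longer be fixed up front. That is the cost described above: nodes that would otherwise be fused into one shader end up evaluated separately.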

And to be honest, I am against making the compositor behave like Geometry Nodes. There are things that we definitely want, like unified UI concepts and common nodes, such as Math and Texture, that are the same in both systems so that node assets can be shared between them. But fields, for instance, are not something that I like, so I am not sure this is the direction we will be heading.

There still needs to be something in place to allow making node groups, right? I thought that meant having to distinguish between single values and arrays of values.

What I meant by fields is delaying evaluation until a “control flow” node. Of course node groups and distinction between single values and images will be supported.

Alright, I understand

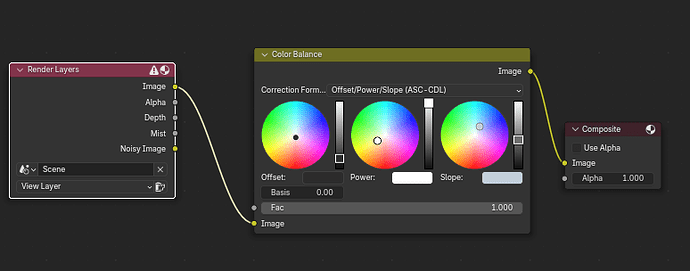

Blender is crashing a LOT whenever I add a node in between two others while the live compositor is enabled. Usually it’s color adjustment nodes (balance/contrast/saturation). My compositor setup couldn’t be simpler, and yet adding a single node to it crashes pretty much every single time.

Please report a bug for those crashes.

Hey mate, sorry this is going to sound like a wish list:

I have been talking to people around town from different studios and deep compositing keeps coming up.

Are there any plans for this? Refresher: https://youtu.be/ySI-8Ns-6k0?si=omlFu4Io2C08ugQH

Also, will the new compositor be compatible with OpenFX? I remember bringing this up years ago. https://openfx.org/

There are no immediate plans for deep compositing, but it is something that we are bound to support in the future, and the new compositor architecture we are working on will make it possible.

OpenFX is an anti-feature for Blender as far as I know, so we have no intention of ever supporting it. But providing a Python API for compositor nodes, to allow authors to create add-ons for the compositor, seems possible, and we could take that route instead.

Could you explain what you mean about OpenFX being an anti-feature for Blender? Seems like a natural fit as it is managed by the Linux Foundation and so I was surprised to hear that. Just wanting to understand.

@blaqkshadow It is true that OFX is now managed by ASWF, but Blender was actually against its inclusion in the first place, see Ton’s reasoning in the following discussion, which should also answer your question regarding it being an anti-feature:

Ah, I see! That makes sense. Thank you very much!

Does anybody have a small image/video demonstration of multi-pass viewport compositing for the release notes?

I have this x.com and this x.com as well as some other experiments. Is there anything specific you want to highlight? I can render out some prettier demos this afternoon.

Thanks!

@homspau Both look great, thanks. It would be great if it were shorter, though, maybe under 10 seconds or so.

So if you manage to shorten the Amy scene to just show the Blender interface while you go over the relevant effects (like at 0:25, 0:37, and 0:42), that would be great. You can attach an mp4 here and I will upload it to the release notes.

On it. I’ll do it this afternoon (PST). Thanks!

Hi Omar,

I hope this works. It’s not 10 seconds; if you feel we can go shorter, I’ll do my best and cut a thing or two. Let me know if anything else is needed!

Thanks for your work!

Thank you @homspau! I added the video to the release notes.