The ideal version of this would really be a dedicated matrix socket type, so you could pass conversions along rather than selecting them from a list. That might be out of scope, though.

One thing I’d especially love is much more extensive node parity between geometry, shader, and compositing nodes.

For instance, why isn’t there a Vector Math node in the compositor?

Some of that can be done with Mix nodes, but quite often I find myself splitting up a color, applying the exact same math function to each of its components, and combining them again afterwards. That is exactly what a single Vector Math node would do.
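To make the split/apply/combine point concrete, here is a rough Python sketch (plain tuples standing in for node sockets; the function names are hypothetical, not Blender API) of what a Separate Color → three Math nodes → Combine Color chain computes, versus what a component-wise Vector Math node would compute in one step:

```python
def split_apply_combine(color, func):
    """Mimics Separate Color -> one Math node per channel -> Combine Color."""
    r, g, b = color                      # Separate Color
    return (func(r), func(g), func(b))   # three identical Math nodes, then Combine Color


def vector_math(color, func):
    """Mimics a single Vector Math node applying func to every component."""
    return tuple(func(c) for c in color)


def power_2_2(c):
    # Example operation: raise a (non-negative) channel value to the power 2.2
    return c ** 2.2


color = (0.5, 0.25, 1.0)
print(split_apply_combine(color, power_2_2) == vector_math(color, power_2_2))  # prints True
```

Both produce the same result; the difference is one node instead of five.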

And if there were a matrix socket type, it would no doubt be brilliant to have in both the compositor, for advanced color space manipulations, and geometry nodes, for advanced 3D space manipulations.

Also useful, especially for a future in which Blender uses wider working color spaces:

- every node in the compositor and shader editor alike that outputs an RGB value or has a color swatch should have a dropdown menu, like image textures do, for selecting which color space that RGB value is to be interpreted in. The value would then always be converted to the scene linear role accordingly.

- trickier: the associated color picker should probably display colors in that same space, so that what you see is what you get. That means color managing the picker accordingly.

- for similar reasons, it might be nice to have the Convert Colorspace node available in shader nodes as well.
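As a minimal sketch of what such a per-swatch dropdown would do, assuming the scene linear role is linear Rec.709 (Blender’s default) and offering only two hypothetical options: a swatch interpreted as sRGB only needs its transfer curve undone, since its primaries already match scene linear. Any space with different primaries would additionally need a 3×3 matrix.

```python
def srgb_to_linear(c: float) -> float:
    """Decode one sRGB-encoded channel using the standard piecewise sRGB curve."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def swatch_to_scene_linear(rgb, interpret_as="sRGB"):
    """Hypothetical dropdown: convert a swatch value to scene linear (assumed linear Rec.709)."""
    if interpret_as == "sRGB":
        # Same primaries as linear Rec.709, so only the transfer function is removed
        return tuple(srgb_to_linear(c) for c in rgb)
    if interpret_as == "Linear Rec.709":
        # Already scene linear under our assumption: pass through unchanged
        return tuple(rgb)
    raise ValueError(f"unsupported color space: {interpret_as}")


print(swatch_to_scene_linear((1.0, 0.0, 0.5)))
```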

Anyway, in terms of colorspaces, what I’d really like to be able to do is:

- select three primaries and a whitepoint in XYZ space

- get back a pair of linear transformations derived from those primaries and that whitepoint: RGB → XYZ and its inverse, XYZ → RGB

The math for that can be found here:

http://www.brucelindbloom.com/index.html?Eqn_RGB_XYZ_Matrix.html
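For reference, the method on that page boils down to the following, assuming the primaries are given as xy chromaticities $(x_i, y_i)$ and the whitepoint as $(X_W, Y_W, Z_W)$ with $Y_W = 1$. The scale factors $S_i$ ensure that RGB $(1, 1, 1)$ maps exactly to the whitepoint:

$$
\begin{aligned}
X_i &= \frac{x_i}{y_i}, \quad Y_i = 1, \quad Z_i = \frac{1 - x_i - y_i}{y_i}, \qquad i \in \{r, g, b\} \\
\begin{bmatrix} S_r \\ S_g \\ S_b \end{bmatrix} &= \begin{bmatrix} X_r & X_g & X_b \\ Y_r & Y_g & Y_b \\ Z_r & Z_g & Z_b \end{bmatrix}^{-1} \begin{bmatrix} X_W \\ Y_W \\ Z_W \end{bmatrix} \\
M &= \begin{bmatrix} S_r X_r & S_g X_g & S_b X_b \\ S_r Y_r & S_g Y_g & S_b Y_b \\ S_r Z_r & S_g Z_g & S_b Z_b \end{bmatrix}, \qquad
\begin{bmatrix} X \\ Y \\ Z \end{bmatrix} = M \begin{bmatrix} R \\ G \\ B \end{bmatrix}, \qquad
\begin{bmatrix} R \\ G \\ B \end{bmatrix} = M^{-1} \begin{bmatrix} X \\ Y \\ Z \end{bmatrix}
\end{aligned}
$$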

And if we had matrix inversion and matrix multiplication within Blender’s various node systems, this could be built manually.

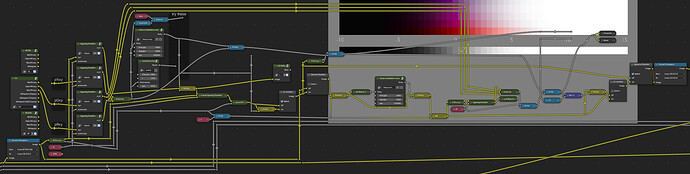
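With just inversion and multiplication available, the whole construction is only a few lines. Here is a pure-Python sketch (no Blender API; all names are hypothetical) that builds RGB → XYZ from chromaticities and a whitepoint, inverts it, and composes two spaces into a single RGB → RGB conversion. It assumes both spaces share the same whitepoint, so no chromatic adaptation is performed.

```python
def mat_mul(a, b):
    """3x3 matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def mat_vec(m, v):
    """3x3 matrix times 3-vector."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def mat_inv(m):
    """Inverse of a 3x3 matrix via adjugate / determinant."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("matrix is singular")
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]


def rgb_to_xyz_matrix(primaries, white):
    """primaries: [(x_r, y_r), (x_g, y_g), (x_b, y_b)]; white: (X_W, Y_W, Z_W)."""
    # XYZ of each primary with Y normalized to 1; these become the columns of p
    cols = [(x / y, 1.0, (1.0 - x - y) / y) for x, y in primaries]
    p = [[cols[j][i] for j in range(3)] for i in range(3)]
    # Per-primary scale so that RGB (1, 1, 1) lands exactly on the whitepoint
    s = mat_vec(mat_inv(p), list(white))
    return [[p[i][j] * s[j] for j in range(3)] for i in range(3)]


def conversion_matrix(src_rgb_to_xyz, dst_rgb_to_xyz):
    """RGB(src) -> XYZ -> RGB(dst) folded into one matrix: inv(M_dst) @ M_src."""
    return mat_mul(mat_inv(dst_rgb_to_xyz), src_rgb_to_xyz)


if __name__ == "__main__":
    SRGB = [(0.64, 0.33), (0.30, 0.60), (0.15, 0.06)]
    D65 = (0.95047, 1.0, 1.08883)
    m = rgb_to_xyz_matrix(SRGB, D65)
    for row in m:
        print([round(v, 7) for v in row])
```

With the sRGB primaries and the D65 white above, this should closely reproduce the sRGB → XYZ matrix listed on Lindbloom’s page (first row roughly 0.4124564, 0.3575761, 0.1804375). Every parameter here could be a live node input, which is the whole point.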

Right now you could technically build this by calculating the necessary matrix outside of Blender and then either registering it with OCIO (making it available in the Convert Colorspace node) or manually building a monstrosity of nodes that performs the matrix multiplication entry by entry.

Option 1 is not dynamic, so you can’t creatively adjust what you did on the fly.

Option 2 is technically dynamic, but in a rather useless, unintuitive, and likely slow way.
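For the OCIO route, the 3×3 result has to be written into the config as a MatrixTransform, which, as far as I know, expects a 4×4 row-major matrix given as 16 values. A small hypothetical helper for padding the 3×3 out (alpha passed through, no offset) might look like this:

```python
def ocio_matrix_transform(m3):
    """Pad a 3x3 matrix to a 4x4 row-major, 16-value MatrixTransform line for an OCIO config."""
    flat = []
    for row in m3:
        flat.extend(row)
        flat.append(0.0)                # alpha does not feed into R, G or B
    flat.extend([0.0, 0.0, 0.0, 1.0])  # alpha row: passes alpha through unchanged
    values = ", ".join(f"{v:.7g}" for v in flat)
    return f"!<MatrixTransform> {{matrix: [{values}]}}"


print(ocio_matrix_transform([[1, 0, 0], [0, 1, 0], [0, 0, 1]]))
```

That line then still has to be pasted into the config and Blender restarted, which is exactly the non-dynamic part.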

With some basic matrix functionality, you could rebuild essentially everything AgX does from within the compositor, making it possible to create a dynamically adjustable version of AgX as a custom color management and grading chain.