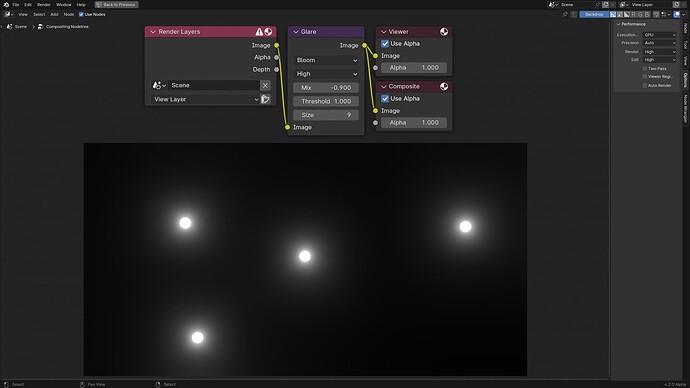

We added a new mode to the Glare node called Bloom, which you are probably familiar with from EEVEE.

- This is implemented for both CPU and GPU, so you should be able to get identical results.

- It is somewhat similar to the Fog Glow mode, except that it is significantly faster to compute and has a smoother falloff and greater spread.

- It is currently not “energy conserving”, so you should probably use a Mix factor closer to -1, which weights the result toward the original image. We plan to address that in the future.

- Bloom was previously used as a temporary stand-in for Fog Glow in the GPU compositor, so Fog Glow is once again unimplemented for GPU.

- The plan is to replace Fog Glow with a faster, more physically accurate implementation for both CPU and GPU.
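
As a rough illustration of the notes above, the following sketch adds a Glare node in Bloom mode from Python and sets a Mix factor near -1. It assumes the Python API of this release, where `CompositorNodeGlare` exposes `glare_type` and `mix` as node properties; later versions may expose them differently. The helper name `add_bloom_glare` and the default value `-0.9` are hypothetical, chosen only for the example.

```python
def add_bloom_glare(node_tree, mix=-0.9):
    """Add a Glare node set to Bloom to a compositor node tree.

    Assumption: the node exposes `glare_type` and `mix` as properties.
    The default mix of -0.9 is an illustrative value, not a recommendation
    from the release notes beyond "closer to -1".
    """
    # The Mix factor ranges from -1 (original image only) to 1 (glare only).
    if not -1.0 <= mix <= 1.0:
        raise ValueError("mix must be between -1 and 1")

    glare = node_tree.nodes.new(type="CompositorNodeGlare")
    glare.glare_type = "BLOOM"
    glare.mix = mix
    return glare


# Usage inside Blender (not runnable outside it):
#   import bpy
#   scene = bpy.context.scene
#   scene.use_nodes = True
#   add_bloom_glare(scene.node_tree)
```

The node still has to be linked between an image source and the Composite output, which is left out here to keep the sketch short.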