Can each camera have its own output resolution as well?

That would be awesome, right now you can only hack your way around with scripting.

I think that a lot of settings that are in Render and output tabs could be considered to become camera settings instead… Like color management, resolution, motion blur (Per camera ISO, Shutter speed, Aperture size), even frame range maybe (So the frame ranges of different cameras can overlap and output different files of different angles for the same frames), so on…

Resolution - most definitely.

I worked on an arch viz project some years ago and had to render the same model from various angles with many cameras. Some renders had to be one size and aspect ratio, others had to be different… I set up each frame to select a different camera, so I could render it all at once overnight with Ctrl+F12 and just go to sleep, but I had to manually input the resolution for each render frame, which really sucked.
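For what it’s worth, the scripting workaround for this kind of setup can be sketched roughly as below. This is only a sketch under assumptions: the camera names and sizes in the table are made up, and the demo uses a stand-in scene object so it runs outside Blender. Inside Blender, the same function could be appended to `bpy.app.handlers.frame_change_pre`, though whether a render already in progress picks up the changed size is something to verify in your version.

```python
from types import SimpleNamespace

# Hypothetical lookup table: camera object name -> (width, height) in pixels.
CAMERA_RESOLUTIONS = {
    "Cam_Wide": (1920, 1080),
    "Cam_Portrait": (1080, 1350),
}


def apply_camera_resolution(scene, table=CAMERA_RESOLUTIONS):
    """Set the scene's render size from the active camera's table entry.

    Returns True if an entry was found and applied, False otherwise.
    """
    camera = scene.camera
    if camera is None or camera.name not in table:
        return False
    width, height = table[camera.name]
    scene.render.resolution_x = width
    scene.render.resolution_y = height
    return True


# Inside Blender this could be hooked up along these lines (not run here):
#   import bpy
#   bpy.app.handlers.frame_change_pre.append(
#       lambda scene, *args: apply_camera_resolution(scene))

# Stand-in scene so the sketch also runs outside Blender.
demo_scene = SimpleNamespace(
    camera=SimpleNamespace(name="Cam_Portrait"),
    render=SimpleNamespace(resolution_x=1920, resolution_y=1080),
)
apply_camera_resolution(demo_scene)
print(demo_scene.render.resolution_x, demo_scene.render.resolution_y)
```

Cameras that are missing from the table simply leave the current resolution untouched.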

An alternative solution would be to animate the resolution, not just camera changes, but resolution animation is disabled.

On “Blender Today”, Pablo said that the ability to animate resolution was disabled because it would corrupt video files. But I think that’s a bad solution.

For starters: It’s highly recommended not to output a video file directly, and render each frame one by one as images, and connect them later. So, why have video output as an option at all? Instead, there could be a simple built-in tool to connect image sequences…

But if that’s not an option, the videos wouldn’t get corrupted if the resolution differences between frames are fixed with black bars.
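To make the black-bar idea concrete, here is a small sketch of the arithmetic. It assumes the simplest approach: the video canvas is as wide as the widest frame and as tall as the tallest one, and each frame is centered without scaling. The function name is made up for illustration and this is not how Blender does anything today.

```python
def black_bar_padding(frame_sizes):
    """Compute a common canvas and per-frame black-bar padding.

    frame_sizes: list of (width, height) tuples in pixels.
    Returns ((canvas_w, canvas_h), paddings), where each padding is
    (left, right, top, bottom) in pixels for the matching frame.
    """
    canvas_w = max(w for w, _ in frame_sizes)
    canvas_h = max(h for _, h in frame_sizes)
    paddings = []
    for w, h in frame_sizes:
        extra_w = canvas_w - w
        extra_h = canvas_h - h
        left = extra_w // 2
        top = extra_h // 2
        # Any odd leftover pixel goes to the right / bottom bar.
        paddings.append((left, extra_w - left, top, extra_h - top))
    return (canvas_w, canvas_h), paddings


canvas, bars = black_bar_padding([(1920, 1080), (1080, 1350)])
print(canvas)  # (1920, 1350)
print(bars)    # [(0, 0, 135, 135), (420, 420, 0, 0)]
```

Every frame then ends up at exactly the canvas size, which is what the video encoder needs.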

And yet, a simple warning text for animated resolutions could be enough… The inability to animate resolution is a far bigger issue than the edge case of possibly getting a corrupted video…

Are you talking about something like render region?

if (output type is one of [all video formats] AND scene resolution has keyframes) then {
    show_user_warning("Output video types are incompatible with animated scene resolution values. Videos need all frames to be exactly the same size.")
}
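The check above could look something like this in Python. It’s a sketch, not Blender code: the format identifiers in the set are assumed to match Blender’s movie output formats and may differ between versions, and `resolution_warning` is a made-up helper name.

```python
# Assumed identifiers for Blender's movie output formats; verify for your version.
VIDEO_FORMATS = {"FFMPEG", "AVI_JPEG", "AVI_RAW"}


def resolution_warning(file_format, resolution_is_animated):
    """Return a warning string if a video output is combined with
    keyframed resolution, otherwise None."""
    if file_format in VIDEO_FORMATS and resolution_is_animated:
        return (
            "Output video types are incompatible with animated scene "
            "resolution values. Videos need all frames to be exactly "
            "the same size."
        )
    return None


print(resolution_warning("FFMPEG", True))
print(resolution_warning("PNG", True))  # None: image sequences are fine
```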

… however… I’ve used Lossless Cut to join together a dozen different video files of wildly different sizes and formats.

VLC on my laptops can play them just fine. Doesn’t work in VLC on phones/tablets/amazon firesticks.

That’s more due to desktop-VLC ability to digest almost anything. Videos with variable frame size are definitely non-standard

Is there any info on whether we can import LUTs into the real-time compositor soon and use them for even more real-time artistic decisions?

Thank you!

I made a Right click select post a while ago about color grading. Vote up:

I’m interested too, waiting for an answer.

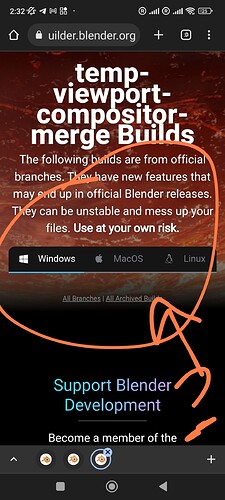

It’s already in master, just download the latest alpha and it’s there.

Oh, wow, what a fast response.

Thank U a lot. Highly appreciate it=)

To double down on the fixed effect shaders like bloom and other standard techniques from before…

I created my own, somewhat more deliberate bloom effect with the realtime compositor. To get a bloom similar to EEVEE’s, I have to blur the image strongly, about 400 pixels wide. On my RTX 3060 that costs around 50% GPU usage for the blur node alone in the realtime compositor, versus 1 to 2% with EEVEE bloom.

So maybe you guys could still consider performance-optimized, ready-to-use shaders? And/or a code node.

For the real-time compositor, a really great addition would be showing the computation time needed for each node, just like in geometry nodes. That would show us how performant our setups are and where we could improve them if needed.

Yeah, this could be everywhere in Blender please, shader nodes too.

I don’t think it’s possible.

At the moment, geometry nodes are the only ones that are executed on the CPU as actual nodes. That is, when we talk about a node executing, it often doesn’t mean that the node itself computed anything.

Even geometry nodes have function nodes, which simply add a function* reference to the field’s stack. Most shader nodes work the same way: in the simple case, they just copy strings.

And as for measuring the speed of a separate piece of code on the GPU… I don’t think that’s realistic at all.

And yes, even geometry nodes have problems with nodes that take a long time to run, for example Delete Geometry. But the main difficulty lies in the math nodes, because (so far) math functions* are also not logged with a reference to the actual node they belong to.

Showing timings only seems realistic for something like saving to a file, or the compositor as a whole… or some specific thing like code generation time…

Guys, is it possible, or will it be possible in the future, to mix the Mist pass with the viewport result in realtime?

Question 2

Is there any way to add a LUT baked in DaVinci Resolve to the render, either through built-in functionality or the realtime compositor?

Thanks a lot for the answer. Blender devs, you are the best, guys!!!

Same here. To get good-looking bloom I needed 16 Fast Gaussian blurs connected in parallel, with a base radius of 0.001, and each blur’s size multiplied by 1.6 (adjustable) to get the size for the next one in the stack.
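The sizes in that stack form a geometric series, which is quick to tabulate. This is just the arithmetic, assuming the sizes are relative values (as the 0.001 suggests) and that each blur is exactly 1.6 times the previous one; `blur_sizes` is a made-up helper, not a compositor API.

```python
def blur_sizes(base=0.001, factor=1.6, count=16):
    """Return the blur size for each layer: base * factor**i for i in 0..count-1.

    Assumption: sizes are relative to the image dimensions.
    """
    return [base * factor ** i for i in range(count)]


for i, size in enumerate(blur_sizes()):
    print(f"blur {i + 1:2d}: {size:.5f}")
```

With these defaults the last blur comes out at roughly 1.15, so the multiplier is the main thing to tune if the widest layers turn out too large.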

have a tutorial i can read?

Is @OmarEmaraDev back to working on the Real-time Compositor?? Can’t believe that Blender 3.5 is almost out and we aren’t going to have the Fog Glow node in realtime.