Hi everyone,

For the last couple of weeks, I have been working on implementing the Glare node, and as I mentioned before, the process has been difficult for a number of reasons. So I just wanted to give a semi-technical update on that.

The Glare node has four modes of operation, each of which is covered in one of the following sections.

Ghosts

The Ghosts operation works by blurring the highlights multiple times, then summing those blurred highlights with some applied transformations and color modulations. The blur radius can be up to 16 pixels wide, and the blur is done using a recursive filter.

So I went ahead and implemented the recursive blur using a fourth order Deriche filter. But I couldn’t use it because it turned out to be numerically unstable for high sigma values, and we can’t use doubles like the existing implementation does, because doubles have limited support or much worse performance on GPUs. Many of the tricks I used to work around this issue didn’t work, like decoupling the causal and anti-causal sequences, dividing the images into blocks, and computing the coefficients using doubles on the CPU.

As far as I can tell, the only solution is to lower the order of the filter or decompose the filter into two lower order filters. Since the instability is only significant for high sigma values, two parallel second order Van Vliet filters can be used, because Van Vliet is more accurate for high sigma values. This is something I haven’t figured out yet.
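To make the causal and anti-causal terminology concrete, here is a minimal sketch of a recursive blur in its simplest form. It is a first order symmetric exponential filter, not the fourth order Deriche filter or the Van Vliet filter discussed above, and the function name and the `decay` parameter are made up for illustration. It only shows the structure: one pass running left to right, one running right to left, and a sum of the two, with a cost per pixel that does not depend on the blur width.

```python
def recursive_exponential_blur(signal, decay):
    """First order stand-in for a recursive blur (not Deriche or Van Vliet).

    The combined impulse response is k * decay^|n| with k = (1 - decay) / (1 + decay),
    so it sums to one on an infinitely long signal. Requires 0 <= decay < 1.
    """
    count = len(signal)

    # Causal pass: each output depends on the current input and the previous output.
    causal = [0.0] * count
    previous = 0.0
    for i in range(count):
        previous = signal[i] + decay * previous
        causal[i] = previous

    # Anti-causal pass: runs backwards and only sees inputs to the right of i,
    # so the center sample is not counted twice when the passes are summed.
    anti_causal = [0.0] * count
    following = 0.0
    for i in range(count - 2, -1, -1):
        following = decay * (signal[i + 1] + following)
        anti_causal[i] = following

    scale = (1.0 - decay) / (1.0 + decay)
    return [scale * (c + a) for c, a in zip(causal, anti_causal)]


# Impulse in the middle of a long signal, far enough from both borders.
impulse = [0.0] * 101
impulse[50] = 1.0
blurred = recursive_exponential_blur(impulse, 0.5)
```

The two passes are independent of each other, which is what makes it possible to compute them separately and add the results. The higher order filters follow the same pattern with more feedback terms per pass.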

As a compromise, we could use a direct separable convolution, which is faster anyway because we have a max blur radius of 16 pixels. The problem is that it will give a different result, as shown in the following figure. But that difference might be unnoticeable in most cases since it will be scaled. Left is recursive, right is convolution.
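For reference, a direct separable convolution can be sketched as below. This is only an illustration on a list of rows in plain Python, not the shader code. It assumes a Gaussian kernel, and the function names, the `sigma` parameter, and the clamped border handling are all assumptions made for the example.

```python
import math


def gaussian_kernel(radius, sigma):
    """Normalized 1D Gaussian weights for the offsets -radius..radius."""
    weights = [math.exp(-0.5 * (i / sigma) ** 2) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def convolve_rows(image, kernel):
    """Convolve every row with the kernel, clamping reads at the borders."""
    radius = len(kernel) // 2
    width = len(image[0])
    result = []
    for row in image:
        out_row = []
        for x in range(width):
            acc = 0.0
            for k, weight in enumerate(kernel):
                source_x = min(max(x + k - radius, 0), width - 1)
                acc += weight * row[source_x]
            out_row.append(acc)
        result.append(out_row)
    return result


def transpose(image):
    return [list(column) for column in zip(*image)]


def separable_blur(image, radius, sigma):
    """Horizontal pass followed by a vertical pass with the same 1D kernel."""
    kernel = gaussian_kernel(radius, sigma)
    horizontal = convolve_rows(image, kernel)
    return transpose(convolve_rows(transpose(horizontal), kernel))


# A single highlight in the middle of a 9x9 image.
image = [[0.0] * 9 for _ in range(9)]
image[4][4] = 1.0
blurred = separable_blur(image, radius=2, sigma=1.0)
```

Each pass reads `2 * radius + 1` samples per pixel, so at the maximum radius of 16 that is 33 reads per pass instead of the 33 * 33 reads a non-separable kernel of the same size would need.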

Note that the recursive implementation is the less accurate one here, and whatever recursive implementation we end up with will likely be more accurate than the existing one. So that difference might be intrinsic to the CPU compositor versus the realtime compositor itself.

Simple Star and Streaks

The Simple Star is mostly a special case of the Streaks operation. Both operations work by blurring the highlights in multiple directions and adding all of the blur results. But the implementation is a recursive filter with the fade as a parameter that is applied up to 5 times for each streak. This has serious implications for performance due to the extensive use of global memory, interdependence between pixels along rows and columns, and many shader dispatches. So I decided that this can’t be implemented in its current form as it would not be good for performance.

One possible solution is to measure, on the CPU, the impulse response of the operation on a delta function whose value is the maximum highlight value, then perform a direct convolution. But this would also be slow when the image contains very high highlight values. Another solution is to somehow derive a recursive filter based on the fade value using a closed form expression. But I am not sure if this is possible and I am not currently knowledgeable enough to do it.
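The impulse response idea can be sketched in 1D as follows. The `recursive_streak` function is a hypothetical one-tap stand-in for the existing streak filter, not the actual Glare code, and the `threshold` and `max_length` parameters are assumptions for the example. The point is only to show how a kernel is measured from a scaled delta and why a brighter maximum highlight leads to a longer kernel.

```python
def recursive_streak(signal, fade):
    """Hypothetical stand-in for the streak filter: y[n] = x[n] + fade * y[n - 1]."""
    out = []
    previous = 0.0
    for value in signal:
        previous = value + fade * previous
        out.append(previous)
    return out


def measure_kernel(filter_fn, amplitude, max_length, threshold=1e-3):
    """Filter a delta of the given amplitude and keep the response up to the
    last sample that is still at or above the threshold, normalized by amplitude."""
    delta = [amplitude] + [0.0] * (max_length - 1)
    response = filter_fn(delta)
    length = max_length
    while length > 1 and abs(response[length - 1]) < threshold:
        length -= 1
    return [r / amplitude for r in response[:length]]


def direct_convolution(signal, kernel):
    """Causal direct convolution: out[n] = sum over k of kernel[k] * signal[n - k]."""
    out = []
    for n in range(len(signal)):
        acc = 0.0
        for k, weight in enumerate(kernel):
            if n - k >= 0:
                acc += weight * signal[n - k]
        out.append(acc)
    return out


def streak(signal):
    return recursive_streak(signal, 0.5)


highlights = [0.0, 4.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
kernel = measure_kernel(streak, max(highlights), 64)
approximate = direct_convolution(highlights, kernel)
exact = recursive_streak(highlights, 0.5)

# The same filter measured with a much brighter delta needs a longer kernel.
bright_kernel = measure_kernel(streak, 400.0, 64)
```

In this sketch the kernel grows from 12 to 19 taps when the delta goes from 4 to 400, because the tail stays above the fixed threshold for longer. That is the slowdown with very high highlight values mentioned above.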

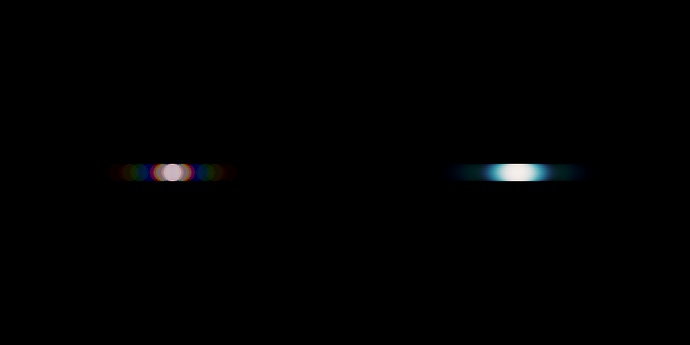

A compromise would be to try to replicate the result of the operation using another technique, like the Deriche filter mentioned before, which I investigated and started to implement. But I realized that this would not be possible for certain configurations, for instance, when color modulation is in play. I thought color modulation would just be different blur radii for different channels, but it turned out to be rather different and more discrete, as shown in the following figure. Left is existing color modulation, right is the color modulation I implemented.

So that compromise might not be feasible after all.

Fog Glow

The Fog Glow operation is very simple in principle. The highlights are just convolved with a simple filter. The origin of that particular filter is unknown as far as I can tell, and it seems to have been chosen through visual judgement. The filter can be up to 512 pixels in size and is non-separable, so it is computationally infeasible using direct convolution and can’t be approximated reliably using a recursive filter.

So the only feasible way to implement it is through frequency domain multiplication using an FFT/IFFT implementation. I still haven’t looked into this in detail, so nothing much can be said about it except that it will take some time, as an FFT implementation is hard to get right. Perhaps the best option is to port a library like vkFFT to our GPU module, but this hasn’t been considered yet.
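To illustrate what frequency domain multiplication means here, below is a minimal 1D sketch using a textbook recursive radix-2 FFT written in plain Python. It is not how a GPU implementation or a library like vkFFT would be structured, and the function names are made up for the example. It only demonstrates the principle: zero-pad both inputs, transform them, multiply element-wise, then transform back.

```python
import cmath


def fft(values, inverse=False):
    """Recursive radix-2 Cooley-Tukey FFT. The length must be a power of two.
    The inverse transform is left unnormalized; the caller divides by the length."""
    n = len(values)
    if n == 1:
        return list(values)
    even = fft(values[0::2], inverse)
    odd = fft(values[1::2], inverse)
    sign = 1.0 if inverse else -1.0
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def fft_convolve(signal, kernel):
    """Linear convolution through zero-padded frequency domain multiplication."""
    output_length = len(signal) + len(kernel) - 1
    size = 1
    while size < output_length:
        size *= 2
    signal_spectrum = fft(list(signal) + [0.0] * (size - len(signal)))
    kernel_spectrum = fft(list(kernel) + [0.0] * (size - len(kernel)))
    product = [a * b for a, b in zip(signal_spectrum, kernel_spectrum)]
    result = fft(product, inverse=True)
    return [value.real / size for value in result[:output_length]]


convolved = fft_convolve([1.0, 2.0, 3.0], [0.0, 1.0, 0.5])
```

The cost is dominated by the transforms and does not grow with the kernel size the way direct convolution does, which is why it suits a large non-separable filter. A 2D version would apply the same 1D transform along rows and then along columns.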