At first I thought of filing this as a bug, but it is one only from a usability point of view, which is a stretch, not to mention that I might be the problem.

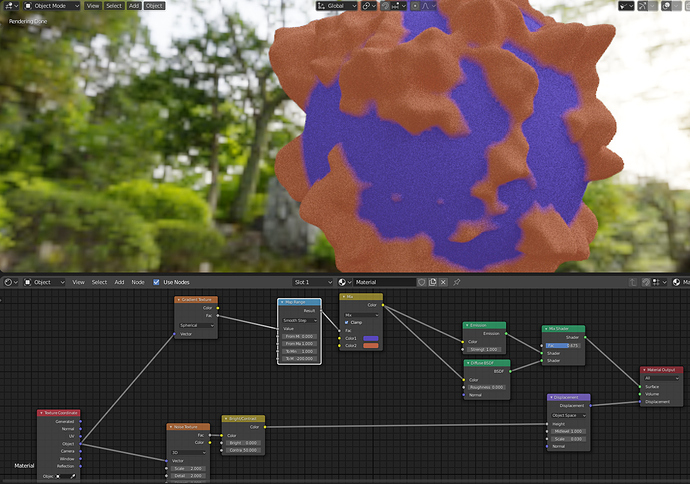

When Microdisplacement is driven by a procedural texture using Texture Coordinates -> Object, the displacement changes the Object texture coordinates, so the texture is effectively lost for shading purposes (it gets generated differently). Basically, you can’t color based on the produced displacement. The coordinate discrepancy easily becomes visually unacceptable, for example when a hard depth threshold stops matching its mask.
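To make the mismatch concrete, here is a minimal sketch in plain Python (not Blender API). The `height` function is a hypothetical stand-in for a procedural texture, and `displace`, `mask`, the scale and the threshold are all made up for illustration. It only shows the general effect: sampling the same function at the displaced position gives a different value than at the original position, so a hard threshold can flip.

```python
import math

def height(p):
    """Hypothetical stand-in for a procedural texture sampled at a 3D point."""
    x, y, z = p
    return 0.5 + 0.5 * math.sin(4.0 * x + 2.0 * z) * math.cos(3.0 * y - z)

def displace(p, normal, scale=0.3):
    """Move p along the normal by the texture value sampled at the ORIGINAL p."""
    h = height(p)
    return tuple(c + n * h * scale for c, n in zip(p, normal))

def mask(p, threshold=0.5):
    """Hard threshold of the kind used to color by depth."""
    return height(p) > threshold

base = (0.75, 0.0, 0.0)      # point on the undisplaced surface
normal = (0.0, 0.0, 1.0)     # assumed surface normal
moved = displace(base, normal)

# The geometry was built from height(base), but a shader that reads
# coordinates from the displaced surface evaluates height(moved).
print("sampled at original position: ", height(base), mask(base))
print("sampled at displaced position:", height(moved), mask(moved))
```

At this sample point the mask is true for the original position and false for the displaced one, which is the kind of threshold/mask mismatch described above.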

So I thought: what’s the big deal? Let’s invert the coordinate displacement with a few nodes. However, that doesn’t seem to work either: apparently the Displacement node produces the actual displacement vectors for geometry generation, while for shading it outputs what the new displacement vectors would be, given the already displaced geometry.

So the only workaround I found is to generate UVs and bake whole texture sequences (thankfully that’s possible with a sequence baking add-on), which isn’t exactly a great solution.

Any ideas as to what I might be getting wrong?

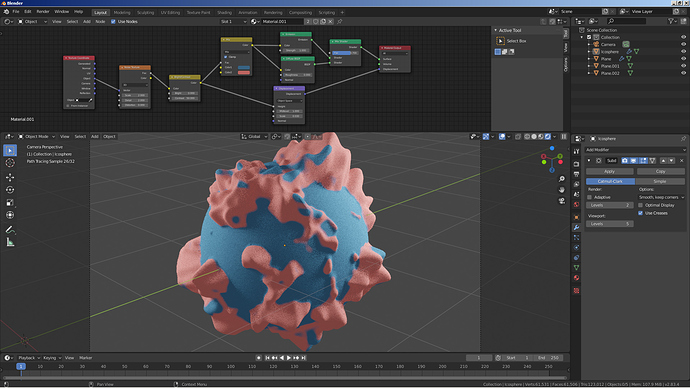

Illustration:

The point of using Object coordinates is to handle shapes that can’t be unwrapped into UVs or default projections in a way that is usable for procedurals.
