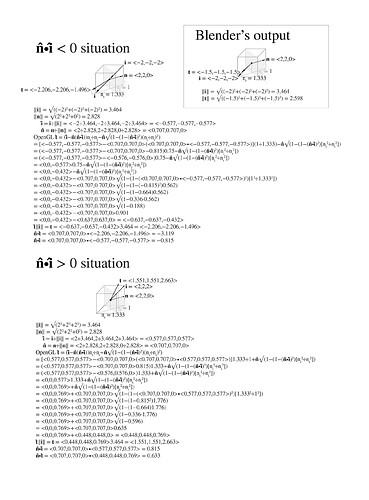

I’m told that Blender implements its Vector Refraction function using OpenGL’s code. Here is that code in mathematical form: t = μ[i − (n•i)n] − √(1 − μ²[1 − (n•i)²])n

I notice that the refracted vector Blender outputs doesn’t make sense at all.

I have:

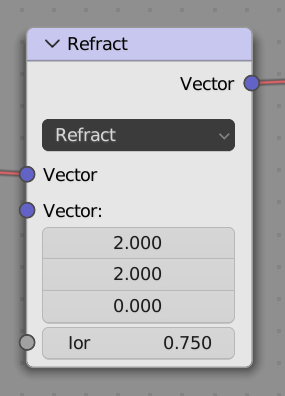

- incident vector i = <−2,−2,−2>

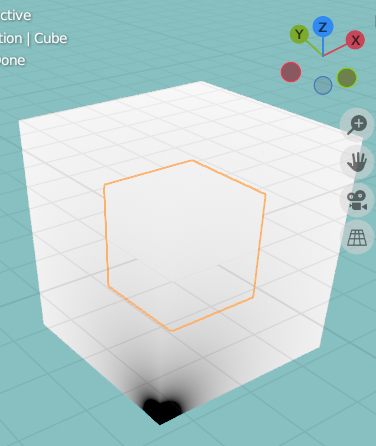

(the black dot you see is i = <−2,−2,−2>)

- normal vector n = <2,2,0>

- Ratio of IORs: nᵢ/nₜ = 1/1.333 = 0.75
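For reference, the formula can be evaluated on these inputs with a minimal Python sketch. The helper names (`dot`, `normalize`, `refract`) are made up for illustration and are not Blender's API. Returning the zero vector on total internal reflection mirrors what GLSL's `refract` is documented to do. GLSL also documents that its inputs should already be normalized. Whether Blender normalizes either input is not confirmed here, so the sketch evaluates both the raw vectors and their normalized versions.

```python
import math

def dot(a, b):
    # Dot product of two 3-component tuples.
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    # Scale v to unit length (assumes v is not the zero vector).
    length = math.sqrt(dot(v, v))
    return tuple(x / length for x in v)

def refract(i, n, mu):
    # Evaluates t = mu*[i - (n.i)n] - sqrt(1 - mu^2*[1 - (n.i)^2]) * n
    d = dot(n, i)
    k = 1.0 - mu * mu * (1.0 - d * d)
    if k < 0.0:
        # Total internal reflection: GLSL's refract returns the zero vector.
        return (0.0, 0.0, 0.0)
    root = math.sqrt(k)
    return tuple(mu * (ic - d * nc) - root * nc for ic, nc in zip(i, n))

i = (-2.0, -2.0, -2.0)   # incident vector from the post
n = (2.0, 2.0, 0.0)      # normal vector from the post
mu = 0.75                # ratio of IORs as rounded in the post

t_raw = refract(i, n, mu)                         # inputs used as given
t_unit = refract(normalize(i), normalize(n), mu)  # inputs normalized first

print("raw inputs:       ", t_raw)
print("normalized inputs:", t_unit)
print("length (normalized case):", math.sqrt(dot(t_unit, t_unit)))
```

Comparing the two printed vectors with the node's output may help narrow down whether the difference comes from normalization or from something else.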

I entered these values into the Vector Refraction node like this:

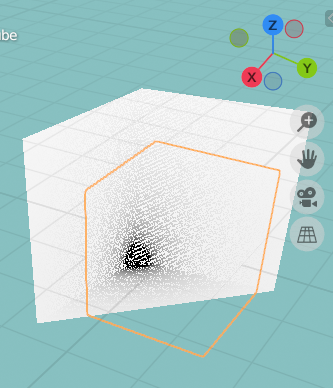

So, Blender’s refracted vector is approximately t = <−1.5,−1.5,−1.5>, which doesn’t make sense, since its magnitude isn’t even equal to the incident vector’s magnitude.
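The magnitude expectation can be checked against the formula itself. The short derivation below assumes that i and n are both unit vectors, which is not true of the values listed in this post, and writes c = n•i as shorthand (a symbol introduced here for illustration). Under that assumption, i − cn is perpendicular to n, so the two terms of t add in quadrature:

$$
\begin{aligned}
\lVert \mathbf{i} - c\,\mathbf{n} \rVert^2 &= 1 - c^2, \\
\lVert \mathbf{t} \rVert^2 &= \mu^2 (1 - c^2) + \left(1 - \mu^2 \left[1 - c^2\right]\right) = 1 .
\end{aligned}
$$

So the formula returns a unit-length t when both inputs are unit length. For inputs that are not normalized, this identity gives no guarantee about the length of t.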

Here is my calculation (please focus on the one at the top):

The refracted vector I computed is what I believe Blender should output, not t = <−1.5,−1.5,−1.5>.

What’s wrong with Blender? Did I do something wrong?

Please help. Thank you!