Name

Huajian Qiu

Contact

Email: [email protected]

Forum: wakin

Github: github.com/huajian1069

Synopsis

VR/AR/MR (or XR) is an entirely new way to interact with computer-generated 3D worlds, and it can bring a rich, immersive experience to artists. The groundwork for OpenXR-based XR support in Blender was recently merged into master, marking the end of Virtual Reality - Milestone 1. Towards Milestone 2: Continuous Immersive Drawing, I think controller input/haptics support is the natural next step, and it still awaits design and engineering.

In this project, I will bring OpenXR action-based interaction support to Blender. By the end of the project, users will be able to navigate the 3D world and to select and inspect objects pointed at by controllers, all built on stable, well-performing low-level support. This project will lay the foundation for user input and therefore take the next step towards Milestone 2.

Benefits

VR navigation without external assistance

Currently, scene inspection relies on Assisted VR: a second person assists you by controlling the Blender scene with mouse and keyboard. With controller input support, users will be able to navigate and inspect scenes actively and independently. Google Earth VR offers a great user experience in this respect, and it would be attractive to realise something similar in Blender.

Rich usability and visual assistance

A user wearing a headset cannot see the real world, so drawing a visualisation of the controllers inside VR gives a better user experience. Related improvements include rendering a laser pointer from the controller, drawing a small dot on the surface the controller points at, and providing haptic feedback when interacting with a virtual scroll bar or button.

Rich controllability

Input integration and mapping will let users perform abstract VR actions (like “teleport”), physical motions (like flying) or graphic actions (like lensing) inside VR. At the same time, such motions may induce motion sickness. Integrating a black fade or some optic-flow reduction would make users more comfortable. I would prefer to define adding a motion-sickness reduction technique as a stretch goal.

Base to continuous immersive workflow inside VR

With interaction support from controllers, some existing workflows can become continuous immersive workflows; grease pencil drawing and sculpting come to mind. The project will pave the way for deep integration of VR and Blender operators.

Future-proof adoption of the VR/AR/MR industry standard

The OpenXR 1.0 specification was released on July 29th, 2019, and almost all major players in the XR industry have publicly committed to supporting OpenXR. A further step towards VR support in master will give Blender an edge in this fast-paced industry.

Deliverables

1. Minimum Viable Product: successful communication between Blender and controllers based on the OpenXR action system

Success means Blender can reliably request input device states and control haptic events. Input states include the 6-DoF positional and rotational tracking pose and button states. Haptic events include making a controller vibrate briefly in response to an action. This will be accompanied by an error-handling mechanism, and an exception throw-and-catch mechanism is a good choice. When an error occurs, an informative message will be forwarded to the user.
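The throw-and-catch idea could look roughly like the following Python sketch. It is written for illustration, not as Blender code. It relies on the OpenXR convention that an XrResult below zero is a failure and that values of zero or above are successes. The three error names and values match openxr.h. XrCallError, check, report_to_user and run_xr_step are hypothetical names.

```python
# Hypothetical sketch: turning OpenXR result codes into exceptions.
# OpenXR convention: XrResult >= 0 is a success, < 0 is a failure.
XR_SUCCESS = 0
_ERROR_NAMES = {
    -1: "XR_ERROR_VALIDATION_FAILURE",
    -2: "XR_ERROR_RUNTIME_FAILURE",
    -3: "XR_ERROR_OUT_OF_MEMORY",
}


class XrCallError(RuntimeError):
    """Raised when an OpenXR call returns a failure code (hypothetical)."""

    def __init__(self, call_name, result):
        self.call_name = call_name
        self.result = result
        name = _ERROR_NAMES.get(result, f"XrResult {result}")
        super().__init__(f"OpenXR call '{call_name}' failed: {name}")


def check(result, call_name):
    """Return the result unchanged on success, raise XrCallError on failure."""
    if result < 0:
        raise XrCallError(call_name, result)
    return result


def report_to_user(message):
    # Placeholder for forwarding the message to Blender's report system.
    print(message)


def run_xr_step(xr_call):
    """Catch failures in one place and forward an informative message.

    xr_call stands in for a real binding and returns an XrResult value.
    """
    try:
        check(xr_call(), "xrSyncActions")
        return True
    except XrCallError as err:
        report_to_user(str(err))
        return False
```

For example, run_xr_step(lambda: -2) prints "OpenXR call 'xrSyncActions' failed: XR_ERROR_RUNTIME_FAILURE" and returns False. In the C/C++ code, xrResultToString from the OpenXR API can name any result code, so a hand-written table like _ERROR_NAMES would not be needed there.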

2. Python query and API design

To abstract away low-level communication details, I will design a Python encapsulation for querying controller states and events. Its API should be convenient for high-level applications. Building on this, one kind of navigation will be implemented to verify success: users will be able to navigate (fly) forward and backward along whatever direction the controller points in. I expect this to be a straightforward application.
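A sketch of the fly navigation step in plain Python, without Blender's mathutils. It assumes the controller pose arrives as a position plus a unit quaternion (w, x, y, z) already expressed in scene coordinates. It also assumes the controller's local pointing direction is -Z, which is OpenXR's convention for the aim pose. The function names and the thrust/speed/dt parameters are hypothetical.

```python
import math

# Assumption: the controller's local pointing direction is -Z (OpenXR aim
# pose convention) and the pose is already in the scene's coordinates.
LOCAL_FORWARD = (0.0, 0.0, -1.0)


def quat_from_axis_angle(axis, angle):
    """Unit quaternion (w, x, y, z) for a rotation of angle radians about a unit axis."""
    half = 0.5 * angle
    s = math.sin(half)
    return (math.cos(half), axis[0] * s, axis[1] * s, axis[2] * s)


def quat_rotate(q, v):
    """Rotate vector v by unit quaternion q = (w, x, y, z).

    Uses v' = v + w*t + q_vec x t, with t = 2 * (q_vec x v).
    """
    w, qx, qy, qz = q
    vx, vy, vz = v
    tx = 2.0 * (qy * vz - qz * vy)
    ty = 2.0 * (qz * vx - qx * vz)
    tz = 2.0 * (qx * vy - qy * vx)
    return (
        vx + w * tx + (qy * tz - qz * ty),
        vy + w * ty + (qz * tx - qx * tz),
        vz + w * tz + (qx * ty - qy * tx),
    )


def fly_step(viewer_pos, controller_rot, thrust, speed, dt):
    """Move the viewer along the controller's pointing direction.

    thrust in [-1, 1] (e.g. a thumbstick axis): positive flies forward,
    negative flies backward. speed is in scene units per second.
    """
    direction = quat_rotate(controller_rot, LOCAL_FORWARD)
    step = thrust * speed * dt
    return tuple(p + step * d for p, d in zip(viewer_pos, direction))
```

Scaling the step by the frame time dt keeps the flying speed independent of the headset's refresh rate.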

3. Picking support, finding what a controller points at

This includes two cases: pointing at an object with an actual surface, and pointing at one without a surface. Both cases will be handled, but the former will receive more attention. Based on this, users will be able to drag a controller-selected object and rotate themselves around it to inspect it.
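For objects without a surface, one simple option is a CPU-side geometric test, not the OpenGL picking this proposal plans to use. The idea is to treat each object as its origin point and pick the one closest to the controller ray within a tolerance. The object names, positions, tolerance value and function names below are hypothetical.

```python
import math


def ray_point_distance(origin, direction, point):
    """Return (t, dist): the parameter along the ray of the closest approach
    and the perpendicular distance to the point.

    direction must be a unit vector; t < 0 means the point is behind the ray.
    """
    rel = [p - o for p, o in zip(point, origin)]
    t = sum(r * d for r, d in zip(rel, direction))
    closest = [o + t * d for o, d in zip(origin, direction)]
    return t, math.dist(closest, point)


def pick_point_objects(origin, direction, objects, tolerance=0.1):
    """Pick the nearest object (along the ray) whose origin lies within tolerance.

    objects maps a name to a world-space origin (e.g. lights, cameras, empties).
    Returns the picked name, or None if nothing is close enough to the ray.
    """
    best_name, best_t = None, math.inf
    for name, pos in objects.items():
        t, dist = ray_point_distance(origin, direction, pos)
        if t >= 0.0 and dist <= tolerance and t < best_t:
            best_name, best_t = name, t
    return best_name
```

A fixed distance tolerance makes far-away objects hard to hit. A tolerance that grows with t (a cone around the ray) may feel more natural in VR.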

4. Visualisation of controllers, end-user documentation on controller input/haptics

Controller meshes may be obtainable from the OpenXR runtime, depending on the extensions it supports. It would be nice to render them in the HMD session as visual assistance. The end-user documentation should be straightforward, following the precursor project from last year.

5. Extending VR debugging utilities, maintainable code abstraction

Since graphics and XR applications are hard to debug, I will take advantage of the current debugging utilities and extend them as my development requires. It may be helpful to insert custom API layers between Blender and the OpenXR runtime. The abstraction should be carefully designed, either at the GHOST level or by porting the code into a new XR module. At the end of the project, the code will be tested and cleaned up to be consistent with the code base. In short, the last two deliverables aim to make the work friendly to future end-users and developers.

Project Details

About controller states

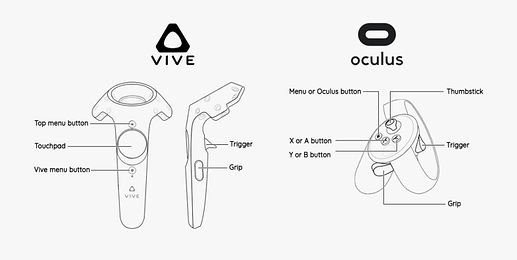

Most controllers have one trigger button, one or two grip buttons, one menu button, one touchpad or thumbstick, and several other keys. Some keys have multiple modes of operation and several detectable states, such as click, long press, touch and double click. In OpenXR, states such as click and touch are exposed as components of input sources. Gestures such as long press and double click have to be derived by the application from those states over time.
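Long press and double click could be derived from the boolean click state sampled each frame, as in the sketch below. The class name and the thresholds are hypothetical and would need tuning: 0.5 s for a long press, and at most 0.3 s between two releases for a double click.

```python
class ButtonGestureDetector:
    """Derive 'click', 'double_click' and 'long_press' events from a boolean
    button state sampled over time (times in seconds). Hypothetical helper."""

    def __init__(self, long_press_s=0.5, double_click_s=0.3):
        self.long_press_s = long_press_s
        self.double_click_s = double_click_s
        self._pressed = False
        self._press_time = None
        self._long_fired = False
        self._last_release = None

    def update(self, pressed, now):
        """Feed one sample; return the list of events detected at this sample."""
        events = []
        if pressed and not self._pressed:  # press edge
            self._press_time = now
            self._long_fired = False
        elif pressed and self._pressed:  # button still held
            if not self._long_fired and now - self._press_time >= self.long_press_s:
                events.append("long_press")
                self._long_fired = True
        elif not pressed and self._pressed:  # release edge
            if not self._long_fired:
                if (self._last_release is not None
                        and now - self._last_release <= self.double_click_s):
                    events.append("double_click")
                    self._last_release = None  # don't chain into a triple click
                else:
                    events.append("click")
                    self._last_release = now
        self._pressed = pressed
        return events
```

This version reports a single click immediately and a double click on the second release. If a click and a double click must trigger mutually exclusive operations, the single click would have to be delayed until the double-click window has passed.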

OpenXR Action system support

Implementing this requires learning several concepts introduced by OpenXR. Applications (here, Blender) communicate with controller inputs and haptics through Actions. Each Action has a state (of type boolean, float, vector2, …) and is bound to input sources according to an interaction profile. Blender needs to create actions at OpenXR initialisation time. It later uses them to request controller state, create action spaces, or control haptic events.

An interaction profile path has the form:

“/interaction_profiles/<vendor_name>/<type_name>”

Input sources are identified and referenced by path name strings, following this pattern:

…/input/<identifier>[_<location>][/<component>]

For example, the path for listening to a click of the right-hand trigger button is:

“/user/hand/right/input/trigger/click”
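The suggested bindings for one interaction profile can be kept as plain data and checked before being handed to the runtime. In OpenXR they are submitted through xrSuggestInteractionProfileBindings. In the sketch below, the profile and input paths follow the OpenXR specification for the HTC Vive controller. The Blender action names, the regular expressions and validate_bindings are hypothetical, and the expressions only check the general shape of hand paths.

```python
import re

PROFILE_RE = re.compile(r"^/interaction_profiles/[a-z0-9_]+/[a-z0-9_]+$")
# .../input/<identifier>[_<location>][/<component>], restricted to hand paths;
# output paths such as .../output/haptic are accepted as well.
SOURCE_RE = re.compile(
    r"^/user/hand/(left|right)/(input|output)/[a-z0-9]+(_[a-z0-9]+)?(/[a-z0-9]+)?$"
)

# Hypothetical Blender action names mapped to OpenXR input source paths.
VIVE_BINDINGS = {
    "profile": "/interaction_profiles/htc/vive_controller",
    "bindings": [
        ("select", "/user/hand/right/input/trigger/click"),
        ("fly_speed", "/user/hand/right/input/trigger/value"),
        ("grab", "/user/hand/right/input/squeeze/click"),
        ("controller_aim", "/user/hand/right/input/aim/pose"),
        ("feedback", "/user/hand/right/output/haptic"),
    ],
}


def validate_bindings(table):
    """Return a list of problems; an empty list means the table looks well-formed."""
    problems = []
    if not PROFILE_RE.match(table["profile"]):
        problems.append(f"bad profile path: {table['profile']}")
    for action, path in table["bindings"]:
        if not SOURCE_RE.match(path):
            problems.append(f"bad path for action '{action}': {path}")
    return problems
```

Keeping one such table per supported interaction profile would let the same Blender actions work across controllers, leaving the runtime to pick the profile that matches the connected hardware.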

The final step is to repeatedly synchronise and query the input action states.
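A sketch of this per-frame pattern follows. After each xrSyncActions call, the state returned by xrGetActionStateBoolean has the fields currentState, changedSinceLastSync, lastChangeTime and isActive. Those fields are enough to detect press and release edges. Here the runtime is replaced by a list of pre-recorded states, so BoolActionState, edge_events and poll_edges are illustrative names only.

```python
from dataclasses import dataclass


@dataclass
class BoolActionState:
    """Mirrors the fields of OpenXR's XrActionStateBoolean."""
    current_state: bool
    changed_since_last_sync: bool
    last_change_time: int  # XrTime, in nanoseconds
    is_active: bool


def edge_events(state):
    """Classify one synced state as 'pressed', 'released' or None."""
    if not state.is_active or not state.changed_since_last_sync:
        return None
    return "pressed" if state.current_state else "released"


def poll_edges(frames):
    """Simulate the frame loop. frames stands in for the states the runtime
    would return after each sync; returns (frame_index, event) pairs."""
    events = []
    for frame_index, state in enumerate(frames):
        # In Blender this would be xrSyncActions(...) followed by
        # xrGetActionStateBoolean(...) for each action of interest.
        event = edge_events(state)
        if event is not None:
            events.append((frame_index, event))
    return events
```

Checking isActive first matters because an action whose bound device is missing or not tracked still returns a state, and that state should be ignored.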

Finding what a controller points at

For objects both with and without real geometry (e.g. lights, cameras, empties), I will use OpenGL-based picking to find the point on the object. For visual assistance, I will draw a small dot on the surface the controller points at, with the help of the depth buffer of the current frame.
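The depth buffer can help place the dot by turning the depth value under a pixel back into an eye-space point. The sketch assumes OpenGL's standard perspective depth mapping with near/far planes and a [0, 1] depth range. It takes the field of view as four angles in the style of OpenXR's XrFovf (angleLeft, angleRight, angleUp, angleDown), so asymmetric per-eye frusta are handled. The function names are hypothetical, and the pixel row py is counted from the bottom, as in OpenGL window coordinates.

```python
import math


def depth_to_eye_z(depth, near, far):
    """Convert a [0, 1] depth-buffer value to eye-space z (negative in front).

    Assumes the standard OpenGL perspective mapping with z_ndc = 2 * depth - 1.
    """
    z_ndc = 2.0 * depth - 1.0
    return -2.0 * far * near / ((far + near) - z_ndc * (far - near))


def unproject_pixel(px, py, depth, width, height, fov, near, far):
    """Eye-space point under pixel (px, py), with py counted from the bottom row.

    fov = (angle_left, angle_right, angle_up, angle_down) in radians; for a
    centred view, angle_left and angle_down are negative.
    """
    angle_left, angle_right, angle_up, angle_down = fov
    x_ndc = 2.0 * (px + 0.5) / width - 1.0
    y_ndc = 2.0 * (py + 0.5) / height - 1.0
    z_eye = depth_to_eye_z(depth, near, far)
    dist = -z_eye  # distance along the view axis
    tl, tr = math.tan(angle_left), math.tan(angle_right)
    td, tu = math.tan(angle_down), math.tan(angle_up)
    # Interpolate the frustum edge slopes at this NDC coordinate.
    x_eye = dist * ((tr - tl) * x_ndc + (tr + tl)) / 2.0
    y_eye = dist * ((tu - td) * y_ndc + (tu + td)) / 2.0
    return (x_eye, y_eye, z_eye)
```

Multiplying the result by the inverse view matrix would give the world-space position where the dot can be drawn.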

Use-case driven development

I also propose use-case-driven development that enables a few interactive use cases. They are based on querying common keys' states and mapping them to operations in Blender. All of them are straightforward and can serve as tests to verify success and find bugs.

Proposed use cases:

- I wish to navigate (fly) in the scene by translating along whatever direction the controller points in.

- I wish to use the controller to rotate the scene around me about the z-axis (rotations about other axes make people feel awkward).

- I wish to drag a controller-selected object and rotate myself around it to inspect it.
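The second and third use cases both reduce to rotating the viewer about a vertical (z) axis through a pivot. For turning on the spot, the pivot is the viewer's own position; for orbiting, it is the selected object's position. A sketch, assuming positions are (x, y, z) tuples in Blender's z-up world space and the viewer's bearing is stored as a yaw angle in radians. The function name is hypothetical.

```python
import math


def orbit_about_z(viewer_pos, viewer_yaw, pivot, angle):
    """Rotate the viewer by angle (radians) about the vertical axis through pivot.

    Returns the new (position, yaw). Rotating the viewer by +angle looks the
    same as rotating the scene by -angle around the same axis.
    """
    c, s = math.cos(angle), math.sin(angle)
    dx = viewer_pos[0] - pivot[0]
    dy = viewer_pos[1] - pivot[1]
    new_pos = (
        pivot[0] + c * dx - s * dy,
        pivot[1] + s * dx + c * dy,
        viewer_pos[2],  # height is unchanged by a rotation about z
    )
    new_yaw = (viewer_yaw + angle) % (2.0 * math.pi)
    return new_pos, new_yaw
```

With pivot equal to viewer_pos, the position stays the same and only the yaw changes, which is the "rotate the scene around me" case.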

Blender side integration

As I understand it, navigating a scene from a first-person perspective is equivalent to moving the virtual camera in the scene. If that is correct, it should be enough to modify the position and bearing of the virtual camera according to controller inputs.
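In a VR session, the headset sets the tracked head pose every frame, so controller navigation probably cannot simply overwrite the camera. A common approach, assumed here rather than taken from Blender's code, is to keep a navigation base (position plus yaw) and apply the tracked head pose on top of it. The sketch restricts the base to a yaw rotation, matching the z-axis-only rotation use case, and handles head position only, not orientation. NavigationBase, head_in_world and apply_fly are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class NavigationBase:
    """Hypothetical offset applied on top of the tracked head pose."""
    position: tuple = (0.0, 0.0, 0.0)
    yaw: float = 0.0  # rotation about world z, in radians


def head_in_world(base, head_local):
    """Map a head position tracked in the base's local frame to world space:
    world = base.position + Rz(base.yaw) * head_local."""
    c, s = math.cos(base.yaw), math.sin(base.yaw)
    x, y, z = head_local
    return (
        base.position[0] + c * x - s * y,
        base.position[1] + s * x + c * y,
        base.position[2] + z,
    )


def apply_fly(base, world_delta):
    """Navigation edits only the base; the tracked head pose stays untouched."""
    base.position = tuple(p + d for p, d in zip(base.position, world_delta))
```

Because navigation only changes the base, the user's real head movement keeps working normally while flying or turning.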

Related projects

Core Support of Virtual Reality Headsets through OpenXR - GSoC

It aimed to bring stable, well-performing OpenXR-based VR rendering support into the core of Blender, and the Assisted VR experience was partially realised through it. I often refer to this project to kick off my work.

Virtual Reality - Milestone 1 - Scene Inspection

This is the continuation of the GSoC project above, with more patches and new features such as the mirrored VR view and location bookmarks. My project will build on these previous achievements and share many utilities.

Virtual Reality - Milestone 2 - Continuous Immersive Drawing

This is the parent project of this GSoC project, whose big picture is to enable grease pencil drawing in VR. I will try to contribute to this milestone by completing this GSoC project well.

Testing Platforms

I have access to an HTC Vive Pro Eye and an Oculus Rift at my host laboratory. If necessary, I will also try to obtain a Windows Mixed Reality (WMR) device. Various controllers are at hand. The choice depends on which OpenXR runtimes have been released so far. Given that, headsets and controllers for WMR and Oculus would be the best choice.

Project Schedule

May 4 - June 1: Community bonding period:

- Get familiar with prior work done by Julian

- Experiment with the OpenXR action system

- Set up the development environment

Actual start of the Work Period

June 1 - June 29:

- Define action sets and meaningful actions.

- Bind actions to input source paths.

- Set up debugging utilities

June 29 - July 3: First evaluations:

- MVP should be done at this point.

- In-VR navigation should work by translating along the direction the controller points in.

July 3 - July 27:

- Query input states and events

- Write the Python API

- Find where the controller points using OpenGL

July 27 - July 31: Second evaluations:

- Picking support should be realised. Users should be able to see a small dot on the surface of an object, select the object and move around it to inspect it.

July 31 - August 24:

- Design the UI of the new features

- Add visualisation of the controllers

- Add a technique to reduce motion sickness (stretch goal)

- Write documentation

August 24 - August 31: submit code and evaluations

- End-user Documentation

- Mergeable branch with XR input support

- Weekly reports and final reports

I will be based in Lausanne, Switzerland, during the summer, so I will be working in the GMT+2 time zone. I will be available 35-45 hours per week. Since our summer vacation runs from June to September, I believe I have enough time to complete the project.

Bio

I have been a developer focusing on graphics and visualisation for years. In the past, I mostly worked on projects on campus, and this is my first attempt at Blender development. I enjoy building customised tools with a big picture in mind. I am a master's student in computational science and engineering at EPFL, living in Lausanne, Switzerland.

C is my native programming language, but I also have solid experience with C++, Python and Java. I started writing C programs in high school, for a competition about controlling a group of wheeled robots playing simplified basketball.

I have been using Blender for 3 years, since I had the chance to build a simulated fly model from CT-scanned image stacks. The work involved optimising the mesh quality and animating the fly to move as recorded on video.

I am also a big fan of VR games. I am currently involved in a research project at the host lab of my university on how to reduce users' cybersickness during VR games. It gives me a rare chance to be close to many virtual reality devices and experts. I hope to start a professional career in VR and, with this project, to make continuous contributions to Blender.

After all, it’s going as planned. Would just mention how your work takes the next step for milestone 2.
