I just LOVE Cycles SSS, including the new Anisotropy and IOR options. As this thread is called Cycles-X feedback, I guess expression of Cycles love is allowed as well.

Concept by the talented Kevin Keele.

@brecht

Can we see some CPU performance improvements in the next official release? I have an 8th-gen i5 CPU, and rendering with a noise threshold and these options:

Noise threshold: 0.0

Max sample: 300

Min sample: 0

Tiling on, with a 100 px tile size

…seems to be twice as slow on my doughnut scene (from 6 mins to 12 mins)! Previously I rendered with branched path tracing, using 30 (total) samples on everything except 150 for glossy and 300 for transmission. Tile size was probably 200 px in Cycles. (200 px in Cycles X didn’t make any difference, so I am stuck with 100 px.)

Tiles in Cycles-X are used primarily to work around memory issues. Ideally you should stick with large tile sizes (e.g. 2048 px). However, in some cases reducing the tile size can increase performance (but don’t decrease the tile size too much or you will experience slowdowns again).

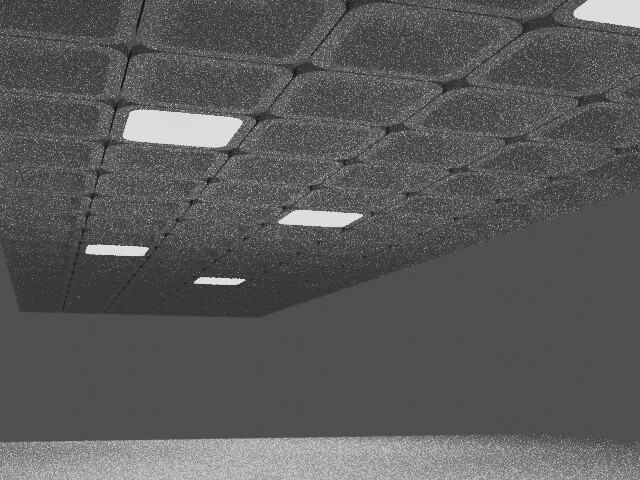

Here’s an example of a scene that benefits from a decreased tile size:

Is there any chance of the old SSS methods coming back?

I have models that rely on Cubic and the other methods just don’t look right.

It just sucks to see choice removed, especially since these are simpler algorithms that are probably already used elsewhere in Blender.

Changing the tile size didn’t help! I tried 1024 px and also 500 px.

@brecht Maybe bringing back the branched path tracing will help low end CPUs?

The point is that the tile size should be left at the default of 2048 in most cases. Tiled rendering was removed at one point because progressive rendering had been sped up enough in Cycles X to be on par with tiled rendering, so tiles were no longer needed for speed. But the developers found that at high resolutions the full render buffer was too memory-heavy and crashed Blender, so they brought back tiled rendering just for that. They set the default size to 2k so that tiles are only used for higher-resolution renders. It should not be adjusted for speed now, since speed is no longer the purpose of tiled rendering.
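To put rough numbers on the tile discussion above, here is a minimal sketch. It assumes tiles are laid out as a plain grid using ceiling division; how Cycles X actually splits and schedules tiles may differ. `tile_grid` is a hypothetical helper for illustration, not part of Blender's API.

```python
import math


def tile_grid(width, height, tile_size=2048):
    """Return (columns, rows, total) tiles needed to cover a width x height image.

    Assumption: a simple grid with ceiling division, not Cycles' real scheduler.
    """
    if tile_size <= 0:
        raise ValueError("tile_size must be positive")
    cols = math.ceil(width / tile_size)
    rows = math.ceil(height / tile_size)
    return cols, rows, cols * rows


# Compare the default 2048 px tile size with a 100 px tile size.
for w, h in [(1920, 1080), (3840, 2160), (8192, 8192)]:
    print((w, h), "2048 px:", tile_grid(w, h), "100 px:", tile_grid(w, h, 100))
```

Under that assumption, a 1920x1080 render fits in a single 2048 px tile, so tiling only comes into play at larger resolutions, while a 100 px tile size splits the same frame into a couple of hundred small tiles.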

I think it’s a bad idea to use noise threshold 0 (automatic), since you lose control over the quality of the image that way. The ideal workflow has now changed from sample-based to noise-based.

So basically you would use the default max samples of 4096 most of the time, and use the noise threshold to set the quality of your final render. Cycles automatically detects whether each part of your image has reached the noise level you set; it stops sampling the parts that have reached it and continues sampling the parts with more noise. The smaller the threshold, the better the quality. The default of 0.01 is already quite good, and I usually just use 0.05, which is lower quality but faster.

It has also been discussed in another topic, but I don’t think branched path tracing will come back. Here was the thread:
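For anyone who prefers scripting, the noise-based setup described above can be sketched in Python. The property names used here (`use_adaptive_sampling`, `adaptive_threshold`, `adaptive_min_samples`, `samples`) are what I understand `scene.cycles` to expose in Blender 3.0; treat them as assumptions and check the Python tooltips in your build. `use_noise_based_sampling` is a hypothetical helper name. Outside Blender the script falls back to a plain namespace object so it still runs.

```python
from types import SimpleNamespace


def use_noise_based_sampling(cycles, threshold=0.01, max_samples=4096, min_samples=0):
    """Set noise-threshold (adaptive) sampling on a Cycles settings object.

    `cycles` is expected to be `scene.cycles` (property names assumed, see above)
    or any object that accepts attribute assignment.
    """
    cycles.use_adaptive_sampling = True      # the "Noise Threshold" checkbox
    cycles.adaptive_threshold = threshold    # smaller = cleaner but slower
    cycles.samples = max_samples             # upper bound on samples per pixel
    cycles.adaptive_min_samples = min_samples  # 0 lets Cycles choose
    return cycles


try:
    import bpy  # only available inside Blender (or the bpy module)
    target = bpy.context.scene.cycles
except ImportError:
    target = SimpleNamespace()  # stand-in so the sketch runs anywhere

# 0.05 is the faster, lower-quality threshold mentioned in the post above.
settings = use_noise_based_sampling(target, threshold=0.05)
print(settings.adaptive_threshold, settings.samples)
```

The idea is to leave the sample cap high and tune only the threshold, rather than picking a fixed sample count per render.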

Regarding Cycles-X crashing on high-resolution renders: Cycles-X denoises the whole image in one pass after all the tiles have been rendered. If the image resolution is too large, the renderer crashes. Blender 2.93 does not crash, because it denoises each tile as it is rendered, so the graphics card memory does not overflow.

In Blender 3.1 and higher, OptiX will denoise the image in 4096x4096 chunks. This will reduce the VRAM usage when denoising. So give 3.1 a try and see if it helps.

In Blender 2.83 and higher, I believe OIDN will denoise the image in 6GB chunks.

Why is clearcoat set to 1.45 by default in the PBR shader? Clearcoat is rarely used, and it’s confusing a lot of people moving from 2.9x to 3.x, who always end up turning it off. It’s not something I can even fix with a default startup file. Can I at least know the motive behind the change, or is it just for the sake of changing something? Where is that git commit? I wanna have a deep and personal talk with the dev who decided it was a marvelous idea to randomly enable a slider just so that the default material looks good.

-With love

I tried to render in version 3.1, but the high-resolution image still crashes. It still denoises the image as a whole after all the tiles are rendered, instead of denoising each tile separately.

Which build are you testing? 1.45 for the clearcoat amount doesn’t even make sense, because the maximum is 1. But 1.45 is the default value for IOR, so maybe something got mixed up?

Never mind, it was a plugin. The whole studio got confused, but it’s been addressed. Feel like an idiot now.

Am I the only one experiencing a lot of freeze-to-desktop in heavy scenes?

It’s very weird. It’s like the Cycles 3D viewport is dying: everything else in the UI is responsive, but somehow the 3D viewport has died and is frozen.

Hi, I also have a similar problem. I can’t reproduce it yet, and I’m still looking for why it sometimes happens. Generally the 3D viewport suddenly becomes very, very laggy, to the point of being unusable. The only solution is restarting the program. This usually occurs when switching between Solid and Rendered view, but also when switching between system windows in Windows (Alt + Tab).

Heavy 2GB project, 2k+ objects, a lot of geo nodes and materials.

Testing in Blender 3.0 Stable and 3.1 Alpha.

I’m still trying to work out whether the cause is my project or a bug (although I haven’t seen anything like this in the builds from a month ago).

I had a problematic file. Can’t share. It was a multiple-room scene, with one room filled with downloaded clothes like folded and hanging shirts or coats. Blender crashed most of the time when making F12 renders, while viewport Cycles performed quite well…

I mention those cloth objects because I have now fixed the problem: I just applied all modifiers and joined the objects into 3 “macro-cloth” objects. Now the scene renders smooth as butter. Dunno really where the problem was. OK, it was probably in the cloth objects, but I still can’t say what was causing the random (but frequent) crashes. The console said something about auto-smooth not being enabled. I enabled it and it still crashed…

One more thing that I didn’t see in other scenes: when making an F12 render, the console writes a long list of “Using fallback”…

What is that???

I’m still seeing strengths with each of the denoisers. NLM seems to do well in medium-noise situations, where there isn’t much colour variation and so more of a reliance on the normals data pass. I almost always increase the NLM filter size from 8px to either 16 or 24px which usually gets rid of any blotches and temporal issues. As some of the examples earlier in this topic show, OIDN seems to smooth over low contrast areas where NLM preserves detail.

Temporal flickering comparison video between NLM and OIDN with 2.93.4:

Above render was using 128spp with color + albedo + normal. Unfiltered image below:

I’ve also just tested the above in 3.0.0 (which I assume has OIDN 1.4.0?). Although it now adds much less to the render time (a couple of extra seconds instead of 12), the blotches and temporal flickering remain. From looking at the renders on this thread, OIDN 1.4.0 does a better job at retaining fine texture compared to before, but I haven’t tested that so far.

Another factor here is that not everyone will be able to use OptiX (non Nvidia RTX users) which leaves only OIDN for some.

A separate issue with OptiX in 3.0.0 is that some areas remain noisy compared to 2.93.6. See these screenshots:

2.93.6:

It’s quite possible I’ve overlooked a setting somewhere, but for some reason in 3.0.0 there’s hardly any filtering of the blue radial gradients, but there is on the cube. I haven’t found out why yet. (The ‘color’ input pass isn’t an option in 3.0.0, so I had to use albedo instead.)

Temporal aside, about the blotches, are you sure you are using both albedo and normal passes and have prefiltering set to None or Accurate?

Although I agree that not all GPUs can use OptiX, I would point out that the majority of Nvidia card users should be able to use it, not just RTX owners. But OIDN actually does a better job than OptiX; people use OptiX mainly because it is faster on their GPU. It is also worth mentioning that OptiX will have temporal denoising soon, so hold on for that.

Maybe this should have a bug report?

I’ve tried changing the noise threshold, various tile sizes, and even rendering without tiles, but in vain. With a noise threshold and max 300 samples, the render doesn’t complete in under 10 to 12 minutes no matter what I try. In 2.93, it rendered in just 6 minutes with 300 max (on transmission), 240 (glossy) and 30 min (for the rest of the options) with BPT. (This is the Blender Guru doughnut and cup scene, which he did in v2.83. I might share the file if asked by developers.)

@brecht I NEED AN ANSWER!!

I don’t have a very powerful PC, but this is unfair!

Post your scene so that we see what you are dealing with. It is hard to speculate on such issues without seeing the scene.

I will provide the scene only at the request of a Blender developer.

It’s basically useless to adopt it, because on PCs with fewer cores it’s twice as slow! I tried so hard but couldn’t get the render under 10 minutes with 300 max samples. In 2.93, it took just 6 minutes to render. @brecht ! @leesonw !