You can do it with multiple AOVs, e.g. ID1, ID2, etc., so that 3 shaders write into the ID1 AOV (one per RGB channel), the next 3 into ID2, and so on.
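The bookkeeping behind this scheme can be sketched in plain Python. This is a minimal sketch that assumes one matte per R, G and B channel and 1-based matte numbers. The helper name `aov_slot` is hypothetical. It only computes which AOV and channel a given shader would write to and does not touch Blender's API.

```python
from __future__ import annotations

# Assumption: each ID AOV carries 3 mattes, one per colour channel.
CHANNELS = ("R", "G", "B")


def aov_slot(matte_index: int, prefix: str = "ID") -> tuple[str, str]:
    """Return (aov_name, channel) for a 1-based matte index (hypothetical helper)."""
    if matte_index < 1:
        raise ValueError("matte_index must be >= 1")
    zero_based = matte_index - 1
    aov_number = zero_based // len(CHANNELS) + 1  # ID1, ID2, ...
    channel = CHANNELS[zero_based % len(CHANNELS)]
    return f"{prefix}{aov_number}", channel


if __name__ == "__main__":
    for i in range(1, 7):
        print(i, aov_slot(i))
```

Shaders 1-3 land in ID1 (R, G, B) and shaders 4-6 land in ID2. Each extra AOV adds room for three more mattes.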

Another advantage over cryptomatte is that you can plug in painted masks.

For a character, you could paint masks for the lips, the eyes and the ears into the red, green and blue channels of an AOV.

You can still use painted masks with Cryptomatte: just assign the masked area a separate material and it’ll pass through.

If that doesn’t work for you, you can still use the AOV output from the shader for custom mattes.

The point of Cryptomatte was to solve exactly the issue you’re having. It solves 95% of matte issues for comp, and the rest is bespoke anyway. No more separating mattes by RGB across an unmanageable number of render layers and files to keep track of.

In fact, you can use REGEX-style wildcards in the Nuke Cryptomatte tool for procedural selection. For example, *metal* selects any object or material whose name contains the word “metal”.
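The idea of selecting mattes by name pattern can be modelled with Python’s `fnmatch` module. This is a sketch under assumptions: the names are hypothetical, and the exact pattern syntax the Nuke Cryptomatte tool accepts may differ. It only shows how a `*metal*` glob selects every name containing “metal”.

```python
from fnmatch import fnmatchcase


def select_names(names, pattern):
    """Return the names matching a glob-style pattern (case-sensitive)."""
    return [n for n in names if fnmatchcase(n, pattern)]


# Hypothetical object/material names as they might appear in a Cryptomatte manifest.
names = ["metal_railing", "wood_floor", "Brushed_metal_panel", "glass"]
print(select_names(names, "*metal*"))  # ['metal_railing', 'Brushed_metal_panel']
```

Because the selection is a pattern rather than a fixed list, newly added objects that follow the naming are picked up without touching the comp script.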

You’re much better off adopting Cryptomatte to its full potential.

I am using Cryptomattes wherever I can.

But in some cases it is good to know how else you can solve it.

In Arnold I can easily change the bit depth for each pass. In Redshift, I have to render the Crypto passes (together with other utility passes) in a separate render to save render time and memory.

I assume your 12 MB EXR file does not contain a lot of other passes?

The Cryptomattes are saved as a separate File Output from the compositor with 32-bit ZIP (as required by Crypto).

Our beauty renders, on the other hand, are a whopping 90 MB per frame per layer, even when saved as 16-bit float. We just had a ton of extra passes.

But for matting, saving Cryptomatte as a separate file makes it very, very manageable.

Keep in mind that Blender 2.92 fixed the bug where the Cryptomatte manifest was not written by the compositor.

Thanks for the reply, statix.

My statement that Cryptomatte is random was not right, and it’s good you corrected it so there is no misinformation.

I had just written the message below when I noticed that you suggested partial name selection in the Cryptomatte comp tool to select similar elements. That sounds like a working solution, and I will definitely take a look at it. So the message below is maybe not that relevant, but I’ll keep it because it is a well-working method for standard RGB mattes. All this discussion helps me learn more about using Blender in production.

The way Cryptomatte IDs are specified is different from how I’d like it. I’d like to prepare assets so that multiple different assets can share the same ID even if they don’t share naming or material. Mattes could then be assigned manually, similar to Object and Material Indexes.

Example:

- Building_A.blend
  - Building_A_windows (matte 1)
  - Building_A_walls (matte 2)
  - …
- Building_B.blend
  - Building_B_windows (matte 1)
  - Building_B_walls (matte 2)
  - …
- Building_C.blend
  - Building_C_windows (matte 1)
  - Building_C_walls (matte 2)
  - …

The assets above would be linked into a city scene where the render output has all similar elements in a single matte, so they can all be adjusted at once. I know this can be set up with Cryptomatte too, but probably due to my limited Blender knowledge, I don’t know how to assign custom attributes for Cryptomatte in Blender.
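A naming convention like the one in the example can carry the matte assignment on its own. The following is a minimal sketch assuming names of the form `<Asset>_<part>`. The part-to-matte table and the helper names are hypothetical. It groups every window under matte 1 and every wall under matte 2 regardless of which .blend or material they come from. In Blender, the resulting number could then be written into something like each object’s Pass Index (Object Index), but that step is not shown here.

```python
import re

# Hypothetical convention: <Asset>_<part>, where <part> decides the matte number.
MATTE_BY_PART = {"windows": 1, "walls": 2}
NAME_RE = re.compile(r"^(?P<asset>.+)_(?P<part>[^_]+)$")


def matte_for(object_name):
    """Return the matte number for a name, or None if it has no known part."""
    m = NAME_RE.match(object_name)
    if m is None:
        return None
    return MATTE_BY_PART.get(m.group("part"))


def group_by_matte(object_names):
    """Collect object names per matte number, skipping unassigned names."""
    groups = {}
    for name in object_names:
        matte = matte_for(name)
        if matte is not None:
            groups.setdefault(matte, []).append(name)
    return groups


names = ["Building_A_windows", "Building_A_walls", "Building_B_windows",
         "Building_C_walls", "Lamp"]
print(group_by_matte(names))
```

This grouping is the same one a Cryptomatte wildcard such as *_windows would give in comp, as long as the naming stays consistent.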


If all similar objects (e.g. windows) had the same material, they would be selectable at once with the standard Cryptomatte material selection, but this won’t always be the case. That’s why I personally like Object Index and Material Index.

I could point to another software for my described case and Cryptomatte custom attributes, but I can’t do that here. Besides, this is not really a comparison of Blender with something else. It’s about finding a solid way to specify fixed mattes early in production. Whatever the solution is, it’s fine as long as it’s easy.

As for storage, the file size difference between bit depths in my 2000x2000 px render comparison is bigger than it sounds in your case. Do you render everything in 32-bit?

Cycles render comparison with about 20 AOV layers, both saved as multilayer EXR with ZIPS compression:

- half float (16-bit): 225 MB
- full float (32-bit): 415 MB
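The gap is roughly what the raw data size predicts. This back-of-the-envelope sketch computes uncompressed pixel data only, ignoring headers and compression. The channel count of 60 (20 layers x 3 channels) is an assumption, because the post only says “about 20 AOV layers”.

```python
def raw_exr_megabytes(width, height, channels, bytes_per_channel):
    """Uncompressed pixel data in MB (1 MB = 10**6 bytes); headers ignored."""
    return width * height * channels * bytes_per_channel / 1e6


W, H = 2000, 2000
CHANNELS = 60  # assumption: ~20 AOV layers x 3 channels each

half = raw_exr_megabytes(W, H, CHANNELS, 2)  # 16-bit half float
full = raw_exr_megabytes(W, H, CHANNELS, 4)  # 32-bit full float
print(half, full, full / half)  # 480.0 960.0 2.0

# Ratio of the compressed sizes reported above.
print(round(415 / 225, 2))  # 1.84
```

Doubling the bytes per channel doubles the raw data. Lossless ZIP compression shrinks both files, so the reported ratio of about 1.84x stays a little under 2x.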

EDIT for statix: I just noticed that you save Cryptos as separate outputs. That answers my 16 vs 32-bit question and explains why you need less storage. That is probably what I need to do as well.

Just replied above, but we save our Crypto as 32-bit only. The beauty is always 16-bit float unless we need precision for Pworld-like passes. You can save both files from the same render in the compositor, so there’s little to no overhead anyway.

(Also, if you’re super stingy about storage space, you can save your beauty render and AOVs with DWAAB compression, which is effectively JPEG-like lossy compression for float images. You can get TINY file sizes, but decoding is slower than with ZIP or PIZ.)

Cryptomatte object and Cryptomatte material work -exactly- like object and material ID, but without the aliasing issues and with all the benefits of proceduralism.

If your naming is consistent, you can still do selections like *Building_A_windows* or just *A_windows* to predefine your matte selection in comp.

Keep in mind that you can also combine crypto material and crypto object selections.

So something like this is a perfectly valid way of doing it:

- Select all materials containing *window*
- but only in objects containing *BuildingVariationA*

And it would be consistent between renders, shots, sequences, etc.
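The combined selection is an AND of two pattern matches. This is a minimal sketch under assumptions: each sample is modelled as a hypothetical (object name, material name) pair and matching uses Python’s `fnmatch`. In comp, the same result would come from combining the Crypto material matte with the Crypto object matte.

```python
from fnmatch import fnmatchcase

# Hypothetical (object name, material name) pairs.
samples = [
    ("BuildingVariationA_01", "window_glass"),
    ("BuildingVariationA_01", "brick_wall"),
    ("BuildingVariationB_07", "window_glass"),
    ("BuildingVariationA_02", "window_frame"),
]


def combined_selection(samples, material_pattern, object_pattern):
    """Keep samples whose material AND object both match their patterns."""
    return [
        (obj, mat)
        for obj, mat in samples
        if fnmatchcase(mat, material_pattern) and fnmatchcase(obj, object_pattern)
    ]


print(combined_selection(samples, "*window*", "*BuildingVariationA*"))
```

The windows of BuildingVariationB and the brick wall of BuildingVariationA both drop out. Because the patterns rather than lists of IDs define the matte, the selection carries over between shots.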

We used to do full-on RGB mattes for VFX 10 years ago, but Crypto and Deep made comp and lookdev so much more pleasurable and flexible.

Yes! Using the compositor’s File Output node is definitely the way to go.

I just wanted to add that the compositor File Output node now stores metadata, so you can rename the passes there as you like (to fit your pipeline naming conventions) and store the Cryptomatte passes in the same file. It works fine in Nuke at least. Or it did: I see some bug reports saying that File Output node saving is broken, but it seems J Bakker is looking into it.


100% agreed, @statix. Although SakuP’s point about being able to create custom layers, as can be done in other DCCs, is perfectly valid.

Hi to everyone who’s been helping with suggestions for my matte output issue.

txo: Thanks for the note about renaming compositor passes in File Output. I’ve actually done that already, and it works as needed.

To conclude this last post on the matte topic: although there isn’t an existing Cycles option to do this directly, I have enough possibilities to cover my needs. But it wouldn’t hurt to add RGB matte AOVs directly in Cycles as well.

Below are the 2 best options at the moment, in my opinion.

1) Cryptomatte: This is technically the best option if your compositing software supports it. Cryptomatte passes need to be saved as a separate 32-bit File Output unless the main render output is also 32-bit. For my productions, this works.

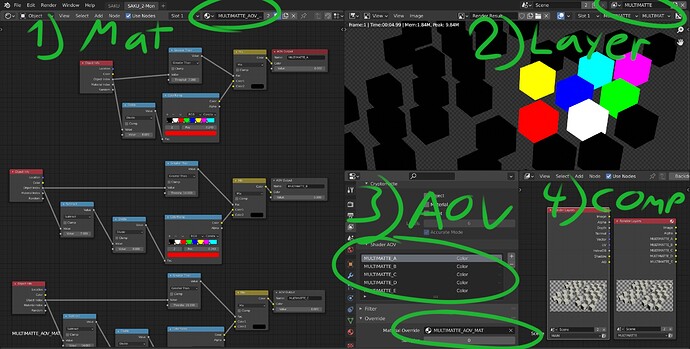

2) Create a separate matte pass with an override material and predefined AOVs. This does a separate render for the mattes, but it is fast to render anyway. It can be included in (connected to) the main File Output in the compositor regardless of bit depth, or saved separately with an additional File Output. This will work with most compositing and image processing software. The attached image shows 4 steps to prepare this in a startup file. The example has 5 matte AOV setups with 7 mattes each, covering object indexes 1-35.
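The post doesn’t say how 7 mattes fit into one RGB AOV. One plausible reading is the 7 non-black combinations of pure R, G and B. The following sketch assumes that reading, plus an arbitrary colour order, and maps object indexes 1-35 onto 5 AOVs. The helper names `encode` and `decode` are hypothetical.

```python
# Assumption: 7 mattes per AOV = the 7 non-black combinations of R, G, B.
COLORS = [
    (1, 0, 0), (0, 1, 0), (0, 0, 1),  # R, G, B
    (1, 1, 0), (1, 0, 1), (0, 1, 1),  # RG, RB, GB
    (1, 1, 1),                        # RGB
]
MATTES_PER_AOV = len(COLORS)  # 7
AOV_COUNT = 5                 # 5 x 7 covers object indexes 1..35


def encode(object_index):
    """Map a 1-based object index to (AOV number, RGB colour)."""
    if not 1 <= object_index <= MATTES_PER_AOV * AOV_COUNT:
        raise ValueError("object index out of range")
    i = object_index - 1
    return i // MATTES_PER_AOV + 1, COLORS[i % MATTES_PER_AOV]


def decode(aov_number, color):
    """Inverse of encode, valid only for a clean, fully covered pixel."""
    return (aov_number - 1) * MATTES_PER_AOV + COLORS.index(color) + 1


print(encode(1), encode(8), encode(35))
```

Unlike one matte per channel, mixed colours can become ambiguous on antialiased edges or where mattes overlap. That is a trade-off for packing more mattes into fewer AOVs.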

Additional note about AOVs: these could also be set up directly in the main render pass, which wouldn’t require a separate render with an override material. But in that case, to use Object Index values, the AOV setup has to be copied into every material in the scene instead of just one override material.

Extra: a World Position AOV is actually also missing in Cycles. In my previous production, it was rendered pretty much with the second method, as a manually created World Position shader render pass, because there is no existing pass for it.

Here’s how we do it.

For every scene layer (e.g. foreground, midground, background, etc.) we render 3 passes:

Pass 1 - Beauty

- Beauty with all shading components, denoised. 16-bit float ZIP.

- Cryptomatte, rendered and split out from the same render as the beauty. Saved as 32-bit float ZIP.

Pass 2 - Utility

This is applied as an override to the view layer / scene, so all objects get the same shader. This can cause issues if there are shaders that depend on transparency etc. (first-ray principle anyway), but it works for 80% of our cases.

- Utility, AUX texture passes, extra AOV mattes, Pworld

Pass 3 - Volumetric lighting

- A shader with a holdout overrides all the shaders in the scene. This is computationally much faster than setting the collection to “holdout”, which still loads textures and computes shading.

- Rendered on CPU with Branched Path Tracing, as it’s MUCH MUCH cleaner and MUCH faster than Path Tracing on GPU.

That covers 80-90% of our needs. The rest is a shot by shot decision.

Judging by the popularity of this tweet, node output previews are a hot, highly desired feature.

It’s a good idea, but almost every other 3D software I know of has struggled with it, usually because of the scale of procedural textures. When procedural textures use object- or world-space mapping coordinates, they often end up either too small or too large to display anything meaningful in the thumbnails.

The way around that would be to expose some sort of “preview size” parameter, which defines how large the surface the procedural texture is previewed on is. That way you could define whether the area the thumbnail displays is, let’s say, 50x50 centimeters or 50x50 meters, depending on the scale of the scene and material you are working on.
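The scale problem comes down to a simple ratio. This sketch uses a hypothetical 0.25 m pattern period, a 128 px thumbnail, and a hypothetical `preview_size_m` standing in for the proposed “preview size” parameter. It shows how the same texture reads at a 50 cm versus a 50 m preview area.

```python
def repeats_across_thumbnail(preview_size_m, texture_period_m):
    """How many times a procedural pattern repeats across the preview surface."""
    return preview_size_m / texture_period_m


def pattern_pixels(thumbnail_px, preview_size_m, texture_period_m):
    """Approximate width in pixels of one pattern period in the thumbnail."""
    return thumbnail_px * texture_period_m / preview_size_m


PERIOD_M = 0.25  # hypothetical brick-like pattern, 25 cm per repeat
THUMB_PX = 128   # hypothetical thumbnail width

for preview_size_m in (0.5, 50.0):
    print(preview_size_m,
          repeats_across_thumbnail(preview_size_m, PERIOD_M),
          pattern_pixels(THUMB_PX, preview_size_m, PERIOD_M))
```

At 0.5 m the pattern repeats twice at 64 px per period and reads clearly. At 50 m it repeats 200 times at well under a pixel each, so the thumbnail turns into an unreadable average. Storing the preview size per .blend file, as proposed above, would let one setting suit the scale of the whole scene.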

What I am worried about, though, is that it will be implemented the typical (wrong) Blender way. Such an option, if it exists, should be stored per .blend file, because users tend to work on scenes of varying scales.

But…

I am sure that if some Blender developer decides to do it, it will either be a user preferences option or, at the other extreme, a per-material setting. As a preference, users will not use it, because they would have to keep opening user preferences and changing it between the different .blend files they work on. Per material, the user would always have to adjust the setting for every new material they create, once again making it too much of a chore for thumbnails to be worth using.

So the bottom line is that while it’s a nice idea, the implementation is what matters, and the implementation details are where this will most likely fail, given the track record of development decisions.

I agree that scale should be taken into account.

Scale is also an issue in the material preview (Material Properties panel).

I wish there were an input field where the user could set the size of the preview object (sphere, matball, splash, etc.).

I have tested the Cycles X build and I am also very impressed, especially with the improved interactivity and update rate.

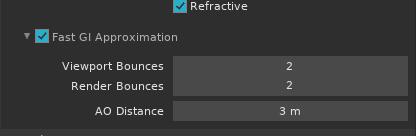

The only thing that caught me off guard is the same old UI/UX failure. The Simplify bounces option was moved and renamed, which is good:

But there is absolutely no indication anywhere in the UI that it relies on the Ambient Occlusion Factor value, which sits in a completely different rollout in a completely different properties tab (World). And none of the Fast GI Approximation setting tooltips even mentions the AO Factor value.

Why not make this its own separate thing, completely decoupled from AO?


Is rendering a single pass related to this request thread?

For example, I can’t render the AO pass separately; it also renders color, which wastes my time.

The AO Distance setting tells you this is related to AO, doesn’t it?

Also each tooltip is quite self-explanatory and refers to AO.

I think the reason it is not decoupled is that it would require a double AO calculation if one wants to use regular AO for rendering, or have AO in the output passes.

Still, it would be nice to be able to set an override background for it, independent of the world settings.

This way you could have an HDR or whatever you like in the background and still control the strength and tint of the Fast GI approximation. Tinted light is often an issue with this cool feature.

No, it doesn’t. If you are a new user, you have no clue there’s an additional Ambient Occlusion rollout in the World tab containing the “Factor” value, which is mentioned nowhere in the Fast GI Approximation UI.

You are making the common mistake of thinking about it from the perspective of a long-time Blender user who understands how all the different layers of nonsense cascade on top of each other and tie together. But if a new user tries to use the Fast GI Approximation feature, they will fail, because they have no idea there’s one more external parameter that is absolutely key to using Fast GI Approximation correctly.

You mean the AO factor, don’t you? You’re right, it should fit there too.

And maybe this multiplier could easily be decoupled from the main AO to work only for Fast GI?

On the broader topic, I’m not so keen on accommodating new users. There’s a whole manual available, and things can easily be found there, with explanations too.

I’m now in the process of learning Unreal, and from that perspective their UI is a pure nightmare IMHO. Everyone can do better, I guess.