Did https://developer.blender.org/rBdcdcc234884205a8f51eb3a56d0482078105e431 fix the issue for you?

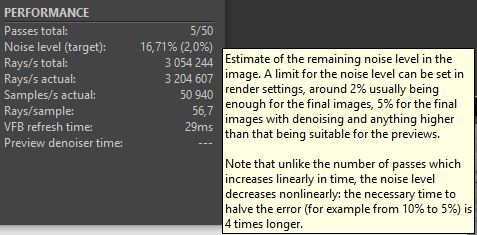

OK, we now have adaptive sampling since 2.83 is here. I would suggest making Cycles display the noise level while rendering a tile or the entire image, or even in the viewport. Why do we need that? Many render engines have this feature. It's the best way to decide what noise threshold to set for adaptive sampling, relative to the acceptable visual quality of the image. Instead of guessing the noise level, I need to see it in real time while rendering, then stop the render, set the threshold, and start the render again with the right optimised threshold.

Corona Renderer is a good example of that.

Any news about curvature in Cycles? We are now in production, and the main reason not to use Cycles for 100% of the production is that we don't have a curvature output like Substance.

I know it may seem like a small thing, but the curvature node is the only step, from a Cycles point of view, needed to turn Blender into a 100% usable texture creator. With that simple node we could do anything from a texture point of view inside Blender (at least for a normal asset).

Are there technical problems with implementing this node?

NVLink support would be really nice. Redshift has it working well on Windows and Linux, supporting memory pooling. It looks like it's working for geometry too. Of course, there's some overhead, so you don't get exactly linear scaling, but I would much rather be able to run 2 x 2080 Ti than buy a single RTX Titan just for the extra VRAM. Given that the 3080 Ti looks like it will only have 12GB of VRAM, the next generation of cards isn't going to help with the VRAM limits of consumer cards.

NVLink support is up for review.

Well, as of today it took only one month for the review to start, which is not too bad. Other patches have had much longer review times.

Different tile sizes for CPU and GPU when hybrid rendering would be great. They could be calculated automatically so that all CPU tiles combined would equal the speed of one GPU tile. At the moment, because they have to be the same size, once the last GPU tile has finished you're often left waiting a long time for the much slower CPU tiles to complete. The problem gets worse if you have a lot of CPU cores.
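To make the idea concrete, here is a minimal sketch of how a CPU tile size could be derived from a GPU tile size. It assumes square tiles and that per-device throughput (pixels per second) has somehow been measured; the function name and the throughput numbers are hypothetical, and this is not how Cycles schedules tiles.

```python
import math

def cpu_tile_side(gpu_tile_side, gpu_pixels_per_sec, cpu_thread_pixels_per_sec):
    """Pick a CPU tile side so one CPU thread finishes its tile in
    roughly the time the GPU needs for one GPU tile.

    Throughput values are assumed to be measured elsewhere (hypothetical).
    """
    gpu_area = gpu_tile_side ** 2
    # Scale the tile area by the speed ratio between one CPU thread and the GPU.
    cpu_area = gpu_area * cpu_thread_pixels_per_sec / gpu_pixels_per_sec
    # Never go below a 1x1 tile.
    return max(1, round(math.sqrt(cpu_area)))

# Example: the GPU is 16x faster than a single CPU thread.
print(cpu_tile_side(256, 16.0, 1.0))
```

With tiles sized like this, every device would finish its last tile at about the same time, which is the waiting problem described above.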

it would be nice if you could add defaults to all tools in blender. The cast modifier’s amount default value being set to 0.5 instead of 1 for example always bugs me.

First, I want to say that this fix https://developer.blender.org/rB77fdd189e4b22d2aa2410109a1a514d21f58d865 is greatly appreciated. It doesn't fix all the issues, but it's far better than it was before.

If there is one thing I can show my support for, it is a caching node or some other form of caching for shaders, so they aren't recalculated as a whole when the user makes small changes. With complex materials it could speed up work a lot.

Hi, I was using the latest version of Blender Cycles with adaptive sampling, and I noticed that it always takes the fully transparent background into consideration. This seems like wasted time. There is probably something I don't know, but could Cycles consider only the visible part when transparent film is active?

It can’t know a pixel is transparent without actually sampling it. With adaptive sampling though you should only get the minimum number of samples on the transparent background area. You can verify this with the Sample Count pass.

Thanks, I did a test with a cube, and Cycles does not seem to perform any iterations in the transparent areas. But without adaptive sampling it still passes through those areas, even in a scene with no objects at all. This is what I meant.

Ah, yeah. Cycles has to do at least a few samples to know if an area is skippable. So adaptive sampling IS the “empty zone skip” function you’re looking for. It just does some other stuff too like reducing samples on solid areas that are noise-free.

One last question: how can I view and use the values in the debug sample count pass?

It’s normalized by the number of max samples. Pixels with a value of 1.0 reached the full number of max samples, the darker/lower the value, the sooner they were cut off.
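As a small illustration of that normalization, the pass value can be converted back into an approximate sample count. This sketch assumes the pass stores samples taken divided by max samples, as described above; the helper name is made up for the example.

```python
def samples_taken(pass_value, max_samples):
    """Convert a normalized sample count pass value back to a sample count.

    Assumes pass_value = samples_taken / max_samples, as described in the post.
    """
    return round(pass_value * max_samples)

# A pixel with value 1.0 used every sample; 0.25 means it stopped early.
print(samples_taken(1.0, 128))
print(samples_taken(0.25, 128))
```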

You can use adaptive sampling to basically skip empty backgrounds, but it really only works on CPU rendering. The GPU does a bunch of samples before testing noise levels, whereas the CPU can do it every sample.
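Here is a toy sketch of the general idea, not Cycles' actual implementation. It assumes a simple standard-error estimate as the noise measure, and a hypothetical `check_interval` parameter to mimic testing noise only after a batch of samples instead of after every sample.

```python
import random
import statistics

def render_pixel(sample_fn, max_samples, min_samples, threshold, check_interval=1):
    """Toy adaptive sampler: stop once the estimated noise is low enough.

    The noise estimate (standard error of the mean) is an assumption made
    for this example only.
    """
    values = []
    for n in range(1, max_samples + 1):
        values.append(sample_fn())
        # Only test noise after the minimum sample count, every check_interval samples.
        if n >= max(min_samples, 2) and n % check_interval == 0:
            error = statistics.stdev(values) / n ** 0.5
            if error <= threshold:
                break
    return sum(values) / len(values), len(values)

# An empty (constant) background stops at the first noise check.
print(render_pixel(lambda: 0.0, 128, 4, 0.01))
# Checking only every 16 samples delays the cut-off.
print(render_pixel(lambda: 0.0, 128, 4, 0.01, check_interval=16))
# A noisy pixel keeps sampling until max_samples.
rng = random.Random(0)
print(render_pixel(rng.random, 128, 4, 0.001)[1])
```

The empty pixel still costs a few samples in both cases, but far fewer when the noise test runs after every sample.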

I saw this in @brecht weekly report:

Cycles

- Rename viewport denoise Fastest option to Automatic and extend tooltip

- Work towards final render denoising with OpenImageDenoise

and I'm wondering if "final render denoising with OIDN" means denoising as we used to do with NLM, without compositing.

In this case I'd advise making 3 different denoising levels: one for global denoising, as the node allows now; one per "component" (all diffuse denoised, plus all glossy denoised, etc.); and one per single pass (diffuse direct denoised + diffuse indirect denoised, multiplied by diffuse color, same for glossy, etc.).

This is because I've seen that denoising at the single-pass level preserves quite a good amount of detail, so the user could choose the quality/speed/memory tradeoff. BTW, there is an addon that does this in the compositor ("SuperDenoiser" or similar). Just to say.

Also a slider to set the amount of denoising (default at 1.0) would be welcome.
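The per-pass level and the strength slider suggested above can be sketched roughly like this. It is only an illustration: pixels are plain lists of floats, `box_blur` is a stand-in for a real denoiser such as OIDN, and all function names are hypothetical.

```python
def box_blur(pixels, radius=1):
    """Stand-in denoiser (assumption): a simple 1D box filter."""
    out = []
    for i in range(len(pixels)):
        window = pixels[max(0, i - radius):i + radius + 1]
        out.append(sum(window) / len(window))
    return out

def denoise_diffuse_per_pass(direct, indirect, color, denoise=box_blur):
    """Denoise the lighting passes separately, then recombine:
    (direct + indirect) * color. The color pass is left untouched."""
    d = denoise(direct)
    i = denoise(indirect)
    return [(a + b) * c for a, b, c in zip(d, i, color)]

def blend(noisy, denoised, amount=1.0):
    """Denoising strength slider: 0.0 keeps the noisy image, 1.0 is fully denoised."""
    return [n + (dn - n) * amount for n, dn in zip(noisy, denoised)]

print(denoise_diffuse_per_pass([1.0, 1.0, 1.0], [0.5, 0.5, 0.5], [0.5, 0.5, 0.5]))
print(blend([0.0, 1.0], [1.0, 1.0], amount=0.5))
```

The same recombination would apply to glossy and the other components, at the cost of running the denoiser once per pass.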

I think so

Hi.

I would like a dedicated Input node for working with geometry borders/edges and cavities (concavity, convexity), so that it can be used as a "Factor" when working with shaders.

I know that there are AO, Layer Weight, Pointiness and workarounds, but there is really no user-friendly setup or single node that gives you this type of geometry Factor in a simple way.

Well, I'm curious about some functions that I don't know if Cycles even has. For example, it seems that there is a specific type of solution for objects that rotate at high speed, like the rotor of a helicopter. When you see things like that on video or in real life, the object may appear to have a different type of motion blur. I think I heard about it being set up in some renderer or game engine several years ago. I don't even know if it's really necessary.

Speaking of halos of light, sparkles. It seems that Cycles only generates it through post production filters. Is there a renderer that uses some calculation of the physics of light to generate this kind of brightness? There are several situations, in real life, where I see how complex this situation is. The glow in the sunlight, the moon and the stars; In the city lights at night; On certain surfaces such as water on a sunny day or in a well-lit environment, car surfaces, other metals, glittery objects, which also includes some types of car bodywork; Lights from different sources, even with only a glow effect; Bright lights through depth of field.

Is there a solution using light physics instead of filters?

Another similar question is how the physically correct materials are processed? For example, is there a technique aimed at scanning the luminous properties of a material? Like color, brightness, reflection and scattering of light … It would be the equivalent of scanning a 3D object or photogrammetry instead of modeling it based on an image.

It also makes me curious about the light reflected from certain surfaces. Especially speaking of eyeballs. From humans or animals. An animal filmed by a camera, especially at night, has a clear glow in its eyes due to the return of light over the retina. Not that I understand how it works. But even when we see someone’s eyes up close, it seems that part of that small image formed on the surface of the person’s eye comes from a light projected from inside the eye. As if it were not 100% reflection of the environment around the person in the crystalline part that surrounds the eyeball.

And I wanted to know how outdated this 2015 Blender Guru post is:

Even more in the hair part.

Very outdated:

These are not “the rest” of the render engines right now. LuxRender does not exist anymore; now it’s LuxCore, and it’s very different, way faster than it used to be, and also way more powerful, especially in the ArchViz realm.

There is no mention of Corona, Redshift or Eevee (even though Eevee is not a path tracer, it’s being used as a render engine too).

At that time there was no “Principled Hair” shader in Cycles, and a lot of other features were missing in both Cycles and the other engines.

Besides all that, the test was, IMHO, very biased due to the lack of deep knowledge of the tested engines. One example is that the hair “sample” with Vray was terrible, while Vray has been able to render amazing hair since well before 2015.

So not a good test to be trusted IMHO

You are confusing different types of motion blur, fake ones and “real” ones. For post-production motion blur, it is possible to use “curved” extrapolation of the motion vectors, which delivers a more “true” result. Proper true motion blur depends on the previous and next frames and the samples added. So in general Cycles uses true motion blur, unless you just save motion vectors and generate the motion blur in post.

All render engines generate this in post production. Some have a simple post-production interface to generate it right after the render, others don’t. In Cycles you can do it with Blender’s compositing editor, but to my knowledge there is no engine that generates these kinds of effects while rendering. It would be a waste of computing time IMHO.

All the effects you mention are lens effects, imperfections and are done in post.

Even in real-life photography you use lens filters to keep these effects under control; otherwise your lens is of poor quality.

You can get that if you model the eye as it’s supposed to be. The reflection is not coming from the retina but from the interior of the eyeball or specific parts of the animal’s eye. For example, in humans it’s “red eyes” because the light reflects off our blood; in cats the light is reflected by a layer called the “tapetum lucidum”, or shining layer, which is part of their eyes. If you want fully physically correct things, you have to make them fully physically correct (or fake them… as we all do hahaha)

Now, the reflection of the environment comes from a different layer of the eye. It’s easy to look into; there is lots of information out there, both scientific and for 3D production.

And I already answered the hair part for you in the other post.