This is why I thought of mipmaps when I read the first post. The variants concept sounds very cool and useful in theory, but if memory and rendering performance aren’t a priority for the design, the actual usefulness of variants will be limited to small, simple scenes.

And Blender already suffers from poor memory and rendering performance as it is, so I’d assume RAM use and overall performance have to be a big part of the design.


Given that Blender is aiming to align its notion of Variants with USD Variants, looking at them through the lens of LOD management and mipmapping is a misstep. USD VariantSets are heavily design-oriented: they’re full composition arcs, with nesting and dynamic insertion features that aren’t a good fit for managing LODs efficiently at render time. Variants work as an ad-hoc way to store LODs on disk, but that’s it, and that’s without getting into the complexities of HLODs.

But that’s also why the idea of having a generic Representation axis is so interesting. If Blender goes the route of building Representations as a sort of conceptual extension of Purposes, and then builds a LOD system on top of that, they’ll be in a good position to deliver performance optimizations without running directly contrary to the USD spec.

For the LOD design, it would be cool to have a fully automatic mode: for example, automatic LOD generation from LOD 0 to LOD 5, or a custom range from LOD 0 up to a final LOD 10, with each LOD decimating 10% of the topology (or a custom value entered by the user). Or simply have everything handled automatically.
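To make the "10% per LOD" idea concrete, here is a rough sketch of the arithmetic. It assumes the reduction compounds, meaning each LOD keeps 90% of the faces of the previous one; the post could also be read as removing 10% of the original mesh per level. The function name and parameters are made up for illustration and are not part of any Blender API.

```python
def lod_face_counts(base_faces, levels, reduction=0.10):
    """Return target face counts for LOD 0..levels.

    Assumption: each LOD removes `reduction` of the faces of the
    previous LOD (compounding), and never drops below one face.
    """
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    counts = [base_faces]
    for _ in range(levels):
        counts.append(max(1, round(counts[-1] * (1.0 - reduction))))
    return counts


if __name__ == "__main__":
    # LOD 0 is the full-resolution mesh, LOD 5 the coarsest.
    for level, faces in enumerate(lod_face_counts(10000, 5)):
        print(f"LOD {level}: {faces} faces")
```

Dividing each count by the LOD 0 count gives a ratio relative to the original mesh, which is the kind of value a decimation tool would typically want as input.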

And for the transition, a smooth blend at each LOD change. So in fact something like this (and again, for the distance handling and LOD generation, a fully automatic system driven by camera view / distance): Locally-Adaptive Level-of-Detail for Hardware-Accelerated Ray Tracing
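As a very simplified illustration of camera-distance-driven LOD selection with a smooth transition (this is not the method from the linked paper, just a plain distance-threshold sketch), something like the following could pick a LOD and a blend factor. The threshold values, the `blend_width` parameter and the function name are all assumptions made for the example.

```python
from bisect import bisect_right


def select_lod(distance, thresholds, blend_width=0.0):
    """Pick a LOD index and a blend factor from camera distance.

    thresholds: ascending distances; crossing thresholds[i] switches
                from LOD i to LOD i + 1.
    blend_width: size of the band before each threshold in which the
                 current LOD fades toward the next one.
    Returns (lod, blend), where blend in [0, 1) is how far the
    transition toward lod + 1 has progressed.
    """
    lod = bisect_right(thresholds, distance)
    # Coarsest LOD, or blending disabled: nothing to fade toward.
    if blend_width <= 0.0 or lod >= len(thresholds):
        return lod, 0.0
    band_start = thresholds[lod] - blend_width
    if distance <= band_start:
        return lod, 0.0
    return lod, (distance - band_start) / blend_width


if __name__ == "__main__":
    switch_distances = [10.0, 20.0, 40.0]  # hypothetical values
    for d in (5.0, 18.0, 19.5, 20.0, 100.0):
        print(d, select_lod(d, switch_distances, blend_width=4.0))
```

The blend factor reaches 1 right as the distance hits the threshold, at which point the index steps up and the factor resets to 0, so the visible result stays continuous as long as `blend_width` is smaller than the gap between thresholds.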

See my RClight : Right-Click Select — Blender Community

Translation: Geometry Nodes can be used to import a Collection and perform some distance calculations.

Could Variants be interesting for modelling?

One idea is to have different body parts, and ‘weld’ selected parts to a single mesh.

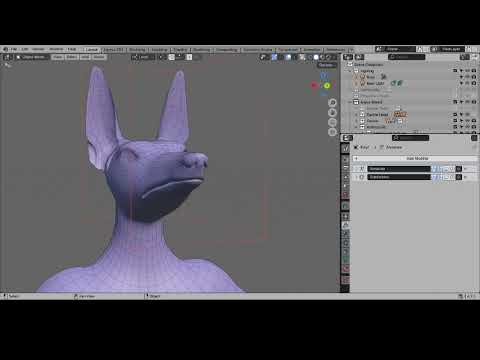

Here is an example video.

Welding meshes in Blender. Welding the same canine head on different bodies.

Might Variants be useful in a ‘welding’ workflow?

For the effect in the video, I guess it’s simply an extrude and merge, relying on both open loops having the same number of points.

Re: Image representations

The best way of looking at this is: do you want to download the 8K, a 4K or 2K version of a HDRI map?

Mipmaps, caching and rendering performance are orthogonal to having different representations for the online assets.

I’ve seen it used before, but do you mind clarifying what orthogonal means in this context?

To phrase it in USD terms, would it be fair to frame those image representations as payloads behind a URI-based AssetResolver? Would information about the unloaded representations be present in the stage, or would it all be on the AssetResolver to control what’s downloaded from the asset server? You can get a decent equivalent to a multi-representation asset loading system by pushing the work onto the AssetResolver, but it ends up only being a loading system; it doesn’t really give you anything to build off from in the composed stage itself.

I don’t expect Blender’s Asset system to be a pure subset of USD functionality, but the LOD schema proposal is headed in the direction of a more generalized representation system and I’m curious to see where some of the ideas might overlap.

It’s WIP, but you can try it.

Not sure it belongs here, so I’ll message the file to you privately.

This will be great. I’m currently tinkering with Reality Composer Pro and VisionOS. Apple has been making Blender a part of their demonstrations btw.

I just went over some material on USD variants. This feature has become a core part of RealityKit and VisionOS. Being able to build production-ready USD files right out of Blender will be amazing. I landed here because I was trying to find a way to use USD variants on Mac without running command-line tools. I haven’t found one yet. I have a project using the same geometry for 10 different variations, and I don’t currently have an easy way to author those variants in a USD file.
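For what it’s worth, the "same geometry, many variations" case maps fairly directly onto a single VariantSet in a `.usda` text file. Below is a minimal sketch that writes such a layer by hand using only the Python standard library. The prim names (`Asset`, `Geom`), the variant set name (`look`) and the colours are placeholders I made up, the mesh is left empty, and in practice the usual route would be the USD Python API rather than string building.

```python
def build_usda_with_variants(prim_name, variant_set, colors, default):
    """Return .usda text with one shared mesh and one variant per colour.

    colors: dict mapping variant name -> (r, g, b) display colour.
    default: name of the variant selected when the file is opened.
    All names here are illustrative placeholders.
    """
    if default not in colors:
        raise ValueError("default must be one of the variant names")
    lines = [
        "#usda 1.0",
        "(",
        f'    defaultPrim = "{prim_name}"',
        ")",
        "",
        f'def Xform "{prim_name}" (',
        "    variants = {",
        f'        string {variant_set} = "{default}"',
        "    }",
        f'    prepend variantSets = "{variant_set}"',
        ")",
        "{",
        "    # Shared geometry lives here once (points and faces omitted).",
        '    def Mesh "Geom"',
        "    {",
        "    }",
        "",
        f'    variantSet "{variant_set}" = {{',
    ]
    for name, (r, g, b) in colors.items():
        # Each variant only overrides the display colour of the shared mesh.
        lines += [
            f'        "{name}" {{',
            '            over "Geom"',
            "            {",
            f"                color3f[] primvars:displayColor = [({r}, {g}, {b})]",
            "            }",
            "        }",
        ]
    lines += ["    }", "}", ""]
    return "\n".join(lines)


if __name__ == "__main__":
    looks = {"red": (1, 0, 0), "green": (0, 1, 0), "blue": (0, 0, 1)}
    with open("asset_variants.usda", "w", encoding="utf-8") as f:
        f.write(build_usda_with_variants("Asset", "look", looks, "red"))
```

The point of the structure is that the geometry is defined once outside the VariantSet and each variant only carries the small override, which is what keeps ten variations from turning into ten copies of the mesh.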